Thursday, February 14th 2019

AMD Doesn't Believe in NVIDIA's DLSS, Stands for Open SMAA and TAA Solutions

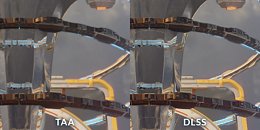

A report via PCGamesN describes AMD's stance on NVIDIA's DLSS as a rather decided one: the company stands for further development of SMAA (Enhanced Subpixel Morphological Antialiasing) and TAA (Temporal Antialiasing) solutions on current, open frameworks, which, according to AMD's director of marketing, Sasa Marinkovic, "(...) are going to be widely implemented in today's games, and that run exceptionally well on Radeon VII", instead of investing in yet another proprietary solution. While AMD pointed out that DLSS' market penetration is low, that's not the main point of contention. Instead, AMD goes head-on against NVIDIA's own technical presentations, which compare DLSS' image quality and performance benefits against a native-resolution, TAA-enhanced image: AMD says that SMAA and TAA can work equally well without "the image artefacts caused by the upscaling and harsh sharpening of DLSS."
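TAA, one of the two techniques AMD is backing, reuses information from previous frames. Its core resolve step can be sketched as an exponential blend of each new (sub-pixel-jittered) frame into an accumulated history buffer, with the history clamped to the current frame's local range to limit ghosting. This is a minimal sketch under stated assumptions, not AMD's or any engine's implementation: frames are tiny grayscale arrays, the camera is assumed static so motion-vector reprojection is omitted, and the names `taa_resolve`, `neighborhood_min_max`, and the blend weight `alpha` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical grayscale frame: rows of floats in [0, 1].
Frame = List[List[float]]


def neighborhood_min_max(frame: Frame, y: int, x: int) -> Tuple[float, float]:
    """Min and max of the 3x3 neighborhood around (y, x), cut off at the borders."""
    h, w = len(frame), len(frame[0])
    values = [
        frame[j][i]
        for j in range(max(0, y - 1), min(h, y + 2))
        for i in range(max(0, x - 1), min(w, x + 2))
    ]
    return min(values), max(values)


def taa_resolve(current: Frame, history: Frame, alpha: float = 0.1) -> Frame:
    """Blend the current jittered frame into the accumulated history.

    Assumption: static camera, so history[y][x] already lines up with
    current[y][x] and no reprojection is performed.
    """
    out: Frame = []
    for y, row in enumerate(current):
        out_row = []
        for x, cur in enumerate(row):
            lo, hi = neighborhood_min_max(current, y, x)
            # Neighborhood clamp: reject history values the current frame
            # cannot explain, which limits ghosting.
            hist = min(max(history[y][x], lo), hi)
            # Exponential moving average: small alpha = more history weight.
            out_row.append(alpha * cur + (1.0 - alpha) * hist)
        out.append(out_row)
    return out


if __name__ == "__main__":
    # An edge pixel that alternates between 0 and 1 as the jitter moves.
    frames = [[[0.0, 1.0 if k % 2 == 0 else 0.0, 1.0]] for k in range(60)]
    history = frames[0]
    for frame in frames[1:]:
        history = taa_resolve(frame, history)
    print(history)
```

Feeding it frames whose edge pixel flips between 0 and 1 converges toward a value near 0.5, i.e. an antialiased edge. A smaller `alpha` gives a smoother result but keeps stale history around longer, which is where TAA's ghosting and blur complaints come from once the scene moves.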

Of course, AMD may only be speaking from the point of view of a competitor that has no competing solution. However, company representatives said that they could, in theory, develop something along the lines of DLSS via a GPGPU framework - a task for which AMD's architectures are usually extremely well-suited. AMD seems to take the edge off its own DLSS-defusing moves, however, as AMD's Nish Neelalojanan, a Gaming division exec, talks about potential DLSS-like implementations across "Some of the other broader available frameworks, like WindowsML and DirectML", and says that these are "something we [AMD] are actively looking at optimizing… At some of the previous shows we've shown some of the upscaling, some of the filters available with WindowsML, running really well with some of our Radeon cards." So whether this is an actual image-quality philosophy or just a matter of a competing technology's TTM (time to market), only AMD knows.
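AMD's complaint about "upscaling and harsh sharpening" can be illustrated without any machine learning. The sketch below is a conventional spatial upscaler, not DLSS and not anything taken from WindowsML or DirectML: it linearly interpolates a single grayscale scanline to a higher resolution, then applies an unsharp mask to restore apparent crispness. The function names, the 3-tap blur kernel, and the `amount` parameter are assumptions chosen for illustration.

```python
from typing import List


def upscale_linear(row: List[float], factor: int) -> List[float]:
    """Linearly interpolate a scanline to `factor` times its length."""
    n = len(row)
    out = []
    for i in range(n * factor):
        # Map the center of output sample i back into input coordinates,
        # clamping at the edges of the scanline.
        pos = (i + 0.5) / factor - 0.5
        pos = min(max(pos, 0.0), n - 1.0)
        left = int(pos)
        right = min(left + 1, n - 1)
        t = pos - left
        out.append((1.0 - t) * row[left] + t * row[right])
    return out


def unsharp_mask(row: List[float], amount: float) -> List[float]:
    """Sharpen by adding back `amount` times (signal - 3-tap blur)."""
    n = len(row)
    out = []
    for i in range(n):
        blurred = (row[max(i - 1, 0)] + 2.0 * row[i] + row[min(i + 1, n - 1)]) / 4.0
        out.append(row[i] + amount * (row[i] - blurred))
    return out


if __name__ == "__main__":
    edge = [0.0, 0.0, 1.0, 1.0]
    up = upscale_linear(edge, 2)
    print(up)                     # the hard edge is now a soft ramp
    print(unsharp_mask(up, 1.0))  # sharpening overshoots below 0 and above 1
```

Upscaling the hard edge `[0, 0, 1, 1]` by 2 softens it into `[0, 0, 0, 0.25, 0.75, 1, 1, 1]`; sharpening that with `amount=1.0` pushes the samples beside the ramp to -0.0625 and 1.0625. That overshoot and undershoot is what appears as bright and dark halos along edges in real images when sharpening is applied too aggressively.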

Source:

PCGamesN

170 Comments on AMD Doesn't Believe in NVIDIA's DLSS, Stands for Open SMAA and TAA Solutions

Edit: sorry, lol. Couldn't resist.

Some time ago I attended the Warsaw Security Summit conference (hope Cucker will be thrilled, because it was held in Poland).

There was a session on innovation with AI and deep learning for cameras, and since I was working on video surveillance systems, I attended. Using AI and/or deep learning techniques, the camera was able to recognize human emotions. It could tell if somebody was sad or stressed, etc. Basically, it would tell you if a dude was up to something, because his behavior and face would give it away, like someone who's about to commit a crime. That's innovation, and it was demonstrated and later analyzed with in-depth information and code.

What do we have with DLSS here? People hear "Deep Learning Super Sampling" and assume it must be great, since "deep learning" is in the name. That marketing is shenanigans, bull crap on a barn floor. Take a look at the TPU review; there's a comparison there. It's not only about the algorithm, but also its purpose, whether it's meant for this particular job (should deep learning be used for this?), and of course whether it's worth it.

I don't know your game preferences, but imagine a motion blur effect. Did you like it in games when you wanted to excel at a particular game? I didn't. I always wanted a nice, smooth, crisp image. With DLSS, it seems like we are going backwards under the Deep Learning flag, with the promise and motto "The way it's meant to be played" from now on :p. It's just sad.

TL;DR;

Still frames are much easier to compare and scrutinize than live action. Until I see it in person while I am playing, I will withhold final judgement. That said, I likely will never see it as I likely won't be buying a 2000 series card.

Anyone who knows how GPUs and ray tracing work understands that the new RT cores only handle part of the rendering. GPUs are in fact a collection of various specialized hardware accelerators (geometry processing, tessellation, TMUs, ROPs, video encoding/decoding, etc.) plus clusters of ALUs/FPUs for generic math. Even in a fully ray-traced scene, 98% of this hardware will still be used. The RT cores are just another type of specialized accelerator for one specific task. And it's not like everything can or will be ray-traced: UI elements in a game, a page in your web browser, or your Windows desktop are rasterized because it's efficient, and that will not change.

I'm waiting to see what the next few updates do. Depending on the game I might turn DLSS on, but at this point, RT is not worth the smearing that DLSS causes.

Heh, and yet G-Sync spawned FreeSync™.

BTW, many thought Vega was going to be a Pascal killer too…

It shows how ray tracing eats resources and how we are still limited by the hardware currently available on the market. I think this video shows what is needed and gives an example of other ways to achieve a ray-traced scene in games, now or in the future. We will have to see where and how it will be done and how it will work.

RT is being processed concurrently with rasterization work. There are some prerequisites - generally G-Buffer - but largely it happens at the same time.

I think so. Without RT cores there would be more room for rasterization (unless RT cores do rasterization as well? I don't think so). I'm not so sure now, since my statement surprised you, although it makes sense: RT cores take up die space, so without them the 2080 Ti would have been faster in rasterization.