Thursday, February 14th 2019

AMD Doesn't Believe in NVIDIA's DLSS, Stands for Open SMAA and TAA Solutions

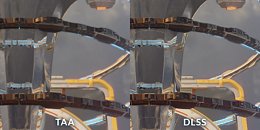

A report via PCGamesN describes AMD's stance on NVIDIA's DLSS as a rather decided one: instead of investing in yet another proprietary solution, the company stands for further development of SMAA (Enhanced Subpixel Morphological Anti-Aliasing) and TAA (Temporal Anti-Aliasing) on current, open frameworks, which, according to AMD's director of marketing, Sasa Marinkovic, "(...) are going to be widely implemented in today's games, and that run exceptionally well on Radeon VII". While AMD pointed out that DLSS' market penetration is low, that is not the main point of contention. In fact, AMD goes head-on against NVIDIA's own technical presentations, comparing DLSS' image quality and performance benefits against a native-resolution, TAA-enhanced image: AMD says that SMAA and TAA can work equally well without "the image artefacts caused by the upscaling and harsh sharpening of DLSS."

Of course, AMD may only be speaking from the point of view of a competitor that has no competing solution of its own. However, company representatives said that they could, in theory, develop something along the lines of DLSS via a GPGPU framework, a task for which AMD's architectures are usually extremely well suited. AMD does seem to take some of the edge off its DLSS-defusing stance, though, as Nish Neelalojanan, an exec in AMD's Gaming division, talks about potential DLSS-like implementations across "Some of the other broader available frameworks, like WindowsML and DirectML", and says that these are "something we [AMD] are actively looking at optimizing… At some of the previous shows we've shown some of the upscaling, some of the filters available with WindowsML, running really well with some of our Radeon cards." So whether this reflects a genuine image-quality philosophy or simply a time-to-market (TTM) gap against a competing technology, only AMD knows.

Source:

PCGamesN

170 Comments on AMD Doesn't Believe in NVIDIA's DLSS, Stands for Open SMAA and TAA Solutions

And I think the pictures are labeled wrong as well. But we need more information: what resolution they were taken at, what resolution DLSS scales up from (filling in some of the details), and what the exact TAA and SMAA settings are.

Looking at the comparison images in this thread, the ones labeled DLSS look far better. Okay, the DLSS knockers think they are labeled wrong; maybe they are, who knows. He he.

trog

I think NVIDIA should have spent its silicon budget on many more CUDA cores, and found a solution like the one the Xbox 360 promised: basically free 2x MSAA or more.

Personally, I think AMD probably also wants to offer something similar to DLSS (hence the earlier talk about using DirectML), but something like DLSS isn't simply a matter of "injecting" AA into the game. DLSS needs a network trained with ML on the game's images first. NVIDIA is willing to shoulder that training cost itself instead of passing it on to game developers. Will AMD be willing to do the same? Some people say this latest effort from NVIDIA is just a waste of money, but that's simply how they roll: they push something, and when it doesn't work for them, they move on. They could add more CUDA cores, but then they would face another problem, and we already get a glimpse of that problem with the RTX 2080 Ti.

The Lies about DLSS

1. It improves performance (now we know this is actually a vague statement, because it has a lot of limitations).

2. It maintains or sometimes even increases image quality (this is also a lie, because it doesn't; it actually makes the image look worse than current scaler methods because of the obnoxious blur effect).

Jensen also went on saying that in some cases DLSS can give you an image that looks much, much better than the resolution it is upscaling to. I think this was DLSS 2X? So in one of the presentations he basically said that a 1440p image upscaled to 4K will look better than the 4K image itself.

Where are the SMAA or TSSAA 8x comparison shots? I think AMD should invest heavily in those two, since they cost almost nothing in performance.

It's as if the labels were mixed up here; in reality, the DLSS image is heavily blurred.

You don't even have to label these, because it's very obvious: one of the images is very blurry, as if some sort of depth-of-field effect were being applied to the scene.

:banghead::banghead:

In other news - water is wet.

I would be worried about their mental health if they said NVIDIA's proprietary solution is cool or something. NVIDIA left itself wide open for this one. Nice jab, though in line with the usual marketing bullshit on the topic. A native-resolution, TAA-enhanced image will always be better than a DLSS image, because DLSS is not native resolution. At the same time, DLSS will be roughly 40% faster.

Taking the example of "4K" images and comparisons, tests so far tend to show that a DLSS image upscaled from 1440p is roughly on par with 1800p + TAA in both image quality and performance. Native 4K + TAA will be considerably slower; 1440p + TAA will be faster but uglier. Correct. But they neglect to mention that this approach has a clear downside: it uses the same compute resources that are otherwise directly used for rendering. It is not clear whether, and how much, DLSS uses the CUDA cores in addition to the Tensor cores, but the impression so far is that DLSS is leaner on compute than it would be without Tensor cores.
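The resolution trade-off discussed above can be put into rough numbers. The sketch below only counts pixels for the three output resolutions mentioned, assuming the standard 16:9 sizes 3840x2160, 3200x1800 and 2560x1440, and assuming that shading cost scales with pixel count. That is a simplification: it ignores geometry, post-processing and the cost of the DLSS pass itself, none of which are modeled here.

```python
# Rough pixel-count comparison of render resolutions against native 4K.
# Assumption: per-pixel shading dominates frame cost, so the pixel ratio
# is only an optimistic estimate of the savings from rendering fewer pixels.

RESOLUTIONS = {
    "2160p (4K)": (3840, 2160),
    "1800p": (3200, 1800),
    "1440p": (2560, 1440),
}


def pixel_count(width: int, height: int) -> int:
    """Number of pixels shaded per frame at the given resolution."""
    return width * height


def relative_to_native(native: str = "2160p (4K)") -> dict[str, float]:
    """Fraction of the native resolution's pixels that each resolution shades."""
    base = pixel_count(*RESOLUTIONS[native])
    return {name: pixel_count(*wh) / base for name, wh in RESOLUTIONS.items()}


if __name__ == "__main__":
    for name, share in relative_to_native().items():
        print(f"{name:>11}: {share:6.1%} of native 4K pixels")
```

Under that assumption, 1440p shades about 44% of the pixels of native 4K and 1800p about 69%, so any fixed per-frame cost (including the upscaling pass) eats into the apparent savings of the lower resolution.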

That said, they aren't wrong... DLSS is dead in the water. LOL. Where is the blur? It's too dark to see... :D