Thursday, February 14th 2019

AMD Doesn't Believe in NVIDIA's DLSS, Stands for Open SMAA and TAA Solutions

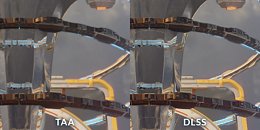

A report via PCGamesN describes AMD's stance on NVIDIA's DLSS as a rather decided one: the company stands for further development of SMAA (Enhanced Subpixel Morphological Antialiasing) and TAA (Temporal Antialiasing) solutions on current, open frameworks, which, according to AMD's director of marketing, Sasa Marinkovic, "(...) are going to be widely implemented in today's games, and that run exceptionally well on Radeon VII", instead of investing in yet another proprietary solution. While AMD pointed out that DLSS' market penetration is low, that's not the main point of contention. Instead, AMD goes head-on against NVIDIA's own technical presentations, which compared DLSS' image quality and performance benefits against a native-resolution, TAA-enhanced image. AMD says that SMAA and TAA can work just as well without "the image artefacts caused by the upscaling and harsh sharpening of DLSS."

Of course, AMD may only be speaking from the point of view of a competitor that has no competing solution. However, company representatives said that they could, in theory, develop something along the lines of DLSS via a GPGPU framework, a task for which AMD's architectures are usually extremely well suited. AMD seems to take the edge off its DLSS-defusing moves, however, as AMD's Nish Neelalojanan, a Gaming division exec, talks about potential DLSS-like implementations across "Some of the other broader available frameworks, like WindowsML and DirectML", and says that these are "something we [AMD] are actively looking at optimizing… At some of the previous shows we've shown some of the upscaling, some of the filters available with WindowsML, running really well with some of our Radeon cards." So whether this is an actual image-quality philosophy, or just a matter of the competing technology's TTM (time to market), only AMD knows.

Source:

PCGamesN

170 Comments on AMD Doesn't Believe in NVIDIA's DLSS, Stands for Open SMAA and TAA Solutions

Said that before: the grapes are getting even more sour, with AMD leaving RT features out for NVIDIA to claim for themselves.

Edit: To add to that, if AMD had RTRT features, that crap would be off. It looks terrible. I might give it a go on Metro (if I had it) but as long as it looks like BFV, that shit is off.

The fact it's shiny? Yes, it is, which stands out when it's applied to hair.

Still beats the default hair, and I like how it has no performance impact. I liked HairWorks in Witcher 3 more, but that came with a big performance penalty. Not everyone has enough GPU resources to spare for that.

Edit: I'll cut you a break because I didn't explicitly say reflective surfaces. However, I figured someone with your intellect would have put it together.

you said "like the feature I saw in BF5"

BF5 has raytraced reflections only. you're literally complaining how nvidia reflections are shiny.

don't worry tho, I'm enjoying this, it's hilarious :laugh:

Puddles, cars, windows: everything in a PC game that uses SSR is overexposed to show off the effect.

Gotcha.

Nice Ninja.

£150~ RX 570 8GB mustard rice ~

I think TAA looks good. It's in Fallout 4 and Warframe. I'd take it over a dumb computer program guessing what a super-sampled image should look like. Especially when you consider how much die space is taken up by those Tensor cores. Honestly, those tensors are for AI training in servers and pro cards and NVIDIA is trying to sell them to gamers so they are OK paying more for less performance because the dies are bigger. ~

For me it's a way of achieving at least the same result, or better, in a cost-efficient way (there's more, but this is a good example). RTX pricing is huge, and this innovation is nothing like what we already have with open techniques. It is worse.

I disagree with your statement. This is what I think about the DLSS. It's my opinion. If you say it's worth something, that's OK with me.

I also disagree that having dozens of techniques to achieve one goal is a great approach. You can't focus on so-called "innovations" and start from scratch every time, when you have everything that's needed already there and open to everybody. Think about game developers and gamers. Do you realize what would have happened if we had these "innovations" once a year? Developers wouldn't implement every single one.

So for me at this point, DLSS is a downgrade. :)

Interesting topic: When does the AI program become so intelligent that it doesn't want to upscale your video games? :)