Thursday, February 14th 2019

AMD Doesn't Believe in NVIDIA's DLSS, Stands for Open SMAA and TAA Solutions

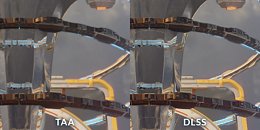

A report via PCGamesN places AMD's stance on NVIDIA's DLSS as a rather decided one: the company stands for further development of SMAA (Enhanced Subpixel Morphological Antialiasing) and TAA (Temporal Antialiasing) solutions on current, open frameworks, which, according to AMD's director of marketing, Sasa Marinkovic, "(...) are going to be widely implemented in today's games, and that run exceptionally well on Radeon VII", instead of investing in yet another proprietary solution. While AMD pointed out that DLSS' market penetration is low, that's not the main point of contention. In fact, AMD goes head-on against NVIDIA's own technical presentations, comparing DLSS' image quality and performance benefits against a native-resolution, TAA-enhanced image - the company says that SMAA and TAA can work equally well without "the image artefacts caused by the upscaling and harsh sharpening of DLSS."

Of course, AMD may only be speaking from the point of view of a competitor that has no competing solution. However, company representatives said that they could, in theory, develop something along the lines of DLSS via a GPGPU framework - a task for which AMD's architectures are usually extremely well-suited. AMD seems to take the edge off its DLSS-defusing moves, however, as AMD's Nish Neelalojanan, a Gaming division exec, talks about potential DLSS-like implementations across "some of the other broader available frameworks, like WindowsML and DirectML", and says that these are "something we [AMD] are actively looking at optimizing… At some of the previous shows we've shown some of the upscaling, some of the filters available with WindowsML, running really well with some of our Radeon cards." So whether it's an actual image-quality philosophy, or just a matter of a competing technology's TTM (time to market), only AMD knows.

Source:

PCGamesN

170 Comments on AMD Doesn't Believe in NVIDIA's DLSS, Stands for Open SMAA and TAA Solutions

Don't be insulting other members.

Don't be bickering back and forth with each other with off topic banter.

Thanks.

That's it. I see the sarcasm in your post. As for HairWorks, it doesn't look as good as some might think. Perhaps it's a matter of opinion whether one likes it or not.

As for DLSS, that's complete garbage. AMD has no reason to compete with something that's already proven to be a complete failure.

My updated Two Cents

That also underlines the primary motive for Nvidia. The motive is not 'the tech/hardware is capable now'. They just repurposed technology to 'make it work', and that is the starting point from which they'll probably iterate.

Nevertheless, I do agree, given the RT implementations we've seen at this point and the die sizes required to get there. On the other hand, if you see the way Nvidia built up Turing, it's hard to imagine getting the desired latencies etc. for all that data transport to a discrete RT/tensor-only card.

Your float is overflow safe so long as division uses a higher bitstep than the addition when taking the average of pixels.

Figure 1. Tensor cores signficantly accelerate FP16 and INT8 matrix calculations

*Signficantly* Really, Nvidia?

Obviously this is just my experience on my hardware for just one game implementation, but it has notably improved my enjoyment of this game.