Monday, February 18th 2019

AMD Radeon VII Retested With Latest Drivers

Just two weeks ago, AMD released their Radeon VII flagship graphics card. It is based on the new Vega 20 GPU, which is the world's first graphics processor built using a 7 nanometer production process. Priced at $699, the new card performs about 20% faster than the Radeon RX Vega 64, which brings it much closer to NVIDIA's GeForce RTX 2080, although in our testing it still trailed the RTX 2080 by 14%. For the launch-day reviews, AMD provided media outlets with a press driver dated January 22, 2019, which we used for our review.

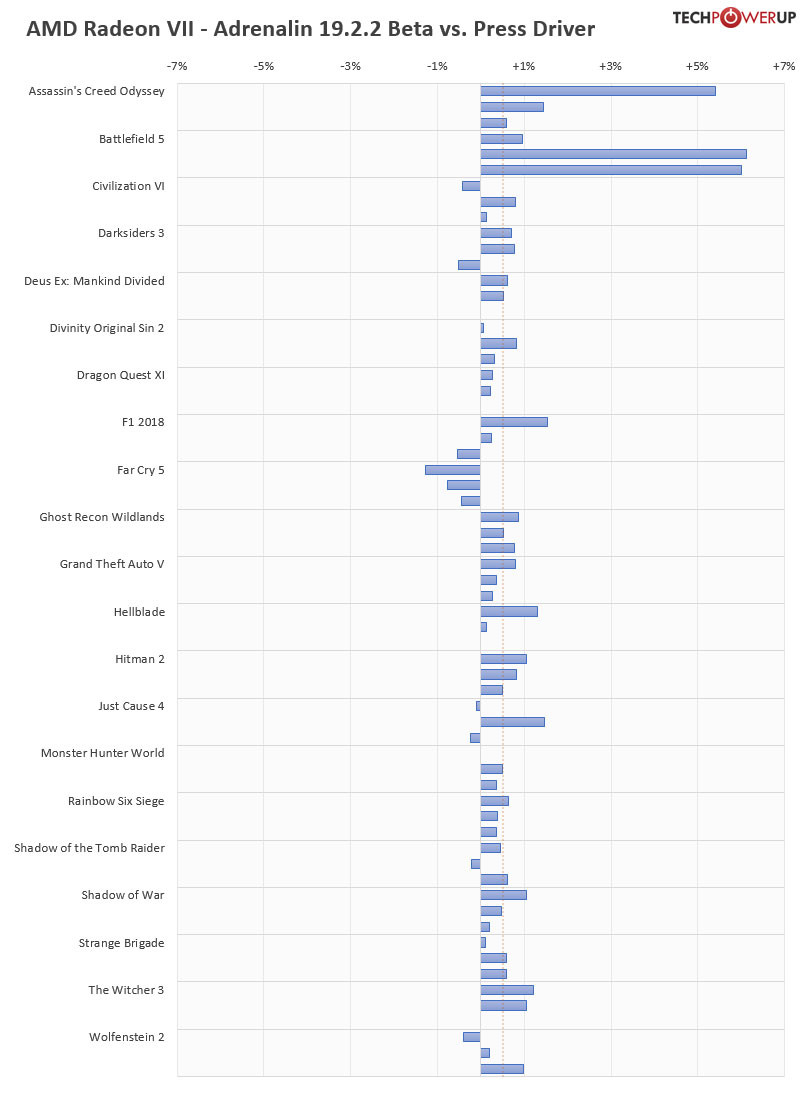

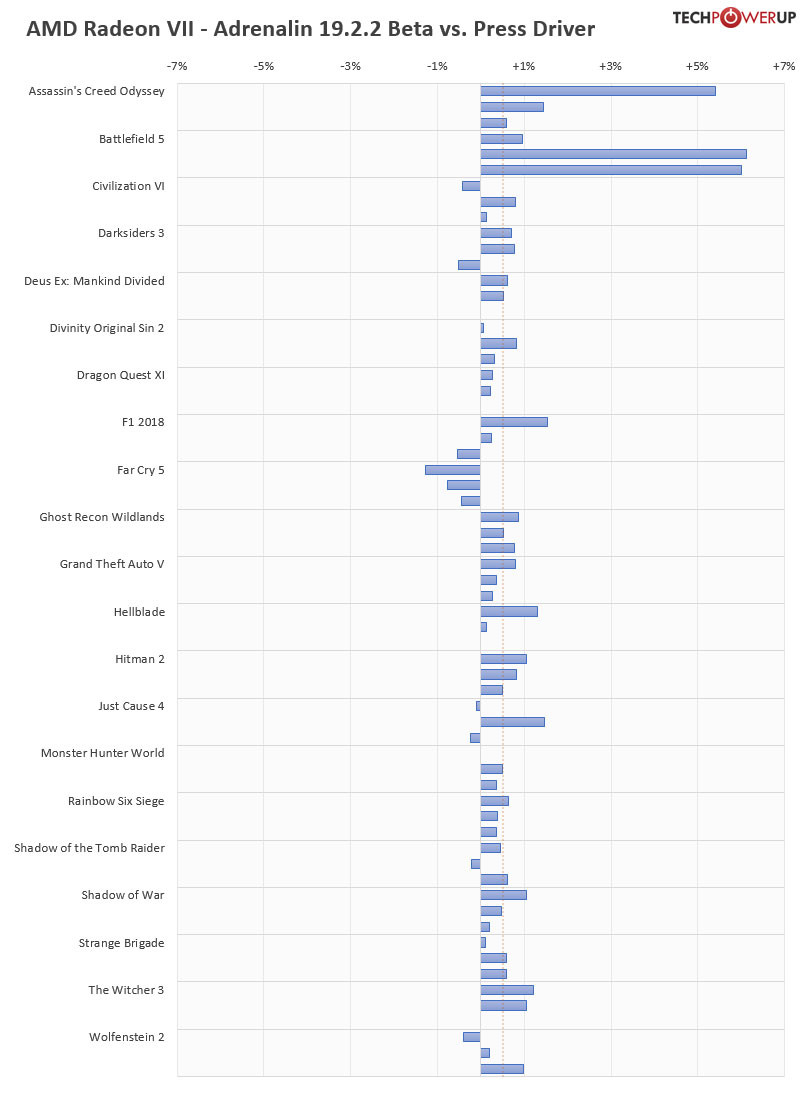

Since the first reviews went up, people in online communities have been speculating that these were early drivers and that new drivers would significantly boost the performance of Radeon VII, helping it make up lost ground against the RTX 2080. There's also the mythical "fine wine" phenomenon, where the performance of Radeon GPUs supposedly improves significantly over time. We've put these theories to the test by retesting Radeon VII with AMD's latest Adrenalin 2019 19.2.2 drivers, using our full suite of graphics card benchmarks. In the chart below, we show the performance deltas compared to our original review. For each title, three resolutions are tested: 1920x1080, 2560x1440 and 3840x2160 (in that order).
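Each per-title delta is a ratio of the new frame rate to the old one at a given resolution. The minimal Python sketch below assumes hypothetical FPS numbers (not our measurements). It also assumes a geometric mean for the overall figure, which is a common way to average ratios but not necessarily the method behind the chart.

```python
import math

# Hypothetical frame rates for illustration only (NOT TechPowerUp's data).
# (title, resolution) -> (fps with original press driver, fps on retest)
results = {
    ("Title A", "1920x1080"): (120.0, 121.2),
    ("Title A", "2560x1440"): (90.0, 90.9),
    ("Title A", "3840x2160"): (50.0, 50.5),
    ("Title B", "1920x1080"): (150.0, 150.0),
}


def percent_delta(old_fps: float, new_fps: float) -> float:
    """Relative change in percent; +1.0 means the retest is 1% faster."""
    return (new_fps / old_fps - 1.0) * 100.0


def geometric_mean_delta(pairs) -> float:
    """Average per-test ratios geometrically (assumed method), returned as a percent change."""
    pairs = list(pairs)
    log_sum = sum(math.log(new / old) for old, new in pairs)
    return (math.exp(log_sum / len(pairs)) - 1.0) * 100.0


for (title, res), (old, new) in results.items():
    print(f"{title:8} {res:10} {percent_delta(old, new):+.2f}%")
print(f"overall: {geometric_mean_delta(results.values()):+.2f}%")
```

Working with ratios rather than raw FPS differences keeps high-frame-rate 1080p results from dominating the summary.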

Please note that these results include performance gained from the washer mod and thermal paste change we had to make when reassembling the card. These changes reduced hotspot temperatures by around 10°C, allowing the card to boost a little higher. To separate the gains due to the new driver from those due to the thermal changes, we first retested the card using the original press driver (with the washer mod and new thermal paste). This yielded a +0.2% performance improvement.

Using the latest 19.2.2 drivers added +0.45% on top of that, for a total improvement of +0.653%. Taking a closer look at the results, we can see that two specific titles have seen significant gains from the new driver version. Assassin's Creed Odyssey and Battlefield V both achieve several-percent improvements, so it looks like AMD has worked some magic in those games to unlock extra performance. The remaining titles see small but statistically significant gains. This suggests there are some "global" tweaks AMD can implement to improve performance across the board; unsurprisingly, these gains are smaller than those from title-specific optimizations.
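Sequential gains like these combine multiplicatively rather than by simple addition, though at sub-1% magnitudes the difference is negligible. The minimal Python sketch below uses the rounded step figures quoted above, which compound to roughly +0.65%. The stated +0.653% therefore presumably reflects unrounded per-step values; that is an inference, not something the text states.

```python
def compound(*step_percents: float) -> float:
    """Chain sequential percentage gains multiplicatively; return the total gain in percent."""
    factor = 1.0
    for pct in step_percents:
        factor *= 1.0 + pct / 100.0
    return (factor - 1.0) * 100.0


thermal_step = 0.2  # press driver after washer mod + new thermal paste (rounded figure from the text)
driver_step = 0.45  # Adrenalin 19.2.2 on top of that (rounded figure from the text)

total = compound(thermal_step, driver_step)
print(f"compounded: {total:.3f}%, simple sum: {thermal_step + driver_step:.3f}%")
```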

Looking further ahead, it seems plausible that AMD can increase the performance of Radeon VII down the road. We doubt, however, that enough optimizations can be found to match the RTX 2080, unless a lot of developers suddenly jump on the DirectX 12 bandwagon (which seems unlikely). It's also a question of resources: AMD can't spend time and money micro-optimizing every single title out there. Instead, the company seems to be doing the right thing by investing in optimizations for big, popular titles like Battlefield V and Assassin's Creed. Given how many new titles are coming out using Unreal Engine 4, and how far AMD lags behind in those titles, I'd focus on UE4 optimizations next.

182 Comments on AMD Radeon VII Retested With Latest Drivers

Seems like those who were begging for a retest over performance issues didn't leave the wine in the cask long enough. :)

Edit: Hilarious that this gets downvoted. Another polarizing toxic fanboy goes on ignore at TPU. I wonder if there is a max number of users one can ignore here... lol

You got what performance up front, when the 960 beat the 780 Ti ($699)? Come again?

AMD perf improves over time, while nVidia cards fall behind not only AMD, but also their own newer cards.

As the card you bought gets older, NV doesn't give a flying sex act.

It needs quite a twisting to turn this into something positive.

What's rather unusual this time is that AMD is notably behind on perf/$, at least with the game list picked by TP.

The 290X was slower than the 780 Ti at launch, but it cost $549 vs $699, so there goes "I get 10% at launch" again.

Performance per watt on recent AMD top-end cards has been a disaster too. Vega 64 does not beat the 1080 while pulling 100% more power.

Radeon VII is just as bad.

The only reason the 290 series aged well was that the 390 series was a rebrand, and AMD kept optimizing them to try and keep up with Nvidia. Both series had notoriously bad performance per watt.

Once again, 7970 was the last good AMD top-end GPU.

That's beyond my comprehension.

Should I count the 500 series twice? I don't know why, but there might be green reasons.

I would count rebrands as what they are: zero.

Now, if you'd stop twisting reality, we'd have to mention:

=> Polaris => Vega => Vega 7nm

That's all the releases we had between the 290X and now. On the green side of things:

=> Pascal => [.... Volta never materialized in consumer market....] => Tesla

Every single person with knowledge of GPUs knows that the reference 980 Ti performs MUCH worse than custom cards out of the box (yet even the reference card can make the Fury X look weak once overclocked).

www.techpowerup.com/reviews/Gigabyte/GTX_980_Ti_G1_Gaming/33.html

www.techpowerup.com/reviews/MSI/GTX_980_Ti_Lightning/26.html

35-40% OC gain in the end, over 980 Ti reference. Completely wrecks Fury X at the end of the day.

Meanwhile, the Fury X can gain 3% from OC, and it bumps up the power usage like crazy tho. OVERCLOCKERS DREAM!!!

Imagine the amount of "finewine" that has to happen until Radeon VII matches Turing on efficiency. They would have to make it run on happy thoughts (which, I imagine, you have in spades).

And sure, since Nvidia optimizes for their newest architecture, an older product may become a little slower.

It would look exactly the same with AMD, if they actually changed the architecture from time to time.

But since they're still developing GCN, it's inevitable that older cards will get better over time.

We may see this change when they switch to a new architecture (if we live long enough, that is...).

They made it very good at launch. I'm fine with that. I buy every product as is. I have no guarantee of future improvements and I don't assume they will happen.

What other durable goods you buy become better during their lifetime? Why do you think this is what should be happening?

Do your shoes get better? Furniture? Cars?

When my car is recalled for repair, I'm quite mad that they haven't found it earlier. These are usually issues that shouldn't go through QC.

I imagine this is what you think: "What a lovely company: I already paid them for the car, but they keep fixing all these fatal flaws!"

Seriously, you'll lecture me on twisting?

AMD has done a solid job with their drivers (though this release was quite awkward at best for some), and results do improve with time in some titles (as they do with Nvidia)... not all. This is also partly due to the stagnant GCN architecture that prevailed for several generations.

Anyway, I'm happy the day-one issues are mitigated... time will tell about performance improvements.

Last time I checked, on a rather AMD-unfriendly TP, the power gap was 25%, so, well, there is that.

But you are missing the point: for FineWine(tm) to work, it just needs to get better over time; there are no particular milestones it needs to beat.

There is no price parity between the 2080 and the VII; the latter comes with 3 games and twice the RAM.

I'm glad the 960 beating the $699 780 Ti is justifiable.

That's a dead horse, why not just leave it there?

The 290X was a bit behind, but it was $549 vs $699, so there was nothing "but better" about it. You paid 25%+ more for about 10% more perf at launch, which gradually disappeared.
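The value argument above comes down to comparing the price ratio with the performance ratio. The minimal Python sketch below takes the quoted $699 and $549 launch prices and the commenter's approximate 10% performance lead as given inputs, not measured data. On those inputs, a roughly 27% price premium for a 10% lead leaves the 780 Ti with about 14% less performance per dollar.

```python
def price_premium_pct(price_a: float, price_b: float) -> float:
    """How much more card A costs than card B, in percent."""
    return (price_a / price_b - 1.0) * 100.0


def relative_perf_per_dollar(perf_ratio: float, price_a: float, price_b: float) -> float:
    """Perf/$ of card A divided by perf/$ of card B; below 1.0 means A is the worse value."""
    return perf_ratio / (price_a / price_b)


# Launch prices quoted in the comment; the 1.10 performance ratio is the commenter's rough figure.
premium = price_premium_pct(699.0, 549.0)
value = relative_perf_per_dollar(1.10, 699.0, 549.0)
print(f"780 Ti price premium: {premium:.1f}%, relative perf/$: {value:.3f}")
```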

Assassin's Creed Origins (NVIDIA Gameworks, 2017)

Battlefield V RTX (NVIDIA Gameworks, 2018)

Civilization VI (2016)

Darksiders 3 (NVIDIA Gameworks, 2018), old game remaster; where's Titanfall 2?

Deus Ex: Mankind Divided (AMD, 2016)

Divinity Original Sin II (NVIDIA Gameworks, 2017)

Dragon Quest XI (Unreal 4 DX11, large NVIDIA bias, 2018)

F1 2018 (2018). Why? Microsoft's Forza franchise is larger than this Codemasters game.

Far Cry 5 (AMD, 2018)

Ghost Recon Wildlands (NVIDIA Gameworks, 2017), missing Tom Clancy's The Division

Grand Theft Auto V (2013)

Hellblade: Senua's Sacrifice (Unreal 4 DX11, NVIDIA Gameworks)

Hitman 2

Monster Hunter World (NVIDIA Gameworks, 2018)

Middle-earth: Shadow of War (NVIDIA Gameworks, 2017)

Prey (DX11, NVIDIA Bias, 2017)

Rainbow Six: Siege (NVIDIA Gameworks, 2015)

Shadow of the Tomb Raider (NVIDIA Gameworks, 2018)

SpellForce 3 (NVIDIA Gameworks, 2017)

Strange Brigade (AMD, 2018)

The Witcher 3 (NVIDIA Gameworks, 2015)

Wolfenstein II (NVIDIA Gameworks, 2017); results differ from www.hardwarecanucks.com/forum/hardware-canucks-reviews/78296-nvidia-geforce-rtx-2080-ti-rtx-2080-review-17.html, where a certain Wolfenstein II map exceeded RTX 2080'

Apple: Yes, we're slowing down older iPhones

I colored things.

And wow.

Isn't Hitman an AMD title though?

And the 980 Ti is still the faster card,

by 6% in PCGH's review (and this is stock):

www.pcgameshardware.de/Radeon-VII-Grafikkarte-268194/Tests/Benchmark-Review-1274185/2/

and by even more in ComputerBase's ranking list (Rangliste); there's no 980 Ti there, but the Fury X does very poorly:

www.computerbase.de/thema/grafikkarte/rangliste/

Also, calm down, you're having a fanboy tantrum all over this thread.

It's good we have a choice, right?

But I agree with you in this case: Maxwell was awesome.

So what? I will replace the card at some point. I'll get a new one with a new "10% more performance at launch". I will keep having faster cards.

And you generally pay more (in %) than the performance difference is. It has to be that way, because the market just wouldn't work (convergence).

I don't think I have ever seen an attempt to seriously undervolt a Turing GPU for comparison, though.

The Glacier engine used to favor AMD a lot back in Absolution and initially in Hitman; it was one of AMD's DX12 showcases. Eventually, the results pretty much evened out in DX12. Not sure about DX11. When developing Hitman 2, they dropped the DX12 renderer and kept going with DX11.

So the drivers are stable now, good. How is the noise, @W1zzard? Any improvements in that department, e.g. changes in the fan profile?

Trying to prove that Fury X can perform on par with a 980 Ti reference was fun tho. Remember the 30-40% OC headroom next time.

I'm out xD

Either way, all this toxicity is getting rather boring...

trog

Also, none of the above, period.

No retaliatory comments, either.

Stay on topic.

Thank You

Counting Prey as favouring AMD (i.e. it could be even worse) it's:

AMD: 4

Nvidia: 14

undecided: 4

AMD: 4/18 ~= 22%

Nvidia: 14/18 ~= 78%

which is basically the market share these companies have in discrete GPU.
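The share arithmetic in this comment leaves the undecided titles out of the denominator. The small Python sketch below reproduces it from the comment's own tallies. The vendor labels are the commenter's classification, not an objective measure.

```python
from collections import Counter

# Tallies taken from the comment: each benchmarked title labeled by perceived bias.
labels = ["AMD"] * 4 + ["Nvidia"] * 14 + ["undecided"] * 4
counts = Counter(labels)


def decided_shares(counts: Counter) -> dict:
    """Share of each vendor among titles with a decided label (undecided titles excluded)."""
    decided = counts["AMD"] + counts["Nvidia"]
    return {vendor: counts[vendor] / decided for vendor in ("AMD", "Nvidia")}


for vendor, share in decided_shares(counts).items():
    print(f"{vendor}: {counts[vendor]}/{counts['AMD'] + counts['Nvidia']} = {share:.0%}")
```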

Do you think it should be 50:50? Or what? And why?

Many have misconceptions about what optimizations really are. Games are rarely specifically optimized for targeted hardware, and likewise drivers are rarely optimized for specific games in their core. The few exceptions to this are cases to deal with major bugs or bottlenecks.

Games should not be written for specific hardware; they are written using vendor-neutral APIs. Game developers should focus on optimizing their engine for the API, and driver developers should focus on optimizing their driver for the API, because when they try to cross over, that's when things start to get messy. When driver developers "optimize" for games, they usually manipulate general driver parameters and replace some of the game's shader code. In most cases it's not so much optimization as an attempt to remove stuff without you seeing the degradation in quality. Games have long development cycles and are developed and tested against API specs, so it's obviously problematic when a driver suddenly diverges from the spec and manipulates the game, and this generally causes more problems than it solves. If you have ever experienced a new bug or glitch in a game after a driver update, now you know why…

This game "optimization" stuff is really just about manipulating benchmarks, and it has been going on since the early 2000s. If only the vendors spent this effort on actually improving their drivers instead, we'd be far better off!

Not at all. Many AAA titles are developed exclusively for consoles and then ported to PC; if anything, there are many more games with a bias favoring AMD than Nvidia.

Most people don't understand what causes games to be biased. First of all, a game is not biased just because it scales better on vendor A than on vendor B. Bias is when a game has severe bottlenecks or special design considerations, either intentional or "unintentional", that give one vendor a disadvantage it shouldn't have. Some games scaling better on one vendor and others scaling better on another isn't a problem by itself: games are not identical, and different GPUs have different strengths and weaknesses, so we should use a wide selection to determine real-world performance. Significant bias happens when a game is designed around one specific feature and scales badly on different hardware configurations. A good example of this is games built for consoles that don't scale well to much more powerful hardware. But in general, games are much less biased than most people think, and just because a benchmark doesn't confirm your presumptions doesn't mean it is biased.

Over the past 10+ years, every generation has improved by ~5-10% within its lifecycle.

AMD is no better at driver improvements than Nvidia; this myth needs to die.

FUD, which has been disproven several times. I don't believe Nvidia has ever intentionally sabotaged older GPUs.