Monday, February 18th 2019

AMD Radeon VII Retested With Latest Drivers

Just two weeks ago, AMD released their Radeon VII flagship graphics card. It is based on the new Vega 20 GPU, which is the world's first graphics processor built using a 7 nanometer production process. Priced at $699, the new card offers performance levels 20% higher than Radeon RX Vega 64, which should bring it much closer to NVIDIA's GeForce RTX 2080. In our testing we still saw a 14% performance deficit compared to RTX 2080. For the launch-day reviews AMD provided media outlets with a press driver dated January 22, 2019, which we used for our review.

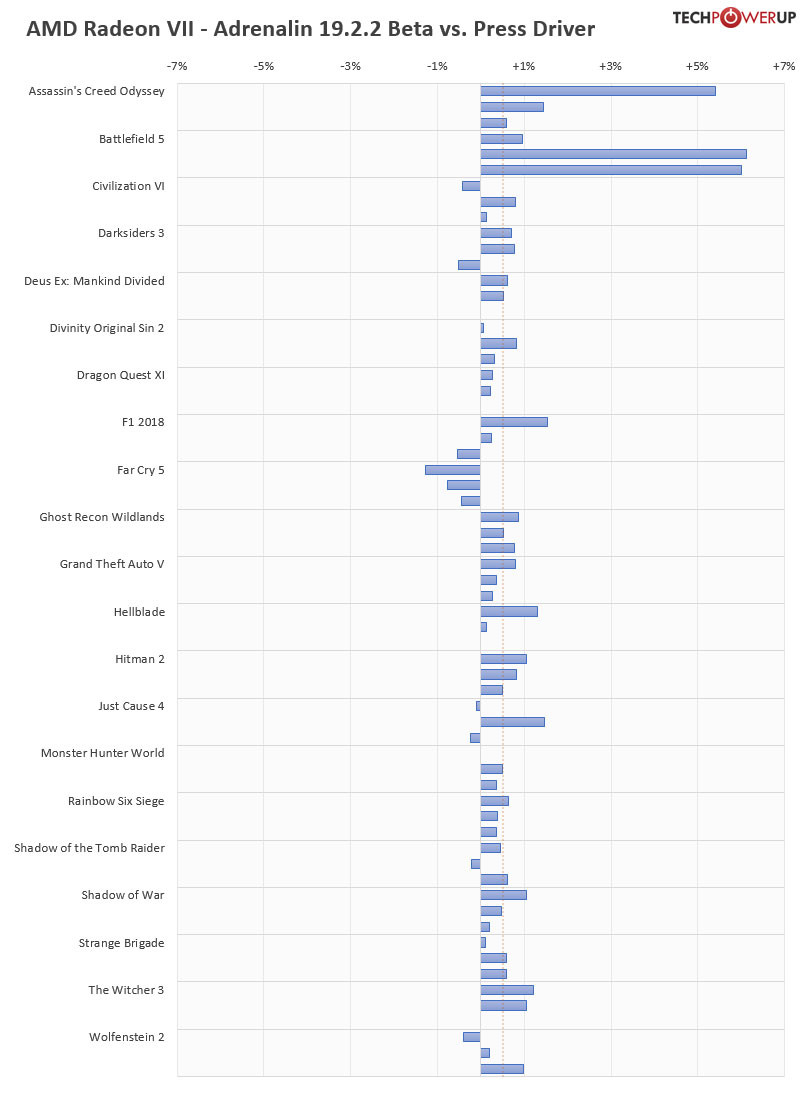

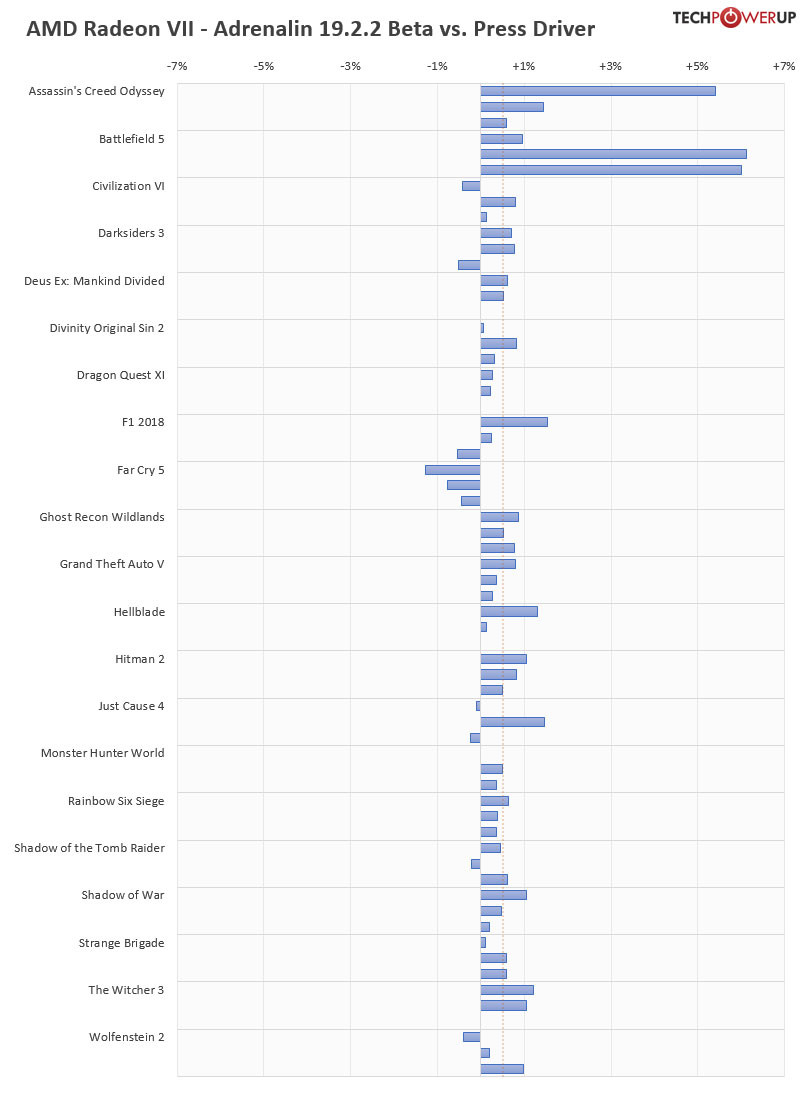

Since the first reviews went up, people in online communities have been speculating that these were early drivers and that new drivers will significantly boost the performance of Radeon VII, to make up lost ground against RTX 2080. There's also the mythical "fine wine" phenomenon, where the performance of Radeon GPUs improves significantly over time, incrementally. We've put these theories to the test by retesting Radeon VII with AMD's latest Adrenalin 2019 19.2.2 drivers, using our full suite of graphics card benchmarks. The chart shows the performance deltas compared to our original review. For each title, three resolutions are tested: 1920x1080, 2560x1440, and 3840x2160 (in that order).

Please do note that these results include performance gained by the washer mod and thermal paste change that we had to do when reassembling the card. These changes reduced hotspot temperatures by around 10°C, allowing the card to boost a little bit higher. To separate the performance improvements due to the new driver from those due to the thermal changes, we first retested the card using the original press driver (with washer mod and TIM). The result was +0.2% improved performance.

Using the latest 19.2.2 drivers added +0.45% on top of that, for a total improvement of +0.653%. Taking a closer look at the results, we can see that two specific titles have seen significant gains due to the new driver version. Assassin's Creed Odyssey and Battlefield V both achieve several-percent improvements; it looks like AMD has worked some magic in those games to unlock extra performance. The remaining titles see small but statistically significant gains, suggesting that there are some "global" tweaks that AMD can implement to improve performance across the board. Unsurprisingly, these gains are smaller than those from title-specific optimizations.
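The way the two gains stack can be sketched as follows. This is a minimal illustration, assuming the thermal and driver gains compound multiplicatively; the function name `combine_gains` is hypothetical. Because the inputs are the rounded figures quoted above, the result only approximates the reported +0.653% total.

```python
def combine_gains(*gains_percent):
    """Compound successive relative gains, each given in percent."""
    factor = 1.0
    for gain in gains_percent:
        factor *= 1.0 + gain / 100.0
    return (factor - 1.0) * 100.0


# Rounded figures taken from the text above.
thermal_gain = 0.2   # washer mod + TIM, measured on the original press driver
driver_gain = 0.45   # 19.2.2 driver, measured on top of the thermal changes

total = combine_gains(thermal_gain, driver_gain)
print(f"combined gain: {total:.2f}%")
```

At gains this small, compounding and simple addition are practically indistinguishable, so either reading of "on top of that" leads to roughly the same total.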

Looking further ahead, it seems plausible that AMD can increase performance of Radeon VII down the road, even though we have doubts that enough optimizations can be discovered to match RTX 2080. That might change if a lot of developers suddenly jump on the DirectX 12 bandwagon, which seems unlikely. It's also a question of resources: AMD can't waste time and money micro-optimizing every single title out there. Rather, the company seems to be doing the right thing by investing in optimizations for big, popular titles like Battlefield V and Assassin's Creed. Given how many new titles are coming out using Unreal Engine 4, and how much AMD is lagging behind in those titles, I'd focus on optimizations for UE4 next.

182 Comments on AMD Radeon VII Retested With Latest Drivers

FP64 is almost completely useless when it comes to gaming. It is useful in certain types of compute scenarios. Both manufacturers have struggled to find a balance between workstation/server/GPGPU cards and consumer cards in terms of compute features. If you look at the history, both have also settled to the balance points they decided upon - AMD at 1:16 and Nvidia at 1:32, with both trying to have a compute GPU at the top of their lineups that can do 1:2 or thereabouts.

When it comes to FP64, AMD history looks like this (a little messy due to reuse of GPUs over generations):

- HD4000/5000 high and midrange cards have FP64 at 1:5 FP32 (4870/4850/4770/4750, 5870/5850/5830). Lowend does not do FP64.

- Some of (higher end) HD6000/7000 have 1:4 (Tahiti, 7950/7970). HD7000 midrange has 1:16 (Pitcairn, 7870/7850), lowend has 1:16. Some really lowend things do not do FP64.

- High end R* 200 series (Hawaii, R9 290/290X) has 1:8, midrange has 1:4 (Tahiti, R9 280/280X) or 1:16 (Tonga, R9 285/285X) and lowend has 1:16. Some really lowend things do not do FP64.

- Fiji (Fury/FuryX) has 1:16

- RX400/500 has 1:16

- Vega10 (Vega56/Vega64) has 1:16

- Vega20 (Radeon VII) has 1:4

FP64 situation on the NVidia side looks like this:

- GTX200 series high end (GTX280/260) has 1:8.

- GTX400 series (Fermi) high end (GTX480/470) has 1:8, midrange and lowend (GTX460/450/440/430) has 1:12 and lowest end does not do FP64.

- GTX600 series (Kepler) has 1:24, except Titans at 1:3 and some lowend cards that are Fermi and have 1:12.

- GTX900 series (Maxwell) has 1:32.

- GTX1000 series (Pascal) has 1:32.

- Volta (Titan V) has 1:2.

- RTX2000/GTX1600 series (Turing) has 1:32.
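To make the ratios in the two lists above concrete, here is a small sketch that turns an FP64:FP32 ratio into an implied peak double-precision rate. The helper name `fp64_rate` and the 10 TFLOPS figure are assumptions chosen purely for illustration; they are not the specification of any card mentioned here.

```python
def fp64_rate(fp32_tflops, ratio):
    """Peak FP64 rate implied by an FP32 rate and a ratio string like '1:16'."""
    numerator, denominator = (int(part) for part in ratio.split(":"))
    return fp32_tflops * numerator / denominator


# Hypothetical FP32 throughput, not taken from any real product.
HYPOTHETICAL_FP32_TFLOPS = 10.0

for ratio in ("1:2", "1:4", "1:16", "1:32"):
    rate = fp64_rate(HYPOTHETICAL_FP32_TFLOPS, ratio)
    print(f"{ratio:>5} -> {rate} TFLOPS FP64")
```

With the same FP32 throughput, a 1:4 part would offer eight times the double-precision rate of a 1:32 part, which is why the ratio matters for compute workloads and hardly at all for games.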

However, that's Nvidia cutting back on double precision computing power, which is really different from what RTX and tensor cores do.

And @londiste collected the data.

Over the last 10 years Nvidia moved from 1:8 / 1:12 to 1:32.

In the same period AMD moved from 1:4 / 1:5 to 1:16.

So it does seem that they both "removed compute". Do you agree?

So, it's not quite the same animal. Making desktop chips requires constant improvements, whereas you make a decent chip for a console and it's expected to last 5 years or more. AMD already has Navi in the bag, primarily for the PS5, and we'll see that too on desktops, but it remains to be seen how well it will do, or what comes after that.

The thing about fp64 is that it's not normally used during rendering; in fact, fp32 is overkill for parts of the rendering in games. E.g. after rasterization, when processing fragments ("pixels"), only a tiny fraction of the precision of fp32 is actually used.
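A rough way to see this, assuming the fragment ends up in an 8-bit-per-channel render target (an assumption, since the comment does not name a format): round the same colour values through fp16 and fp32 and compare the resulting 8-bit levels. The helper names below are hypothetical.

```python
import struct


def to_fp16(x):
    """Round a Python float (fp64) to the nearest half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float (fp64) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_8bit(x):
    """Quantize a colour channel in [0, 1] to the 0..255 levels of an 8-bit target."""
    return round(x * 255)


# Evenly spaced colour values in [0, 1], computed in fp64 as the reference.
samples = [i / 1000 for i in range(1001)]

max_diff_fp32 = max(abs(to_8bit(to_fp32(v)) - to_8bit(v)) for v in samples)
max_diff_fp16 = max(abs(to_8bit(to_fp16(v)) - to_8bit(v)) for v in samples)

print("largest 8-bit level difference, fp32 vs fp64:", max_diff_fp32)
print("largest 8-bit level difference, fp16 vs fp64:", max_diff_fp16)
```

Even half precision stays within one output level of the fp64 reference for these samples, so the extra mantissa bits of fp32, let alone fp64, are lost once the value is written out at 8 bits per channel.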