Monday, February 18th 2019

AMD Radeon VII Retested With Latest Drivers

Just two weeks ago, AMD released their Radeon VII flagship graphics card. It is based on the new Vega 20 GPU, which is the world's first graphics processor built using a 7 nanometer production process. Priced at $699, the new card offers performance levels 20% higher than Radeon RX Vega 64, which should bring it much closer to NVIDIA's GeForce RTX 2080. In our testing we still saw a 14% performance deficit compared to RTX 2080. For the launch-day reviews AMD provided media outlets with a press driver dated January 22, 2019, which we used for our review.

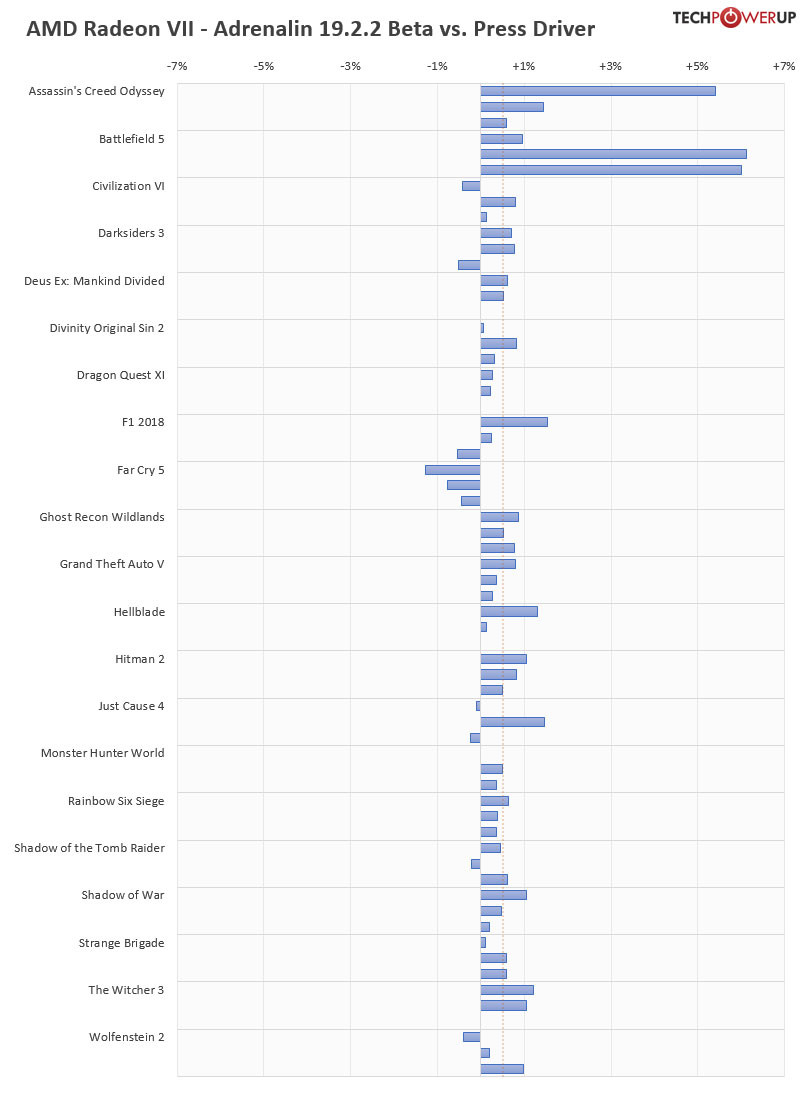

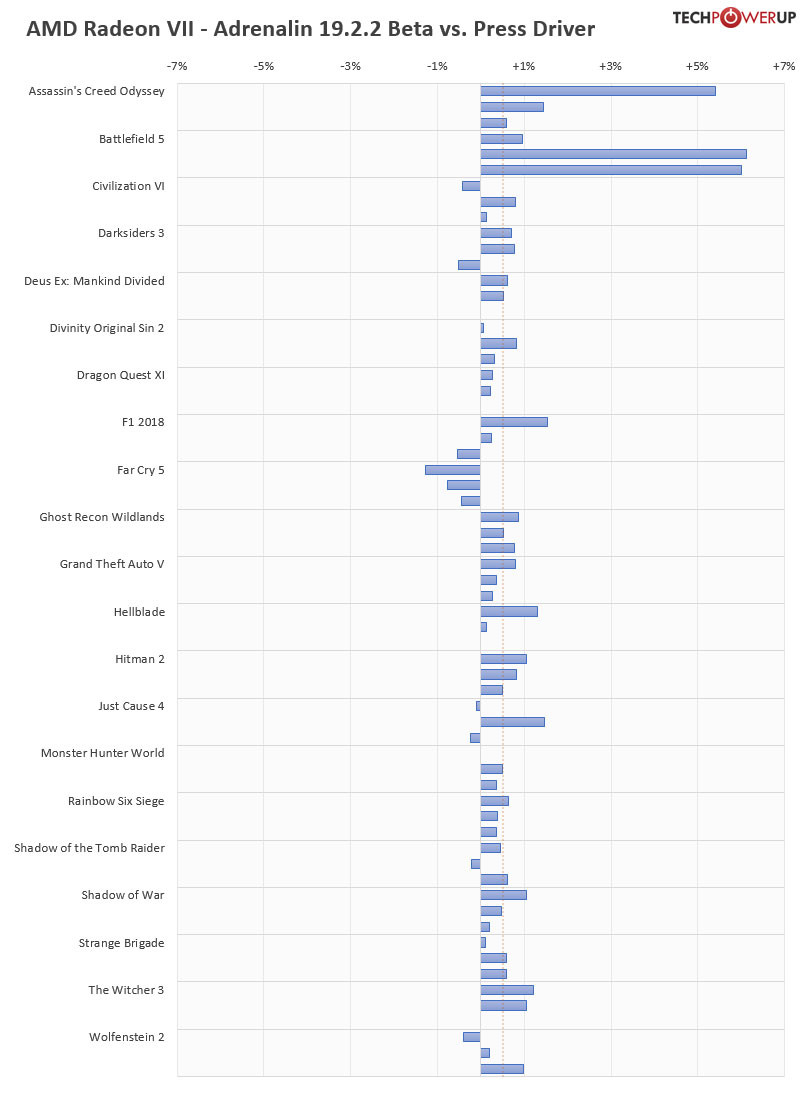

Since the first reviews went up, people in online communities have been speculating that these were early drivers and that new drivers will significantly boost the performance of Radeon VII, to make up lost ground against RTX 2080. There's also the mythical "fine wine" phenomenon, where the performance of Radeon GPUs significantly improves over time, incrementally. We've put these theories to the test by retesting Radeon VII using AMD's latest Adrenalin 2019 19.2.2 drivers, using our full suite of graphics card benchmarks. In the chart below, we show the performance deltas compared to our original review. For each title, three resolutions are tested: 1920x1080, 2560x1440, and 3840x2160 (in that order).

Please note that these results include performance gained by the washer mod and thermal paste change that we had to do when reassembling the card. These changes reduced hotspot temperatures by around 10°C, allowing the card to boost a little bit higher. To separate the performance improvements due to the new driver from those due to the thermal changes, we first retested the card using the original press driver (with washer mod and new TIM). The result was a 0.2% performance improvement.

Using the latest 19.2.2 drivers added +0.45% on top of that, for a total improvement of +0.653%. Taking a closer look at the results, we can see that two specific titles have seen significant gains due to the new driver version: Assassin's Creed Odyssey and Battlefield V both achieve several-percent improvements. It looks like AMD has worked some magic in those games to unlock extra performance. The remaining titles see small but statistically significant gains, suggesting that there are some "global" tweaks that AMD can implement to improve performance across the board. Unsurprisingly, these gains are smaller than those from title-specific optimizations.
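As a quick sanity check on how the two gains combine, the sketch below assumes that the thermal and driver improvements compound multiplicatively rather than simply add. The function name `compound_gain` is hypothetical, and the inputs are the rounded percentages quoted above, so the result lands near, not exactly on, the reported total.

```python
def compound_gain(*gains_percent: float) -> float:
    """Combine successive percentage gains multiplicatively.

    Each gain is applied on top of the previous result, so
    +0.2% followed by +0.45% is 1.002 * 1.0045, not 0.2 + 0.45.
    """
    factor = 1.0
    for gain in gains_percent:
        factor *= 1.0 + gain / 100.0
    return (factor - 1.0) * 100.0


# Rounded figures from the text: thermal changes, then the 19.2.2 driver.
thermal_gain = 0.2
driver_gain = 0.45

total = compound_gain(thermal_gain, driver_gain)
print(f"Combined gain: {total:.3f}%")
```

With these rounded inputs the combined gain comes out at roughly 0.651%, slightly below the 0.653% quoted; the gap is presumably due to rounding in the published per-step figures.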

Looking further ahead, it seems plausible that AMD can increase performance of Radeon VII down the road, even though we have doubts that enough optimizations can be discovered to match RTX 2080. That might change if a lot of developers suddenly jump on the DirectX 12 bandwagon, which seems unlikely. It's also a question of resources: AMD can't waste time and money to micro-optimize every single title out there. Rather, the company seems to be doing the right thing: investing in optimizations for big, popular titles like Battlefield V and Assassin's Creed. Given how many new titles are coming out using Unreal Engine 4, and how much AMD is lagging behind in those titles, I'd focus on optimizations for UE4 next.

182 Comments on AMD Radeon VII Retested With Latest Drivers

Why is this turning into an Nvidia driver performance slowchat? That horse is dead, buried and probably cast into the ocean by now. Let it go unless you have actual data and if you do, open a nice little topic for it. Man...

I undervolted my custom watercooled Vega 64 before with nice performance boosts.

With a 1085mV undervolt and a 2.5% frequency overclock, the Vega 64 ran for the past 1.5 years at frequencies in the range of 1670MHz, with a max temperature of 42°C, while consuming around 30W less than at reference settings.

I have undervolted my Radeon VII to 1030mV @1082MHz.

In the two main games that I play at the moment, BF V and Black Ops 4, the average power consumption on my Radeon VII is around 250W with this setting.

Waiting for waterblocks to be available to start fine-tuning the Radeon VII like I did the Vega 64.

The reference cooler is the limiting factor at the moment. :oops:

I have the same watercooling setup that was used for cooling the Vega 64 and Ryzen 2700X.

The only thing missing is a waterblock for the Radeon VII.

Buy a Radeon 7 dude. Then test performance along the way for new driver releases. We will all shut up if you give us hard numbers.

Plus you get to support AMD's GPU division. Two birds, one stone, right? I'm assuming you are not one of those hypocrites who are all talk but no action?

I mean, I wanted to give AMD a chance and I bought a 1700X. It's alright. It's a good direction for AMD. So much so, I'll buy a Ryzen 2, guaranteed when it releases. But the GFX dept at AMD... Not quite there yet. No matter how much some people suggest it is. I do think, that if Intel throws enough money at it, they might even overtake AMD, and that will be their death knell.

Well, I was young and stupid and let my emotions cloud my judgement. Not anymore tho...

GPUs, especially the high-end versions, are all overpriced, period.

Thanks a lot for the effort to retest. :toast:

As a Radeon VII owner, I was not expecting any large performance increase between 19.2.1 and 19.2.2. I believe the time frame between the two is too short.

It did, though, manage to fix a few issues with Wattman and overclocking.

What I personally noticed is that Black Ops 4 is running better. With 19.2.1 on my PC the frame rate was changing very fast between 70 FPS and 120 FPS with every movement in game, and the GPU clock had a lot of dips. With the new driver it mostly stays above 100 FPS, close to 120 FPS, and the GPU clock is relatively stable. I have a BenQ Zowie XL2730 FreeSync 144Hz monitor.

i follow all this stuff because it interests me.. but it's all being spoiled by too much negativity.. too much entitlement infringement.. too much silly red green bickering..

huge lengthy threads about f-ck all...

trog

I mean: we've been hearing about FineWine for years and it doesn't look like this will ever stop. There are, as you said, "examples" or "traces" that something could be going on, but that's a bit like a proof of the existence of ether or gods: we've seen some weird stuff going on, so let's assume there's a reason and let's give it a catchy name.

We had a nice discussion about quality of journalism in another topic. Seriously, wouldn't you like to see a proper experiment for this theory?

It's not that hard either. You take a few cards from different generations, 20-30 driver editions released over 5 years, and a few AAA games, and you measure all data points.

Instead, we have hundreds of thousands of people on thousands of forums talking about a phenomenon that no one has ever confirmed in a controlled environment. And it's been going on for years. And it will keep going for as long as AMD keeps making GPUs.

Why haven't any of the large tech sites done this? Are they afraid of the result and an angry mob from either side?

Why hasn't AMD done this?