Monday, March 4th 2019

AMD Patents Variable Rate Shading Technique for Console, VR Performance Domination

While developers have become more and more focused on taking advantage of the PC platform's performance and graphics technology advantages over consoles, the truth remains that games are optimized for the lowest common denominator first. Consoles also offer a much more user-friendly approach to gaming - there's mostly no need for hardware upgrades or software configuration - it's a sit-on-the-couch-and-leave-it affair, which can't really be said for gaming PCs. And the console market, with its need for cheap hardware that can still fill a 4K screen, is the most important playground for companies to thrive in. Enter AMD, with its almost 100% stake in the console market, and Variable Rate Shading.

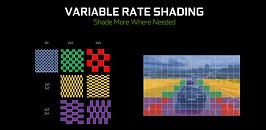

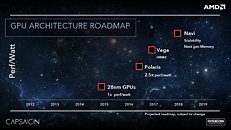

As we've seen with NVIDIA's Turing implementation of Variable Rate Shading, this performance-enhancing technique works in two ways: motion adaptive shading and content adaptive shading. Motion adaptive shading takes input from previous frames to work out which pixels are moving fast across the screen, such as in a racing game - fast-moving detail doesn't stay in focus long enough for us to discern a relative loss in shading detail, whilst stationary objects, such as the hypercar you're driving, are rendered in all their glory. Valuable compute time can be gained by shading a coarse approximation of those pixels and upscaling it as needed, according to how fast they are moving across the frame. Content adaptive shading, on the other hand, analyzes detail across a scene and saves frame time by reducing shading work in regions whose colors and detail changed little over the previous frames.

This particular technology is one that makes all the sense for AMD to implement in its architecture, because these are sure ways of gaining performance and improving frame times with minimal compromises in image quality. This is particularly important in the svelte GPU world of consoles - yes, performance is extracted much closer to the metal, but remember, an Xbox One X is currently rendering full 4K games with a GPU that's much closer in performance to an RX 580 than to an RTX 2070 - a graphics card that can't run Anthem at the same 4K Medium settings that the Xbox console can (without RTX).

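
To make the two modes described above more concrete, here is a minimal Python sketch of how a per-tile shading rate could be picked. It is an illustration only, not AMD's patented method or NVIDIA's implementation: the `Tile` type, the thresholds and the 16x16 tile size are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
MOTION_FAST = 16.0    # pixels per frame: tile counts as fast-moving
MOTION_SLOW = 4.0     # pixels per frame: tile counts as moderately moving
CONTRAST_LOW = 0.02   # luminance range below which a tile counts as flat


@dataclass
class Tile:
    motion: float      # average screen-space speed, in pixels per frame
    luminance: list    # luminance samples in [0, 1] from the previous frame


def motion_rate(tile):
    """Motion adaptive: the faster a tile moves, the coarser it is shaded."""
    if tile.motion >= MOTION_FAST:
        return 4       # one shaded sample per 4x4 pixel block
    if tile.motion >= MOTION_SLOW:
        return 2       # one shaded sample per 2x2 pixel block
    return 1           # full rate: one shaded sample per pixel


def content_rate(tile):
    """Content adaptive: flat, low-contrast tiles are shaded more coarsely."""
    contrast = max(tile.luminance) - min(tile.luminance)
    return 2 if contrast < CONTRAST_LOW else 1


def shading_rate(tile):
    # Use the coarser of the two suggestions for this tile.
    return max(motion_rate(tile), content_rate(tile))


def shader_invocations(tiles, tile_size=16):
    """Count shaded samples for a list of square tiles."""
    return sum((tile_size // shading_rate(t)) ** 2 for t in tiles)


car = Tile(motion=0.0, luminance=[0.1, 0.9])      # stationary, detailed
road = Tile(motion=20.0, luminance=[0.3, 0.5])    # streaking past the camera
sky = Tile(motion=1.0, luminance=[0.700, 0.705])  # slow, almost uniform

tiles = [car, road, sky]
full_rate = len(tiles) * 16 * 16
print(shader_invocations(tiles), "shaded samples instead of", full_rate)
```

In this toy scene the car keeps full-rate shading while the road and sky tiles are shaded at a fraction of the cost, which is where the frame time savings would come from.
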
NVIDIA themselves have said that in certain scenarios, the GTX 1660 Ti delivers 1.5x higher frame rates than the GTX 1060 - solely due to the application of VRS.

Not only can this tech let consoles achieve much higher pixel density, it can also democratize performance for VR, where higher frames per second are necessary to offset some side effects of that kind of gaming - and which Sony seems to be betting on as an evolutionary focus in the years to come. It remains to be seen whether or not AMD is able to implement this tech for Navi, but the upside is too great, and the patent too timely, for it not to be deployed - at least in AMD's custom silicon for the next generation of consoles.

Sources:

AMD Variable Rate Shading Patent, PCGamesN

39 Comments on AMD Patents Variable Rate Shading Technique for Console, VR Performance Domination

Xbox One X is very rarely rendering full 4K games. The same games usually run at similar performance on an RX580 on the PC side of things.

Anthem is running at medium-ish settings on Xbox One X, which an RX580 can handle just fine at 4K and 30FPS.

GTX 1660Ti delivering 1.5x higher frame rates compared to GTX 1060 has little to do with VRS and is pure marketing bullshit. Well, maybe a little - it is about 45% faster by itself and then VRS adds that 5% on top :D

What I am hoping though, is that patents don't come into play too hard. AMD and Nvidia have been in a cold war type standoff for decades when it comes to patents - either one starting to litigate on patents is pretty close to MAD :(

Also, kinda slightly off topic but does anyone know if Polaris-based cards are using FP16 in Wolf 2 and Far Cry 5 (for water physics) instead of FP32 when not needed?

Wolfenstein's Content Adaptive Shading has been tested by a number of sites. I like TechReport's and Digital Foundry's technically pretty good takes on it:

techreport.com/review/34269/testing-turing-content-adaptive-shading-with-wolfenstein-ii-the-new-colossus

The FP16 cores you have in mind are specialized FP32 cores that can do 2:1 FP16 as they were in Big Pascal, Volta and now in Turing. Pascal indeed had a very small amount of these. Anandtech covered these pretty well, I think: www.anandtech.com/show/10325/the-nvidia-geforce-gtx-1080-and-1070-founders-edition-review/5

So Maxwell and Kepler can do it (but with no benefit, as it is still using FP32 circuitry) but Pascal, it appears, cannot. Unless I am being blind. Which may be the case. >_<

I am pretty sure both GP100 and GP104 can promote FP16 to FP32 and run it as such. Regressing a feature like that makes no sense, and in all the FP16 benchmark comparisons (mostly ML, including some with GP100) FP16 vs FP32 works at an expected level - slower than FP32 but not by much. Anandtech's story focuses on new features (native FP16, basically RPM) for which support in GP104 is minimal.

Edit:

Actually, is it even possible to deny promoting FP16 to FP32 in a GPU? If you deny it in hardware/API/drivers somehow, you can still write shaders to run FP32 code that only has FP16 data.
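
The point about running FP32 code on FP16 data can be illustrated with a small CPU-side Python emulation. This is only a sketch under stated assumptions: `to_fp16`, `to_fp32`, `dot_promoted` and `dot_native_fp16` are hypothetical helpers written for this example, rounding is emulated through the standard `struct` module, and nothing here is shader code or a model of any particular GPU.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot_promoted(a, b):
    """Inputs stored as FP16, but multiply and accumulate rounded to FP32."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp32(to_fp16(x) * to_fp16(y))
        acc = to_fp32(acc + product)
    return acc


def dot_native_fp16(a, b):
    """Inputs, products and the accumulator are all rounded to FP16."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(to_fp16(x) * to_fp16(y))
        acc = to_fp16(acc + product)
    return acc


a = [0.1] * 4096
b = [1.0] * 4096

promoted = dot_promoted(a, b)
native = dot_native_fp16(a, b)
print("FP16 data, FP32 math:", promoted)
print("FP16 data, FP16 math:", native)
```

With FP32 arithmetic the only loss is the initial rounding of the inputs to FP16. With an FP16 accumulator the running sum eventually grows so large that each new term is rounded away, so the result stalls well short of the promoted one.
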

Also, an RX580 only runs Anthem at 4K 30fps with the LOWest settings. Not medium, as you can see in any benchmark.

Can you find any benchmark where Anthem is tested on 2160p medium or low?

Googling gives me only this for now: www.gpucheck.com/compare-game-gpu/anthem/amd-radeon-rx-580-vs-amd-radeon-rx-570/intel-core-i7-7700k-4-20ghz-vs-intel-core-i7-7700k-4-20ghz

17.5 at Ultra sounds about right but the rest of the numbers are off.

Most reviews on different sites put RX580 average at 2160p Ultra around or just below 20 FPS. There is a noticeable performance boost from going from High to Medium. Xbox One X also has some slowdowns so it does not quite reach locked 30 FPS.

Curious question: what sort of visual degradation would be seen using just FP16 for shading?
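
One rough way to get a feel for this is to look at how coarsely FP16 can represent different kinds of values. The Python sketch below is not a measurement of any game or GPU; it only uses the standard `struct` module to round values to IEEE 754 half precision, and the sample values (8-bit colour levels, a large coordinate) are assumptions picked for illustration.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def fp16_error(x):
    """Absolute error introduced by storing x as FP16."""
    return abs(to_fp16(x) - x)


# Colour-like values in [0, 1]: compare the worst FP16 rounding error
# against half of one 8-bit display step.
worst_colour_error = max(fp16_error(level / 255) for level in range(256))
half_display_step = 0.5 / 255
print("worst colour error:", worst_colour_error)
print("half an 8-bit step:", half_display_step)

# Large values, such as a position or texture coordinate in the thousands:
# FP16 can no longer represent every integer at this magnitude.
coordinate = 4097.0
print("4097.0 stored as FP16:", to_fp16(coordinate))
```

For values in the 0 to 1 range the rounding error stays below what an 8-bit display can show, while large magnitudes lose whole units of precision. That suggests any visible degradation would depend heavily on which shader values are kept in FP16.
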

www.techpowerup.com/reviews/AMD/Radeon_Vega_GPU_Architecture/

GCN is too old to be relevant these days. Not suited for gaming or scientific computing. It needs to die.

but I have a new proposition for you :)

nobody wants to try carbon nanotubes and such because nobody knows what it means to go there, right?

well, how about nobody takes the risk alone: what if each silicon fabrication corporation puts equal or profit-relative money into a joint account and they use that money together to find out? each corporation would provide the people who would be working on it and call it a shared venture. nobody loses money, nobody is a guinea pig for anyone else, everyone benefits and everyone gets important answers to critical questions: how much it costs, what materials are required, what the yields would be like, how much time it takes, how fast it can be....

if nobody wants to take the risk with their own money to find out, then don't. when news like this comes out, you know you are out of silicon and you have nowhere to go.... cutting corners will only go so far and will only give you so much (you tried it last time with brilinear filtering, so you know where it gets you). this is the time, or already past the time, to look elsewhere.

Hint: the reason we accept it, is because the pixel density is so high that we can't see the detail most of the time.

Conclusion: might just as well stick to 1080p at proper, actual detail settings as intended. No scaling problems, lower cost, higher FPS. Win Win Win in my book.