Thursday, April 11th 2019

NVIDIA Extends DirectX Raytracing (DXR) Support to Many GeForce GTX GPUs

NVIDIA today announced that it is extending DXR (DirectX Raytracing) support to several GeForce GTX graphics models beyond its GeForce RTX series. These include the GTX 1660 Ti, GTX 1660, GTX 1080 Ti, GTX 1080, GTX 1070 Ti, GTX 1070, and GTX 1060 6 GB. The GTX 1060 3 GB and lower "Pascal" models don't support DXR, nor do older generations of NVIDIA GPUs. NVIDIA has implemented real-time raytracing on GPUs without specialized components such as RT cores or tensor cores, by essentially implementing the rendering path through shaders, in this case, CUDA cores. DXR support will be added through a new GeForce graphics driver later today.

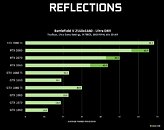

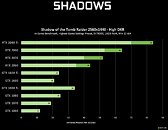

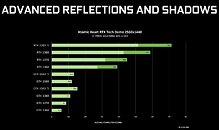

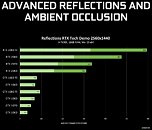

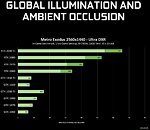

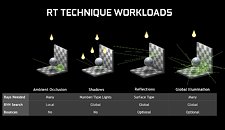

The GPU's CUDA cores now have to handle BVH (bounding volume hierarchy) traversal, intersection, reflection, and refraction calculations. The GTX 16-series chips have an edge over "Pascal" despite lacking RT cores, as the "Turing" CUDA cores support concurrent INT and FP execution, allowing more work to be done per clock. In a detailed presentation, NVIDIA listed the kinds of real-time ray-tracing effects available through the DXR API, namely reflections, shadows, advanced reflections and shadows, ambient occlusion, global illumination (unbaked), and combinations of these. The company put out detailed performance numbers for a selection of GTX 10-series and GTX 16-series GPUs, and compared them to RTX 20-series SKUs that have specialized hardware for DXR.

Update: Article updated with additional test data from NVIDIA.
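To give a sense of the per-ray arithmetic that falls to general-purpose shader cores when there is no dedicated hardware, here is a minimal sketch of a ray versus axis-aligned bounding box test, the kind of check repeated many times while walking a bounding volume hierarchy. It uses the textbook "slab" method and is written in plain Python for readability; it is not NVIDIA's driver code, and the function name `ray_hits_aabb` and its parameters are hypothetical.

```python
import math

def ray_hits_aabb(origin, direction, box_min, box_max):
    """Slab test: does a ray (origin + t * direction, t >= 0) hit the box?

    Illustrative only; names and layout are assumptions, not a real API.
    """
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        # Distances along the ray to the two planes of this axis.
        t0 = (lo - o) / d
        t1 = (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval in which the ray is inside all slabs so far.
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True

unit_box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
print(ray_hits_aabb((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box))  # True
print(ray_hits_aabb((0.0, 5.0, -5.0), (0.0, 0.0, 1.0), *unit_box))  # False
```

Every ray runs a test like this against many boxes before it reaches any triangle, which is why doing the work on shader cores adds up quickly.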

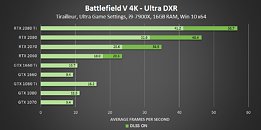

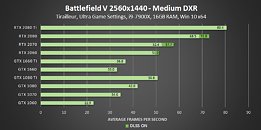

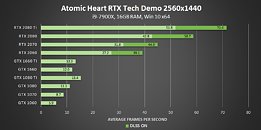

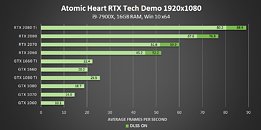

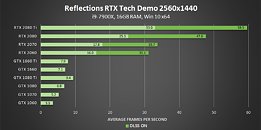

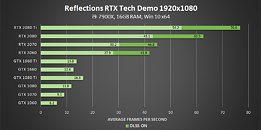

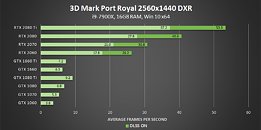

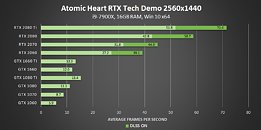

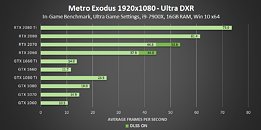

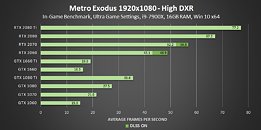

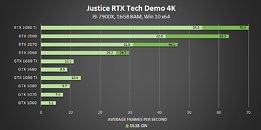

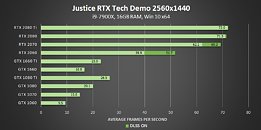

According to NVIDIA's numbers, GPUs without RTX are significantly slower than the RTX 20-series. No surprises here. But at 1440p, the resolution NVIDIA chose for these tests, you would need at least a GTX 1080 or GTX 1080 Ti for playable frame-rates (above 30 fps). This is especially true in the case of Battlefield V, in which only the GTX 1080 Ti manages 30 fps. The gap between the GTX 1080 Ti and GTX 1080 is vast, with the latter serving up only 25 fps. The GTX 1070 and GTX 1060 6 GB spit out really fast PowerPoint presentations, at under 20 fps.

It's important to note here that NVIDIA tested at the highest DXR settings for Battlefield V, and lowering the DXR Reflections quality could improve frame-rates, although we remain skeptical about the slower SKUs such as the GTX 1070 and GTX 1060 6 GB. The story repeats with Shadow of the Tomb Raider, which uses DXR shadows, although the frame-rates are marginally higher than in Battlefield V. You still need a GTX 1080 Ti for 34 fps.

Atomic Heart uses Advanced Reflections (reflections of reflections, and non-planar reflective surfaces). Unfortunately, no GeForce GTX card manages performance over 15.4 fps. The story repeats with 3DMark Port Royal, which uses both Advanced Reflections and DXR Shadows. Single-digit frame-rates for all GTX cards. The performance is better with the Justice tech-demo, although far from playable, as only the GTX 1080 and GTX 1080 Ti manage over 20 fps. Advanced Reflections and AO, in the case of the Star Wars RTX tech-demo, are another torture for these GPUs - single-digit frame-rates all over. Global Illumination with Metro Exodus is another slog for these chips.

Overall, NVIDIA has managed to script the perfect advertisement for the RTX 20-series. Real-time ray-tracing on compute shaders is horrendously slow, and it pays to have specialized hardware such as RT cores for it, while tensor cores accelerate DLSS to improve performance even further.

It remains to be seen if AMD takes a swing at DXR on GCN stream processors any time soon. The company has already had a technical effort underway for years under Radeon Rays, and is reportedly working on DXR.
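As a rough illustration of what those frame-rates mean per frame, the sketch below converts the handful of 1440p figures quoted in this article into frame times and checks them against the 30 fps mark. The helper name `frame_time_ms` and the choice to count exactly 30 fps as playable are assumptions made for the example.

```python
PLAYABLE_FPS = 30.0  # threshold used in this article; treating 30 itself as playable is an assumption

def frame_time_ms(fps):
    """Time spent on one frame, in milliseconds, at a given frame-rate."""
    return 1000.0 / fps

# Figures quoted in the article text (1440p, highest DXR settings).
quoted_results = {
    "Battlefield V, GTX 1080 Ti": 30.0,
    "Battlefield V, GTX 1080": 25.0,
    "Shadow of the Tomb Raider, GTX 1080 Ti": 34.0,
    "Atomic Heart, fastest GTX card": 15.4,
}

for label, fps in quoted_results.items():
    verdict = "playable" if fps >= PLAYABLE_FPS else "below 30 fps"
    print(f"{label}: {fps:.1f} fps = {frame_time_ms(fps):.1f} ms per frame ({verdict})")
```

At 30 fps a frame has a budget of about 33.3 ms; the 25 fps result already takes 40 ms per frame, and 15.4 fps works out to roughly 65 ms.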

Update:

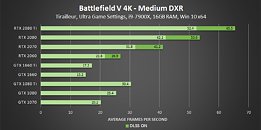

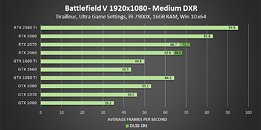

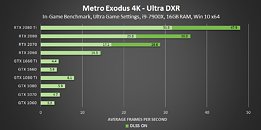

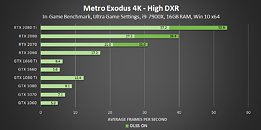

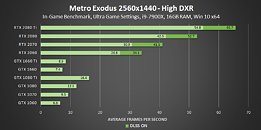

NVIDIA posted its test data for 4K and 1080p in addition to 1440p, and for medium through low DXR settings. The complete test data accompanies this update.

111 Comments on NVIDIA Extends DirectX Raytracing (DXR) Support to Many GeForce GTX GPUs

Not just 3 , right ?

Did FreeSync help selling more G-Sync monitors?

Well played Nvidia, well played.

Same case.

If AMD comes up with something, let's say "FreeRay", which runs on and is optimized for typical graphics cards instead of RTX cards, would that benefit RTX card sales?

Nope

DoA

pathetic

more stone to the castle of : "i wait next gen GPU's"

In the past I was able to use a weaker GTX960 in games that have PhysX (Fallout 4, Metro 2033) with the help of another GT740 dedicated to PhysX. During games, I could monitor the usage on both cards with GPU-Z and I saw it working nicely.

A similar approach for RTX would allow people like me, that already own a GTX1080, to get into using RTX by just adding another 1060 or 1660, dedicated to that.

I guess nvidia's intent was not to make the technology truly usable, but just to frustrate us and hopefully in this way make us buy the new RTX cards.

That's not gonna work and actually I think it will generate a backlash against RTX adoption by the game developers.

PCI Express 3.0's 8 GT/s bit rate effectively delivers 985 MB/s per lane. With two x16 slots... I think that is enough.

Or heck, if the latencies are too high, they could finally use the NVLink for something useful for a change, because SLI never scaled right... I'll get a 1070 if needed. They are so proud of that link...

PS: Or just cut to the cheese and, similar with how they released a Turing card without the RTX cores, release a card that has only those cores, like a co-processor, for us that want to upgrade without paying $1000.
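For reference, the 985 MB/s per-lane figure quoted in the comment above follows from PCI Express 3.0's 8 GT/s signalling rate and its 128b/130b line encoding. A minimal sketch of that arithmetic, with a hypothetical helper name and ignoring protocol overhead beyond the line encoding:

```python
def pcie3_lane_bandwidth_mb_s(transfer_rate_gt_s=8.0):
    """Approximate one-direction bandwidth of a single PCIe 3.0 lane in MB/s.

    Accounts only for 128b/130b encoding (128 payload bits per 130 bits sent);
    packet and protocol overhead are ignored in this sketch.
    """
    payload_bits_per_s = transfer_rate_gt_s * 1e9 * 128 / 130
    return payload_bits_per_s / 8 / 1e6  # bits -> bytes -> megabytes

per_lane = pcie3_lane_bandwidth_mb_s()
x16_gb_s = per_lane * 16 / 1000
print(f"{per_lane:.1f} MB/s per lane, {x16_gb_s:.2f} GB/s for an x16 slot")
# prints "984.6 MB/s per lane, 15.75 GB/s for an x16 slot"
```

This covers raw bandwidth only; whether the latency of shipping ray-tracing work between two cards would be acceptable is a separate question that the numbers here do not answer.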

If AMD could come up with their own solution to optimize DXR without dedicated "cores", RTX cards would become utterly pointless.

What it REALLY is for, is to allow developers that don't have RTX cards to get in and start developing for RTX and allow them to see their results before releasing the features to the masses in their games. Going from a very small install base of developers to a considerably large install base, means that you have more people developing for DXR and Vulkan Ray Tracing than you had before.

It has been 7 months, only 3 games supporting this "Just works" technology and none of them fully supports all DXR effects in a single game.

And again, this is not a discussion to have in a thread about RTX being sort of supported on Pascal cards.

This "RTX being sort of supported on Pascal cards" move has one purpose only : Try to convince ppl to "upgrade / side-grade" to RTX cards.

Then, what is the point to do this "upgrade / side-grade" when there are not even a handful of Ray-Tracing games out there ?

RTX is based on DXR, which is just one part of the DX12 API; RTX itself is not comparable to a fully featured DX10 / DX11 / DX12.

Compare it to Nvidia's last hardware-accelerated marketing gimmick a.k.a PhysX, tho.

It was the same thing again: hardware PhysX got 4 games back in 2008 and 7 in 2009, then hardware PhysX simply faded out and is now open-sourced.

Now we got 3 Ray Traced games in 7 months, sounds familiar ?