Thursday, April 11th 2019

NVIDIA Extends DirectX Raytracing (DXR) Support to Many GeForce GTX GPUs

NVIDIA today announced that it is extending DXR (DirectX Raytracing) support to several GeForce GTX graphics card models beyond its GeForce RTX series. These include the GTX 1660 Ti, GTX 1660, GTX 1080 Ti, GTX 1080, GTX 1070 Ti, GTX 1070, and GTX 1060 6 GB. The GTX 1060 3 GB and lower "Pascal" models don't support DXR, nor do older generations of NVIDIA GPUs. NVIDIA has implemented real-time raytracing on GPUs that lack specialized components such as RT cores and tensor cores by running the rendering path through shaders, in this case on the CUDA cores. DXR support will be added through a new GeForce graphics driver later today.

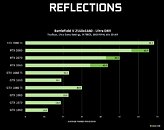

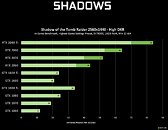

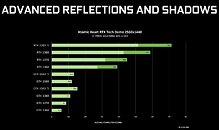

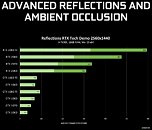

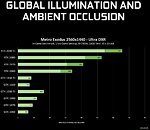

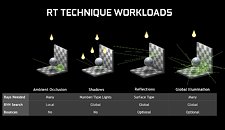

The GPU's CUDA cores now have to handle BVH (bounding volume hierarchy) traversal, intersection, reflection, and refraction. The GTX 16-series chips have an edge over "Pascal" despite lacking RT cores, as the "Turing" CUDA cores support concurrent INT and FP execution, allowing more work to be done per clock. In a detailed presentation, NVIDIA listed the kinds of real-time ray-tracing effects available through the DXR API, namely reflections, shadows, advanced reflections and shadows, ambient occlusion, global illumination (unbaked), and combinations of these. The company put out detailed performance numbers for a selection of GTX 10-series and GTX 16-series GPUs, and compared them to RTX 20-series SKUs that have specialized hardware for DXR.

Update: Article updated with additional test data from NVIDIA.
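To give a feel for the per-ray work that lands on the CUDA cores, here is a plain-Python sketch of textbook versions of those steps: a slab test against one BVH node's axis-aligned bounding box, the Möller-Trumbore ray-triangle intersection test, and mirror reflection plus Snell's-law refraction of the ray direction. This is an illustration under our own assumptions, not NVIDIA's driver code; all function names are hypothetical, and a real implementation runs millions of these tests per frame in parallel.

```python
import math

# Minimal 3-component vector helpers (plain tuples keep the sketch dependency-free).
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_hits_aabb(origin, direction, box_min, box_max):
    """Slab test: does the ray (t >= 0) pass through an axis-aligned box, e.g. a BVH node?"""
    t_near, t_far = 0.0, math.inf
    for axis in range(3):
        d = direction[axis]
        if abs(d) < 1e-12:
            # Ray parallel to this pair of slabs: miss unless the origin lies between them.
            if not (box_min[axis] <= origin[axis] <= box_max[axis]):
                return False
            continue
        t0 = (box_min[axis] - origin[axis]) / d
        t1 = (box_max[axis] - origin[axis]) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True

def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore test: return the hit distance t along the ray, or None on a miss."""
    e1 = sub(v1, v0)
    e2 = sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin

def reflect(d, n):
    """Mirror direction d about unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * dot(d, n)
    return (d[0] - k * n[0], d[1] - k * n[1], d[2] - k * n[2])

def refract(d, n, eta):
    """Snell's law for unit d and unit n facing the incoming ray (eta = n1 / n2).

    Returns None on total internal reflection.
    """
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    k = eta * cos_i - cos_t
    return tuple(eta * d[i] + k * n[i] for i in range(3))
```

For example, a ray fired from (0.25, 0.25, -1) along +z first passes the box test for a node spanning (0, 0, 0) to (1, 1, 1), then hits the triangle (0, 0, 0), (1, 0, 0), (0, 1, 0) at t = 1. Traversal culls whole subtrees whose boxes the ray misses, which is why BVH traversal and triangle tests dominate the cost; on RTX cards this is the work RT cores take off the shader cores.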

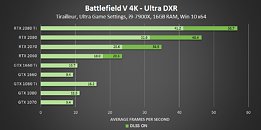

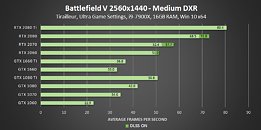

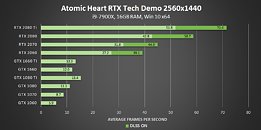

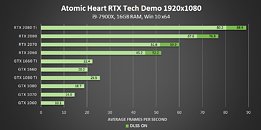

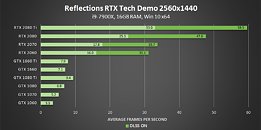

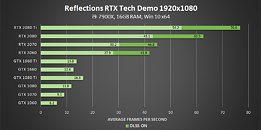

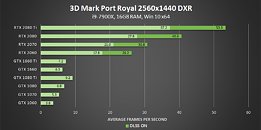

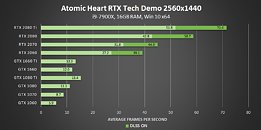

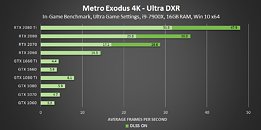

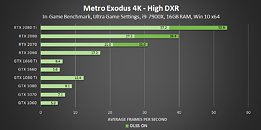

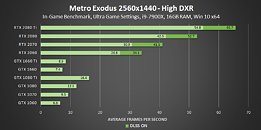

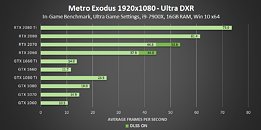

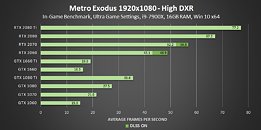

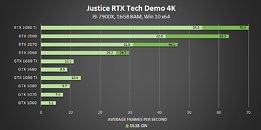

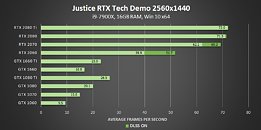

According to NVIDIA's numbers, GPUs without RTX are significantly slower than the RTX 20-series. No surprises here. But at 1440p, the resolution NVIDIA chose for these tests, you would need at least a GTX 1080 or GTX 1080 Ti for playable frame-rates (above 30 fps). This is especially true in the case of Battlefield V, in which only the GTX 1080 Ti manages 30 fps. The gap between the GTX 1080 Ti and GTX 1080 is vast, with the latter serving up only 25 fps. The GTX 1070 and GTX 1060 6 GB spit out really fast PowerPoint presentations, at under 20 fps.

It's important to note that NVIDIA tested Battlefield V at its highest DXR settings, and lowering the DXR Reflections quality could improve frame-rates, although we remain skeptical about slower SKUs such as the GTX 1070 and GTX 1060 6 GB. The story repeats with Shadow of the Tomb Raider, which uses DXR shadows, albeit with marginally higher frame-rates than Battlefield V. You still need a GTX 1080 Ti for 34 fps.

Atomic Heart uses Advanced Reflections (reflections of reflections, and non-planar reflective surfaces). Unfortunately, no GeForce GTX card manages more than 15.4 fps. The story repeats with 3DMark Port Royal, which uses both Advanced Reflections and DXR Shadows: single-digit frame-rates for all GTX cards. Performance is better in the Justice tech demo, although still far from playable, as only the GTX 1080 and GTX 1080 Ti manage over 20 fps. Advanced Reflections and AO, in the case of the Star Wars RTX tech demo, are another torture for these GPUs, with single-digit frame-rates all over. Global Illumination in Metro Exodus is another slog for these chips.

Overall, NVIDIA has managed to script the perfect advertisement for the RTX 20-series.
Real-time ray-tracing on compute shaders is horrendously slow, and it pays to have specialized hardware such as RT cores for it, while tensor cores accelerate DLSS to improve performance even further.

It remains to be seen if AMD takes a swing at DXR on GCN stream processors any time soon. The company has already had a technical effort underway for years under Radeon Rays, and is reportedly working on DXR.
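Frame rates translate directly into a per-frame time budget, which can make the Battlefield V gap between the GTX 1080 Ti and GTX 1080 easier to picture. A small sketch of the arithmetic, using only the two fps figures quoted above (function names are our own):

```python
def frame_time_ms(fps):
    """Milliseconds available to render each frame at a given frame rate."""
    return 1000.0 / fps

def percent_faster(fps_a, fps_b):
    """How much higher fps_a is than fps_b, in percent."""
    return (fps_a / fps_b - 1.0) * 100.0

# Battlefield V at 1440p, highest DXR settings, as quoted in the article.
results = {"GTX 1080 Ti": 30.0, "GTX 1080": 25.0}

for card, fps in results.items():
    print(f"{card}: {fps:.0f} fps = {frame_time_ms(fps):.1f} ms per frame")

lead = percent_faster(results["GTX 1080 Ti"], results["GTX 1080"])
print(f"GTX 1080 Ti lead: {lead:.0f}%")
```

This prints 33.3 ms per frame for the GTX 1080 Ti, 40.0 ms for the GTX 1080, and a 20% lead. By the same arithmetic, the "under 20 fps" results for the GTX 1070 and GTX 1060 6 GB mean each frame takes more than 50 ms.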

Update:

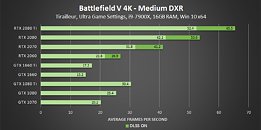

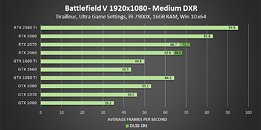

NVIDIA posted its test data for 4K and 1080p in addition to 1440p, as well as for DXR settings from medium down to low. NVIDIA's complete test data is included in the charts.
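As a rough first-order guide to the new resolutions, the ray-tracing workload grows with pixel count if the number of rays per pixel stays fixed. That is an assumption: games can trace fewer rays than pixels and rely on denoising, and frame rate does not scale perfectly with pixel count. A minimal sketch of the pixel arithmetic (names are our own):

```python
# Standard pixel dimensions for the three resolutions NVIDIA tested.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

def pixel_count(name):
    width, height = RESOLUTIONS[name]
    return width * height

def relative_to(name, reference="1440p"):
    """Pixel count (and, assuming fixed rays per pixel, ray count) relative to a reference."""
    return pixel_count(name) / pixel_count(reference)

for name in RESOLUTIONS:
    print(f"{name}: {pixel_count(name):,} pixels, {relative_to(name):.2f}x the 1440p load")
```

4K pushes 2.25 times as many pixels as 1440p (and four times as many as 1080p), while 1080p needs 0.5625 times the pixels of 1440p.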


111 Comments on NVIDIA Extends DirectX Raytracing (DXR) Support to Many GeForce GTX GPUs

It makes more sense to me that AMD would develop a raytracing ASIC which operates as a co-processor on the GPU, not unlike the Video Coding Engine and Unified Video Decoder. It would pull meshes and illumination information from the main memory, bounce its rays, and then copy back the updated meshes to be anti-aliased.

All that information is generally in the VRAM. A raytracing ASIC would be more cache than anything else.

And we knew the outcome.

You are asking AMD to do something their chips literally do not have the facilities to do. At best, they can achieve something akin to emulation.

It simply won't happen, because short of new hardware, it can't happen.

That's because all the RT cores do is the magic denoising.

Back when AMD first announced FreeSync, people speculated whether it was possible without the expensive FPGA module embedded inside the monitor.

Turns out it is.

Nvidia themselves have shown you that RTRT is possible without ASIC acceleration.

The Pascal architecture wasn't built with RTRT in mind, yet it can run RTRT with decent results.

It is quite impressive that in a ray-traced game a 1080 Ti can reach 70% of the fps of a 2060, with the very first driver that enables Pascal to do this (so optimization is still poor).

RT cores are dead weight in conventional rasterization, but CUDA cores can do both.

Same goes to AMD.

ASIC for RTRT will soon be obsolete, just like the ASIC for PhysX.

To my knowledge, Nvidia has never said, outside of OptiX AI, that the denoiser is accelerated. It always refers to them as "Fast".

Denoisers are Filters and will vary as such.

Turing in particular needs FP32 and FP16 instructions issued simultaneously in each warp, or large swaths of transistors end up idling:

www.anandtech.com/show/13973/nvidia-gtx-1660-ti-review-feat-evga-xc-gaming/2
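The article credits the "Turing" CUDA cores' concurrent INT and FP execution for the GTX 16-series' edge over "Pascal", and the comment above argues that extra execution units sit idle unless the instruction mix keeps them busy. A deliberately idealized toy model shows the principle: one instruction per pipe per cycle, no dependencies, latencies, or issue limits. It is not a model of real Turing scheduling, the instruction mixes are hypothetical, and the function names are our own.

```python
def cycles_shared_pipe(fp_ops, int_ops):
    """Idealized core with one pipe: FP and INT instructions take turns."""
    return fp_ops + int_ops

def cycles_split_pipes(fp_ops, int_ops):
    """Idealized core with separate FP and INT pipes issuing concurrently.

    The busier pipe sets the pace; the other idles once it runs out of work.
    """
    return max(fp_ops, int_ops)

def speedup(fp_ops, int_ops):
    return cycles_shared_pipe(fp_ops, int_ops) / cycles_split_pipes(fp_ops, int_ops)

# Hypothetical instruction mixes, not measured data.
for fp_ops, int_ops in [(100, 0), (100, 50), (100, 100)]:
    print(f"FP={fp_ops:3d} INT={int_ops:3d}: {speedup(fp_ops, int_ops):.2f}x")
```

With no INT work the second pipe contributes nothing (1.00x); a 2:1 mix gives 1.50x, and an even split gives the 2.00x ceiling of this model.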

Which game would you choose instead of Metro? and why?

The main problem is that it's so very abstract. Nvidia uses performance levels without a clear distinction for RTX On. Everything is fluid here; they will always balance the amount of RT around the end-user performance requirements, and the BF V patch was a good example of that. What really matters is the actual quality improvement and then the performance hit required to get there. This is not like saying 'MSAA x4 versus MSAA x8' or something like that. There is no linearity, no predictability, and the feature set can be used to any extent.

Please try to word your replies better. Or in case your only point was "Nvidia is trying to scam people out of their money" or "RTX will die because I don't like it as it is now", we've already had hundreds of posts about that.

For something that lasts 5 years, it's almost garbage. It's very rare in any industry to find something comparable that costs so much.

Extreme-quality audio equipment such as amplifiers, turntables, and speakers can be bought for 1K and lasts decades.

Compare, for example, a studio/club turntable from Technics, which has reached its 50th anniversary: an indestructible 15 kg monster with every single part replaceable can be bought for the price of an RTX2080. The same goes for extreme-quality speakers. It's pure insanity where the gaming industry has led us.

Turing was the turning point for me, the moment I figured out that from now on I will play games 2-3 years after their launch date and pay for only one platform per generation, not two with the same memory types and similar core architectures, because that's literally throwing money away.

You can buy 85 vinyls or 150 CDs on average for the price of a single custom-built RTX2080Ti. That's abnormal.

You can buy a Benelli M4 12ga Tactical Shotgun for the price of an RTX2080Ti... When you look at a little piece of plastic with a chip that will be outdated in 5 years, it's funny.

I set aside 700 euros and waited for the RTX2080Ti, with the option to add 100-150 euros more, even 200 for some premium models; I hoped for maybe even a K|NGP|N version.

I was not even close to buying a card 30% stronger than the GTX1080Ti. In the end I didn't have enough for even an RTX2080 and would have needed to add significant money.

The option was to buy a second-hand GTX1080Ti and spend the rest on a 1TB M.2 drive, but I will remember this GeForce architecture, as many of you will in the next generations, as the one that made me give up on brand-new cards. You can play nicely on a previous high-end model, second-hand with 1-2 years of warranty, for below $500.

I knew that at some point, when people become aware of how much they pay for Intel processors and NVIDIA graphics cards, they will change their approach, and then even a significant price drop will not

Now if NVIDIA dropped prices by $150, it would change nothing significant; they would sell more GPUs, but not as many as they expected.

PC gaming makes sense if you can afford a GTX1080, GTX1080Ti, RTX2080/2080Ti, or eventually an RTX2070.

But investing a few hundred in a lower-class GPU... better to buy a PS4.

We didn't test 'Hairworks games' or 'PhysX games' back when Nvidia pushed games with those technologies either. As it stands today, there is no practical difference apart from it being called 'DXR'. There was never a feature article for other proprietary tech such as 'Turf Effects' or HBAO+ either, so in that sense the articles we get are already doing the new technology (and its potential) justice. In reviews you want to highlight a setup that applies to all hardware.

It's a pretty fundamental question, really. If this turns out to be a dud, you will be seen as biased if you consistently push the RTX button. And if it becomes widely supported technology, the performance we see today has about zero bearing on it. The story changes when AMD or another competitor also brings a hardware solution for it.

Something to think about: this is why I feel focused articles that really lay out the visual and performance differences in detail are actually worth something, while another RTX bar in the bar chart most certainly is not.

The only caveat there, in my opinion, is perf/watt. The RT perf/watt of dedicated hardware is much stronger, and if you consider the TDP of the 2080 Ti, there isn't really enough headroom to do everything on CUDA.

Listen to the people.

Yes, "Nvidia is trying to scam people out of their money"

And yes, Nvidia RTX surely will die because of how badly they ruined it, but DXR lives on.

It was a cheap add-on feature that manufacturers producing upscaler chips included free of charge.

It took customer reluctance to pay 200+ premium per monitor, for nVidia branded version of it.

GSync branded notebooks didn't use it either, because, see above.

But once Gsync failed and FreeSync won, let's call the latter simply "Adaptive Sync", shall we? It downplays what AMD has accomplished and all.

Bringing ultra-competitive, low-margin automotive into this is beyond ridiculous.

"It took customer reluctance to pay 200+ premium per monitor" sounds like free really wasn't why.

A GSync module would not physically fit in a notebook. Besides, isn't using established standards exactly what is encouraged? :)

Wrong thread for this though.