Thursday, April 11th 2019

NVIDIA Extends DirectX Raytracing (DXR) Support to Many GeForce GTX GPUs

NVIDIA today announced that it is extending DXR (DirectX Raytracing) support to several GeForce GTX graphics models beyond its GeForce RTX series. These include the GTX 1660 Ti, GTX 1660, GTX 1080 Ti, GTX 1080, GTX 1070 Ti, GTX 1070, and GTX 1060 6 GB. The GTX 1060 3 GB and lower "Pascal" models don't support DXR, nor do older generations of NVIDIA GPUs. NVIDIA has implemented real-time raytracing on GPUs without specialized components such as RT cores or tensor cores, by essentially implementing the rendering path through shaders, in this case, CUDA cores. DXR support will be added through a new GeForce graphics driver later today.

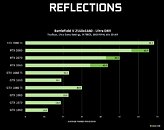

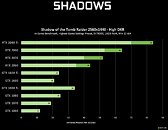

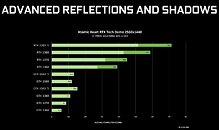

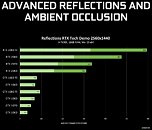

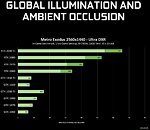

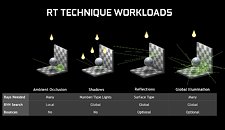

The GPU's CUDA cores now have to handle BVH (bounding volume hierarchy) traversal, ray intersection, reflection, and refraction. The GTX 16-series chips have an edge over "Pascal" despite lacking RT cores, as the "Turing" CUDA cores support concurrent INT and FP execution, allowing more work to be done per clock. In a detailed presentation, NVIDIA listed the kinds of real-time ray-tracing effects available through the DXR API, namely reflections, shadows, advanced reflections and shadows, ambient occlusion, global illumination (unbaked), and combinations of these. The company put out detailed performance numbers for a selection of GTX 10-series and GTX 16-series GPUs, and compared them to RTX 20-series SKUs that have specialized hardware for DXR.

Update: Article updated with additional test data from NVIDIA.
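To give a feel for the per-ray math that falls to general-purpose shader cores when no RT cores are present, here is a minimal sketch of a ray-triangle intersection test (the widely used Möller-Trumbore method) and a mirror-reflection helper. This is an illustration only, not NVIDIA's driver code; the function names `ray_triangle` and `reflect` are made up for this example.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(origin: Vec3, direction: Vec3,
                 v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-8) -> Optional[float]:
    """Return the distance t along the ray to the hit point, or None on a miss."""
    e1 = sub(v1, v0)
    e2 = sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:           # ray is parallel to the triangle plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det      # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None    # ignore hits behind the ray origin

def reflect(d: Vec3, n: Vec3) -> Vec3:
    """Mirror direction d about unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * dot(d, n)
    return (d[0] - k * n[0], d[1] - k * n[1], d[2] - k * n[2])

if __name__ == "__main__":
    tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    print(ray_triangle((0.25, 0.25, -1.0), (0.0, 0.0, 1.0), *tri))
    print(reflect((0.0, 0.0, 1.0), (0.0, 0.0, -1.0)))
```

A real-time renderer runs tests like this against many triangles for every ray, which is why doing it on the same cores that also shade the frame is so costly.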

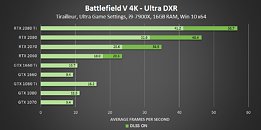

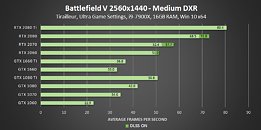

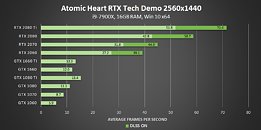

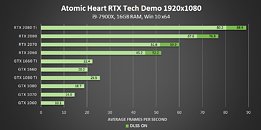

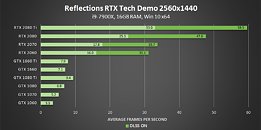

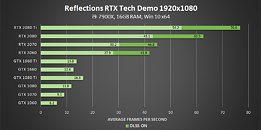

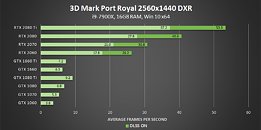

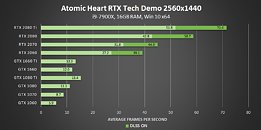

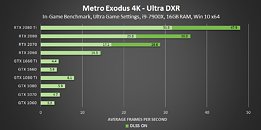

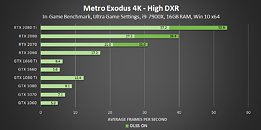

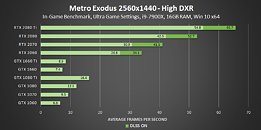

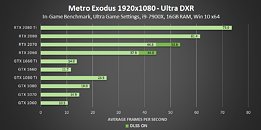

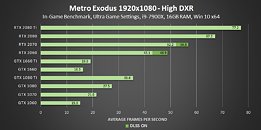

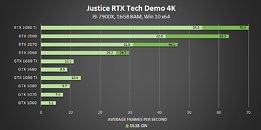

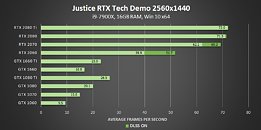

According to NVIDIA's numbers, GPUs without RTX are significantly slower than the RTX 20-series. No surprises here. But at 1440p, the resolution NVIDIA chose for these tests, you would need at least a GTX 1080 or GTX 1080 Ti for playable frame-rates (above 30 fps). This is especially true in case of Battlefield V, in which only the GTX 1080 Ti manages 30 fps. The gap between the GTX 1080 Ti and GTX 1080 is vast, with the latter serving up only 25 fps. The GTX 1070 and GTX 1060 6 GB spit out really fast PowerPoint presentations, at under 20 fps.

It's important to note here that NVIDIA tested at the highest DXR settings for Battlefield V, and lowering the DXR Reflections quality could improve frame-rates, although we remain skeptical about the slower SKUs such as GTX 1070 and GTX 1060 6 GB. The story repeats with Shadow of the Tomb Raider, which uses DXR shadows, although the frame-rates are marginally higher than in Battlefield V. You still need a GTX 1080 Ti for 34 fps.

Atomic Heart uses Advanced Reflections (reflections of reflections, and non-planar reflective surfaces). Unfortunately, no GeForce GTX card manages performance over 15.4 fps. The story repeats with 3DMark Port Royal, which uses both Advanced Reflections and DXR Shadows. Single-digit frame-rates for all GTX cards. The performance is better with the Justice tech-demo, although far from playable, as only the GTX 1080 and GTX 1080 Ti manage over 20 fps. Advanced Reflections and AO, in case of the Star Wars RTX tech-demo, is another torture for these GPUs - single-digit frame-rates all over. Global Illumination with Metro Exodus is another slog for these chips.

Overall, NVIDIA has managed to script the perfect advertisement for the RTX 20-series. Real-time ray-tracing on compute shaders is horrendously slow, and it pays to have specialized hardware such as RT cores for it, while tensor cores accelerate DLSS to improve performance even further.

It remains to be seen if AMD takes a swing at DXR on GCN stream processors any time soon. The company has already had a technical effort underway for years under Radeon Rays, and is reportedly working on DXR.
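The frame-rates quoted above are easier to compare as per-frame render times. The short sketch below does only that conversion (1000 ms divided by fps) for the figures cited in this article; the helper name `frame_time_ms` is made up for this example, and the labels are shorthand for the results mentioned in the text, not additional measurements.

```python
def frame_time_ms(fps: float) -> float:
    """Convert a frame-rate in frames per second to milliseconds per frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps

# Frame-rates quoted in the article text (NVIDIA's 1440p numbers).
quoted = {
    "30 fps playability threshold": 30.0,
    "GTX 1080 Ti, Shadow of the Tomb Raider": 34.0,
    "GTX 1080, Battlefield V": 25.0,
    "Best GTX result, Atomic Heart": 15.4,
}

if __name__ == "__main__":
    for label, fps in quoted.items():
        print(f"{label}: {fps:g} fps = {frame_time_ms(fps):.1f} ms per frame")
```

Seen this way, dropping from 30 fps to 25 fps means each frame takes several milliseconds longer than the playability budget allows.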

Update:

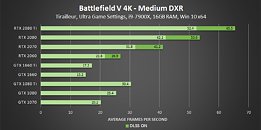

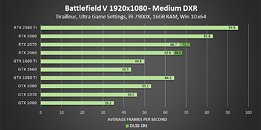

NVIDIA posted its test data for 4K and 1080p in addition to 1440p, and for medium through low DXR settings. The entire test data is posted below.

111 Comments on NVIDIA Extends DirectX Raytracing (DXR) Support to Many GeForce GTX GPUs

Polaris and down might experience the same problem GTX 1080 Ti does, but Vega (12.5 billion transistors) may be able to keep pace with RTX 2060 in DXR if they're able to get FP32 out of some of the cores and 2xFP16 out of other cores.

My two cents: it's too early to be benchmarking DXR because all results are going to be biased towards NVIDIA RTX cards by design. We really need to see AMD's response before it's worth testing. I mean, to benchmark now is just going to state the obvious (NVIDIA's product stack makes it clear which should perform better than the next).

At this point, I don't think benchmarking DXR/RTX is meant to compare Nvidia and AMD (the thought never crossed my mind till I read your post), but rather to give an idea about what you're getting if you're willing to foot the bill for an RTX-enabled Turing card. The fact that a sample size of one isn't representative of anything should be more than obvious.

It's more work for @W1zzard, but if he's going that route, he might as well pick a game that can do DX11/DX12/DXR, compare all three, and introduce the % lows. Give a bit more insight on the test scene run and duration.

The reason why benchmarks are important in DX11/DX12/Vulkan/OGL is because there are multiple, competing product stacks and there's no way to know which performs better unless it's tested. Until that is also true of DXR/VRT, I fail to see a point in it.

Also that. Not many games support RTX and the game review itself can differentiate the cards in terms of RTX performance. In a review of many cards on a variety of games, RTX really has no value because so few cards are even worth trying.

It can do INT32 and FP32 concurrently.

Give this a read and watch. Tony does a good job explaining the architecture and how it is suited to accelerate real time ray tracing.

www.nvidia.com/en-us/geforce/news/geforce-gtx-dxr-ray-tracing-available-now/

Keep in mind that all GPU architectures have INT32 units for addressing memory. The only thing unique about Turing is that they're directly addressable. What I find very interesting about that PNG you referenced is how the INT32 units aren't very tasked when the RT core is enabled but are when it is not. Obviously they're doing a lot of RT operations in INT32 which begs the question: is RT core really just a dense integer ASIC with intersection detection? Integer math explains the apparent performance boost from such a tiny part of the silicon. Also explains why Radeon Rays has much lower performance: it uses FP32 or FP16 (Vega) math. It also explains why RTX has such a bad noise problem: their rays are imprecise.

Considering all of this, it's impossible to know what approach AMD will take with DXR. NVIDIA is cutting so many corners and AMD has never been a fan of doing that. I think it's entirely possible AMD will just ramp up the FP16 capabilities and forego exposing the INT32 addressability. I don't know that they'll do it via tensor cores though. AMD has always been in favor of bringing sledge hammers to fistfights. Why? Because a crapload of FP16 units can do all sorts of things. Tensor cores and RT cores are fixed function.

- The same slide clearly shows INT32 units being intermittently tasked throughout the frame. RT is computation heavy and is fairly lenient on what type of compute is used, so INT32 cores are more effective than usual. Note that FP compute is also very heavy and consistent during the RT part of the frame.

- RT core is a dense specialized ASIC. According to Nvidia (and at least indirectly confirmed by devs and operations exposed in APIs) RT cores do ray triangle intersection and BVH traversal.

- RT is not only INT work, it involves both INT and FP. The share of each depends on a bunch of things, algorithm, which part of the RT is being done etc. RT Cores in Turing are more specialized than simply generic INT compute. That is actually very visible empirically from the same frame rendering comparison.

- Radeon Rays has selectable precision. FP16 is implemented for it because it has a very significant speed increase over FP32. In terms of RTRT (or otherwise quick RT), precision has little meaning when rays are sparse and are denoised anyway. The denoising algorithm along with ray placement play a much larger role here.

- As for AMD's approach, this is not easy to say. The short term solution would be Radeon Rays implemented for DXR. When and if AMD wants to come out with that is in question, but I suppose the answer is when it is inevitable. Today, AMD has no reason to get into this as DXR and RTRT are too new and have too few games/demos. This matches what they have said, along with the fact that AMD only has Vegas that are likely to be effective enough for it (RX5x0 lacks RPM - FP16). Long term - I am speculating here, but I am willing to bet that AMD will also do an implementation with specialized hardware.
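For readers unfamiliar with the BVH traversal mentioned in the points above: at each node of the hierarchy, the traversal checks whether the ray touches that node's bounding box before descending further. A minimal sketch of that box check, using the common "slab" method, is below. It is only an illustration of the idea, not how RT cores or Radeon Rays implement it, and the function name `ray_hits_aabb` is made up for this example.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def ray_hits_aabb(origin: Vec3, direction: Vec3,
                  box_min: Vec3, box_max: Vec3) -> bool:
    """Slab test: True if the ray (t >= 0) overlaps the axis-aligned box."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0 = (lo - o) / d
        t1 = (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval of t values that lie inside every slab so far.
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True

if __name__ == "__main__":
    unit_box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
    print(ray_hits_aabb((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box))
    print(ray_hits_aabb((3.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box))
```

Only when the box test passes does the traversal visit the node's children or, at a leaf, run the ray-triangle tests, so most of the scene is skipped for any given ray.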

Adaptive Sync on desktop was different. There was no standard.

Timeline:

- October 2013 at Nvidia Montreal Event: GSync was announced with availability in Q1 2014.

- January 2014 at CES: Freesync was announced and demonstrated on Toshiba laptops using eDP.

- May 2014: DisplayPort 1.2a specification got the addition of Adaptive Sync. DisplayPort 1.2a spec was from January 2013 but did not include Adaptive Sync until then.

- June 2014 at Computex: Freesync prototype monitors were demoed.

- Nov 2014 at AMD's Future of Computing Event: Freesync monitors announced with availability in Q1 2015.

Yeah, Nvidia is evil for doing proprietary stuff and not pushing for a standard. However, you must admit it makes sense from a business perspective. They control the availability and quality of the product, the latter of which was a serious problem in early Freesync monitors. Gsync led Freesync by an entire year on the market. Freesync was a clear knee-jerk reaction from AMD. There was simply no way they could avoid responding.