Tuesday, April 23rd 2019

Tesla Dumps NVIDIA, Designs and Deploys its Own Self-driving AI Chip

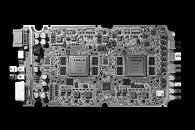

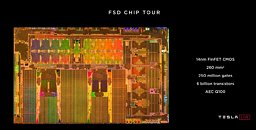

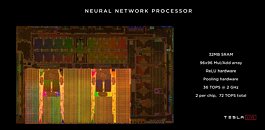

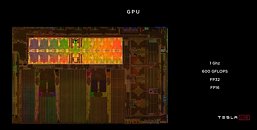

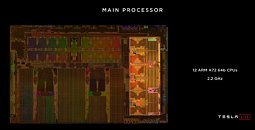

Tesla announced the development of its own self-driving car AI processor, which runs the company's Autopilot feature across its product line. The company had been relying on NVIDIA's DRIVE hardware for Autopilot. Called the Tesla FSD Chip (Full Self-Driving), the processor has been deployed in the latest batches of Model S and Model X since March 2019, and the company looks to expand it to its popular Model 3. The Tesla FSD Chip is a custom ASIC of 250 million gates across 6 billion transistors, crammed into a 260 mm² die built on the 14 nm FinFET process at a Samsung Electronics fab in Texas. The chip packs 32 MB of SRAM cache, a 96x96 mul/add array, and a cumulative performance of 72 TOPS per die at its rated clock speed of 2.00 GHz.
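The 72 TOPS figure can be roughly reproduced from the array size and clock speed. The sketch below is a back-of-the-envelope estimate, not Tesla's own methodology. It assumes each multiply-accumulate (MAC) counts as two operations per cycle. Because a single 96x96 array at 2.00 GHz works out to only about 36.9 TOPS, it also assumes each die carries two such arrays, which the article does not state. The `peak_tops` helper is hypothetical.

```python
# Back-of-the-envelope peak throughput for a multiply-accumulate (MAC) array.
# Assumptions (not stated in the article): one multiply + one add per MAC per
# cycle counts as 2 operations, and each die carries two 96x96 arrays.

def peak_tops(rows: int, cols: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak tera-operations per second for a rows x cols MAC array."""
    macs = rows * cols
    return macs * ops_per_mac * clock_hz / 1e12

per_array = peak_tops(96, 96, 2.0e9)   # one 96x96 array at 2.00 GHz
per_die = 2 * per_array                # assumed: two arrays per die
per_board = 2 * per_die                # two chips per Autopilot board (per the article)

print(f"per array: {per_array:.2f} TOPS")  # per array: 36.86 TOPS
print(f"per die:   {per_die:.2f} TOPS")    # per die:   73.73 TOPS
print(f"per board: {per_board:.2f} TOPS")  # per board: 147.46 TOPS
```

Under these assumptions the per-die result lands close to the quoted 72 TOPS, and the two-chip board lands near the 144 TOPS figure a commenter cites further down.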

A typical Autopilot logic board uses two of these chips. Tesla claims that the chip offers "21 times" the performance of the NVIDIA chip it's replacing. Elon Musk referred to the FSD Chip as "the best chip in the world," and not just on the basis of its huge performance uplift over the previous solution. "Any part of this could fail, and the car will keep driving. The probability of this computer failing is substantially lower than someone losing consciousness - at least an order of magnitude," he added. Slides with on-die details follow.
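Musk's claim that any part could fail without stopping the car describes redundancy across the two chips on the board. The sketch below shows one generic way two independent compute channels can be arbitrated. It is a hypothetical dual-modular-redundancy pattern: the `arbitrate` function, the plan strings, and the `SAFE_STOP` fallback are illustrative assumptions, not Tesla's documented design.

```python
from typing import Optional

# Hypothetical dual-channel arbitration sketch (dual modular redundancy).
# The Plan type, SAFE_STOP fallback and agreement rule are illustrative
# assumptions, not Tesla's documented implementation.

Plan = str
SAFE_STOP: Plan = "SAFE_STOP"

def arbitrate(plan_a: Optional[Plan], plan_b: Optional[Plan]) -> Plan:
    """Pick a driving plan from two independent channels; None means that channel failed."""
    if plan_a is not None and plan_b is not None:
        # Both channels alive: act only if they agree, otherwise fall back.
        return plan_a if plan_a == plan_b else SAFE_STOP
    if plan_a is not None:
        return plan_a  # channel B failed; keep driving on channel A
    if plan_b is not None:
        return plan_b  # channel A failed; keep driving on channel B
    return SAFE_STOP   # both channels failed

print(arbitrate("keep_lane", "keep_lane"))    # keep_lane
print(arbitrate("keep_lane", None))           # keep_lane
print(arbitrate("keep_lane", "change_left"))  # SAFE_STOP
```

Requiring agreement while both channels are healthy catches a silently faulty channel, while falling back to the surviving channel keeps the car driving after a hard failure.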

39 Comments on Tesla Dumps NVIDIA, Designs and Deploys its Own Self-driving AI Chip

It's being manufactured at Samsung in Austin, Texas, not Korea. That came up in the Q&A during their presentation. The way they described it, in both the presentation and the Q&A, is that it was purpose-built for cars rather than being Nvidia's general-purpose kit. They also said it would save them 20% per unit/car.

Level 5 self-driving (aka full autonomy) by next year... would be a miracle.

This falls into "I want to believe" UFO-fanatic territory...

But who knows, maybe they know something that no one else does.

If it DOES happen and regulators write such cars into law, I personally couldn't be happier, because I'm not allowed to drive due to some medical issues. But I would love to buy a car that drives me... especially one that doesn't cost me anything for "fuel"!

They just replaced the inferencing hardware in the cars, the DRIVE AGX platform.

2 Variants: Xavier & Pegasus

Xavier delivers 30 TOPS of performance while consuming only 30 watts of power.

Pegasus™ delivers 320 TOPS with an architecture built on two NVIDIA® Xavier™ processors and two next-generation TensorCore GPUs. (TU104)

It took a bit of digging, as they list twin TU104 GPUs, but it's actually a heavily cut-down variant, the T4. It only uses 70 W each for 130 TOPS per card. Also, they don't sell the T4 in the SXM2 form factor outside the DRIVE platform... That puts platform power at ~200 W.

(The original announcement said 500 W TDP, but that was before the T4, whose inferencing specs this matches. A full Quadro 5000 would be ~265 W each, pushing the total past that 500 W TDP while still falling short of the inferencing spec.)
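The commenter's numbers can be checked by simple addition. The sketch below sums the per-component figures quoted above (two Xavier SoCs at 30 TOPS / 30 W each, two T4-class GPUs at 130 TOPS / 70 W each); these are the thread's figures, not measured values, and the `components` table is just an illustrative layout.

```python
# Reconstructing DRIVE AGX Pegasus totals from the figures quoted in this thread.
# These are the commenter's numbers, not measured values.

components = {
    # name: (count, TOPS each, watts each)
    "Xavier": (2, 30, 30),
    "T4-class GPU": (2, 130, 70),
}

total_tops = sum(n * tops for n, tops, _ in components.values())
total_watts = sum(n * watts for n, _, watts in components.values())

print(f"total: {total_tops} TOPS at ~{total_watts} W")          # total: 320 TOPS at ~200 W
print(f"efficiency: {total_tops / total_watts:.2f} TOPS/W")   # efficiency: 1.60 TOPS/W
```

The sum matches NVIDIA's 320 TOPS headline and the ~200 W platform estimate, which supports the T4 reading over a full-power TU104 part.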

This replacement is a 144 TOPS solution, which is likely nearly as efficient as the AGX Xavier, but I can't find its power usage anywhere.

So the improvement is not 21x. They quoted Nvidia's solution at 20 TOPS, not 30, though that might have been the first-gen unit they were replacing.
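The point about raw compute can be made concrete with the thread's own numbers: 144 TOPS for Tesla's two-chip board against 20 or 30 TOPS for the NVIDIA hardware it replaced. This is a minimal sketch of peak-TOPS ratios only; it says nothing about real workload throughput.

```python
# Speed-up in raw peak compute alone, using figures quoted in this thread.
tesla_board_tops = 144
ratios = {nvidia_tops: tesla_board_tops / nvidia_tops for nvidia_tops in (20, 30)}

for nvidia_tops, ratio in ratios.items():
    print(f"vs {nvidia_tops} TOPS: {ratio:.1f}x")
# vs 20 TOPS: 7.2x
# vs 30 TOPS: 4.8x
```

Neither ratio comes close to 21x, so the headline figure must be measuring something other than peak TOPS.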

Tesla announced in August 2018 that it would end its partnership with Nvidia, but the first official confirmation that it was building an AI chip came in Q4 2017. A long time ago.

And of course they're "dumping" the inference chip - the one put inside a car.

Whatever they use for learning most likely stays the same. It's very unlikely Tesla could develop a functioning GPU, let alone a competitor to DGX-1.

Of course they're looking for a way to save money, since Tesla is once again burning cash.

Not happening.

No. It will take decades before we reach Level 5, i.e. cars that can drive themselves in all conditions. Don't hold your breath.

Technologically we're around level 3, but it still needs a lot of testing and, obviously, legislation. That's another 5 years, maybe more.

21x refers to the number of frames it can process per second, compared with the previous hardware.

Moreover, what are CPUs and GPUs supposed to be good at, if not at computing?

And I wouldn't really call cars a "niche market"... ;-)

The really interesting part is that the chip Tesla made is rather weak for full autonomous driving. Nvidia's solution is more than twice as powerful.

No offense, but you can't be serious saying things like that.

You'd be happy if a leading AI chip company left this business and focused on gaming?

That is just dumb.

1. If your car crashes into another one, a cyclist, or a pedestrian, who will pay for the damages and who will go to jail for negligence? You? Will you even carry insurance? The automaker?

2. If you "fuel" from the residential electrical grid, how will you pay for the infrastructure (roads/streets) that your car is using? Right now those are paid for by the gas tax. Would you be OK with having a GPS in the car and being taxed per mile driven?