Wednesday, June 12th 2019

NVIDIA's SUPER Tease Rumored to Translate Into an Entire Lineup Shift Upwards for Turing

NVIDIA's SUPER teaser hasn't crystallized into something physical as of now, but we know it's coming - NVIDIA themselves saw to it that our (singularly) collective minds would be buzzing about what that teaser meant, looking to steal some thunder from AMD's E3 showing. Now, that teaser seems to be coalescing into something concrete within the industry: an entire lineup upgrade for Turing products, with NVIDIA pulling their chips up one rung of the performance ladder across their entire lineup.

Apparently, NVIDIA will be looking to increase performance across the board by shuffling their chips down one product tier whilst keeping the current pricing structure. This means that NVIDIA's TU106 chip, which powered their RTX 2070 graphics card, will now be powering the RTX 2060 SUPER (with a reported core count of 2176 CUDA cores). The TU104 chip, which powers the current RTX 2080, will in the meantime be powering the SUPER version of the RTX 2070 (a reported 2560 CUDA cores are expected to be onboard), and the TU102 chip, which powered their top-of-the-line RTX 2080 Ti, will be brought down to the RTX 2080 SUPER (specs place this at 8 GB GDDR6 VRAM and 3072 CUDA cores). This paves the way for an even more powerful SKU in the RTX 2080 Ti SUPER, which should be launched at a later date. Salty waters say the RTX 2080 Ti SUPER will feature an unlocked chip which could be allowed to convert up to 300 W into graphics horsepower, so that's something to keep an eye - and a power meter - on, for sure. Less defined talks suggest that NVIDIA will be introducing an RTX 2070 Ti SUPER equivalent with a new chip as well.

This means that NVIDIA will be increasing performance by an entire tier across their Turing lineup, thus bringing improved RTX performance to lower pricing brackets than could be achieved with their original 20-series lineup. Industry sources (independently verified) have put it forward that NVIDIA plans to announce - and perhaps introduce - some of its SUPER GPUs as soon as next week.

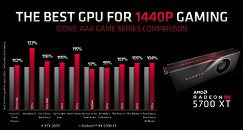

Should these new SKUs dethrone NVIDIA's current Turing series from their current pricing positions, and increase performance across the board, AMD's Navi may find itself thrown into a chaotic market that it was never meant to be in - the RX 5700 XT for $449 features performance that's on par with or slightly higher than NVIDIA's current RTX 2070, but the SUPER version seems to pack in just enough extra cores to offset that performance difference and then some, whilst also offering raytracing.

Granted, NVIDIA's TU104 chip powering the RTX 2080 does feature a grand 545 mm² area, whilst AMD's RX 5700 XT makes do with less than half that at 251 mm² - barring different wafer pricing for the newer 7 nm technology employed by AMD's Navi, this means that AMD's dies are cheaper to produce than NVIDIA's, and a price correction for AMD's lineup should be pretty straightforward whilst allowing AMD to keep healthy margins.
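To put the die-size argument into rough numbers, here is a minimal back-of-the-envelope sketch. It uses only the two die areas quoted above; the 300 mm wafer diameter and the textbook gross-dies-per-wafer approximation are assumptions added for illustration, and the estimate ignores yield, scribe lines, and the per-wafer price difference between process nodes. The function and variable names are hypothetical.

```python
import math

# Die areas as quoted in the article (mm^2).
TU104_AREA_MM2 = 545.0
RX_5700_XT_AREA_MM2 = 251.0


def gross_dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """Rough count of candidate dies on a round wafer.

    Assumption: the common approximation
        wafer_area / die_area - (pi * diameter) / sqrt(2 * die_area)
    where the second term accounts for partial dies lost at the edge.
    Yield and scribe lines are ignored.
    """
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)


area_ratio = RX_5700_XT_AREA_MM2 / TU104_AREA_MM2
tu104_dies = gross_dies_per_wafer(TU104_AREA_MM2)
navi_dies = gross_dies_per_wafer(RX_5700_XT_AREA_MM2)

print(f"RX 5700 XT die is {area_ratio:.0%} the size of TU104")
print(f"Gross dies per 300 mm wafer: TU104 ~{tu104_dies}, RX 5700 XT ~{navi_dies}")
```

Under these assumptions the smaller die yields more than twice as many candidates per wafer, which is the basis for the cost argument; how much of that advantage survives depends on 7 nm wafer pricing and yields, neither of which is known here.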

Sources:

WCCFTech, Videocardz


126 Comments on NVIDIA's SUPER Tease Rumored to Translate Into an Entire Lineup Shift Upwards for Turing

They throw in 3500 cuda and 90% of 2080Ti's RT capability and I might get one at $699

If there is a price adjustment downwards with the Super lineup then that would be nice but that's just a rumor right now.

It just means that there are other products on the market that can get more fps.

Plus, this is just spoiler PR BS; the cards are not hitting shelves for months, and then apparently it won't be all at the same time.

I'm not linking the wccftech article.

So Trparky, they are undoing the upsell, apparently. I would be livid, personally.

You are right to point out newer nodes tend to be more expensive than older ones. At least while they coexist.

syncedreview.com/2019/03/21/nvidia-ceo-says-no-rush-on-7nm-gpu-company-clearing-its-crypto-chip-inventory/

Plus, Nvidia really doesn't need 7nm now.

Nvidia, on the other hand, has a superior architecture that achieves better efficiency on an "inferior" node. They will not move to a new node until they need to, and considering the volumes of large chips shipped by Nvidia vs. AMD, Nvidia needs a more mature node before they move production.

Really? So AMD assumed that Nvidia wouldn't update their lineup for the next several years?

A few years ago Nvidia used to do mid-life upgrades of their generations every year or so.

AMD had to go to 7nm out of necessity to compete. Nvidia for the time being has no need for that, so they stick to what is more affordable.