Thursday, February 27th 2020

AMD Gives Itself Massive Cost-cutting Headroom with the Chiplet Design

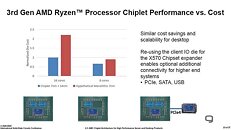

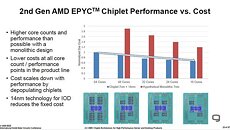

At its 2020 IEEE ISSCC keynote, AMD presented two slides detailing the cost savings from its bold decision to adopt the MCM (multi-chip module) approach not just for its enterprise and HEDT processors, but also for its mainstream desktop ones. By building only the components that tangibly benefit from a cutting-edge fabrication process, namely the CPU cores, on 7 nm, and leaving the other components on relatively inexpensive 12 nm, AMD maximizes its 7 nm foundry allocation: that node only has to produce small 8-core CCDs (CPU complex dies), which are combined to reach AMD's target core counts. With this approach, AMD is able to fit up to 16 cores onto its AM4 desktop socket using two chiplets, and up to 64 cores using eight chiplets on its SP3 and sTRX4 sockets.

In the slides above, AMD compares the cost of its current 7 nm + 12 nm MCM approach to a hypothetical monolithic die it would have had to build entirely on 7 nm (including the I/O components). The slides suggest that a single-chiplet "Matisse" MCM (e.g. Ryzen 7 3700X) costs about 40% less than a dual-chiplet "Matisse" (e.g. Ryzen 9 3950X). Had AMD opted to build a monolithic 7 nm die with 8 cores and all the components of the I/O die, it would cost roughly 50% more than the current 1x CCD + IOD solution. A monolithic 7 nm die with 16 cores and the I/O components would cost 125% more than the current 2x CCD + IOD solution. AMD hence enjoys massive headroom for cost-cutting. The price of the flagship 3950X could be close to halved (from its current $749 MSRP), and AMD could turn up the heat on Intel's upcoming Core i9-10900K by significantly lowering the price of its 12-core 3900X from its current $499 MSRP. The company will also enjoy more price-cutting headroom for its 6-core Ryzen 5 SKUs than it did with previous-generation Ryzen 5 parts based on monolithic dies.
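To make the percentages above easier to compare, here is a minimal Python sketch that puts all four configurations on one scale. It normalizes the dual-chiplet "Matisse" to 1.0 and applies the figures quoted in the article. The function name and dictionary labels are made up for illustration, and the 125% figure is assumed to be relative to the dual-chiplet part. The results are rough ratios read off the slides, not AMD's actual cost data.

```python
# Relative cost of each configuration, normalized so that the
# dual-chiplet "Matisse" (2x CCD + IOD) equals 1.0.
# All percentages are the approximate figures quoted in the article.


def relative_costs(dual_chiplet=1.0):
    """Return relative costs for the four configurations discussed.

    Assumption: the "125% more" figure for the 16-core monolithic die
    is measured against the dual-chiplet part.
    """
    single_chiplet = dual_chiplet * (1 - 0.40)   # ~40% cheaper than dual-chiplet
    mono_8_core = single_chiplet * (1 + 0.50)    # ~50% more than 1x CCD + IOD
    mono_16_core = dual_chiplet * (1 + 1.25)     # ~125% more than 2x CCD + IOD
    return {
        "1x CCD + IOD (8 cores)": single_chiplet,
        "monolithic 7 nm (8 cores)": mono_8_core,
        "2x CCD + IOD (16 cores)": dual_chiplet,
        "monolithic 7 nm (16 cores)": mono_16_core,
    }


def chiplet_saving(chiplet_cost, monolithic_cost):
    """Fraction saved by the chiplet design versus the monolithic die."""
    return 1 - chiplet_cost / monolithic_cost


if __name__ == "__main__":
    costs = relative_costs()
    for name, cost in costs.items():
        print(f"{name:<28} {cost:.2f}x")

    saving_8 = chiplet_saving(costs["1x CCD + IOD (8 cores)"],
                              costs["monolithic 7 nm (8 cores)"])
    saving_16 = chiplet_saving(costs["2x CCD + IOD (16 cores)"],
                               costs["monolithic 7 nm (16 cores)"])
    print(f"8-core chiplet saving:  {saving_8:.0%}")
    print(f"16-core chiplet saving: {saving_16:.0%}")
```

Under these assumptions, the chiplet design saves about a third of the cost at 8 cores and more than half at 16 cores. That is consistent with the article's point that the advantage grows with core count.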

Source:

Guru3D

89 Comments on AMD Gives Itself Massive Cost-cutting Headroom with the Chiplet Design

It might offload the CPU but you lose precious content to view.

Besides, you're comparing Intel's 2006 pricing with AMD's 2009 pricing. Core 2 Quad was not expensive in 2009.

But if that's what you needed, kudos to you.

I think we're done, and offtopic.

Both companies ask as much as they can, which means AMD product is worth $6500. End of story.

Well, obviously. If Intel manages to spend less on developing new nodes and new architectures, they make more money.

Since AMD decided to build their lineup on a more advanced and modern process, they have to pay more.

That's the whole point, isn't it?

Intel is desperately milking everyone and it's against the interests of the society as a whole.

First of all: that's just the cost of silicon wafer.

But more importantly - in case you've somehow missed it: AMD outsources production to TSMC. It's a separate company.

OMG you're like a semi-intelligent bot. :)

If we assume an OEM is rational and buys the cheaper product*, they would still buy a $10k EPYC over a $20k Xeon - if EPYC was worth $10k.

If AMD sells them for $6.5k, it means that's the actual value (on average).

Of course all of that is true when we assume both Intel and AMD actually ask the suggested price. There's a good chance OEMs pay Intel a lot less. Nevertheless, gross margin remains higher.

Yes, all companies should give products away for free. The society would benefit for sure. :)

*) and they do, because doing otherwise could be classified as misconduct - and most large OEMs are public companies.

Oh noes, C2Q had slightly higher IPC. Whatever shall I do? Meanwhile, you had to use FSB with a really good MB to get nice clocks on the C2Qs (or buy the expensive models with higher stock clock).

Nope, I made the right choice.

You can't upgrade a Core 2 Quad system with anything.

With chiplets, the user does get higher performance, better power consumption and lower cost.

Ryzen 3000 series Zen 2 always felt like a compromise to me.

It does kind of suggest though that we'll see Zen 3 sporting a 12nm I/O die.

Though the need for large caches to offset those latency penalties does present its own issues.