Sunday, June 21st 2020

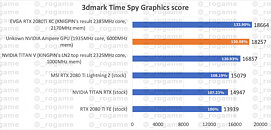

NVIDIA GeForce "Ampere" Hits 3DMark Time Spy Charts, 30% Faster than RTX 2080 Ti

An unknown NVIDIA GeForce "Ampere" GPU model has surfaced in the 3DMark Time Spy online database. We don't know whether this is the RTX 3080 (the RTX 2080 successor) or the top-tier RTX 3090 (the RTX 2080 Ti successor). Rumored specs of the two are covered in our older article. The 3DMark Time Spy score unearthed by _rogame (Hardware Leaks) is 18257 points, which is close to 31 percent faster than the RTX 2080 Ti Founders Edition, 22 percent faster than the TITAN RTX, and just a tiny bit slower than KINGPIN's record-setting EVGA RTX 2080 Ti XC. Futuremark SystemInfo reads the GPU clock speed of the "Ampere" card as 1935 MHz, and its memory clock as "6000 MHz." Normally, SystemInfo reads the actual memory clock (i.e. 1750 MHz for 14 Gbps effective GDDR6). Perhaps SystemInfo isn't yet optimized for reading memory clocks on "Ampere."
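For illustration, the memory-clock convention mentioned above can be expressed as simple arithmetic. This is a minimal sketch that assumes the 8:1 ratio implied by the article's own example (1750 MHz reported for 14 Gbps effective GDDR6); the function names are hypothetical. Reading the "6000 MHz" figure with the same convention gives 48 Gbps, far beyond any GDDR6 speed shipping in 2020, which is consistent with the suspicion that SystemInfo is misreading the card.

```python
# Ratio implied by the article: 14 Gbps effective GDDR6 <-> 1750 MHz reported.
# Assumption: the same 8:1 convention applies to the card being read.
GDDR6_RATIO = 14_000 / 1_750  # = 8.0


def reported_clock_mhz(effective_gbps: float) -> float:
    """Clock SystemInfo would normally report for a given effective data rate."""
    return effective_gbps * 1000 / GDDR6_RATIO


def effective_gbps(reported_mhz: float) -> float:
    """Effective per-pin data rate implied by a reported memory clock."""
    return reported_mhz * GDDR6_RATIO / 1000


if __name__ == "__main__":
    print(reported_clock_mhz(14))  # 1750.0 MHz, the usual reading
    print(effective_gbps(6000))    # 48.0 Gbps if "6000 MHz" were read the same way
```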

Source:

HardwareLeaks

67 Comments on NVIDIA GeForce "Ampere" Hits 3DMark Time Spy Charts, 30% Faster than RTX 2080 Ti

and if you claim otherwise you're a fanboy :roll:

sweetie, image sharpening is hardly a feature; calling it that is a stretch in 2020. It's the easiest trick a GPU can do to produce an image that may look better to the untrained eye.

NVIDIA has had that feature via Freestyle for years, but "folks from the known camp" tend to forget about it, until AMD has it 2 years later, that is.

And Hardware Unboxed has their own video on DLSS 2.0; I guess you missed that one. I advise you not to watch it, because it'll just make you sad how good they say it is.

let me inform you since you're slow to learn new facts

Nobody directly compared it to the image upscaler called DLSS 2.0.

If you are too concerned about the naming:

It is notable that using neural-network-like processing to upscale images is not something either of the GPU manufacturers pioneered:

towardsdatascience.com/deep-learning-based-super-resolution-without-using-a-gan-11c9bb5b6cd5

Sad is the reality distortion thing; there is nothing sad about upscaling doing its job.

here,even your favorite amd-leaning data manipulating channel has to admit that

at 1440p that was 25% maybe

this is rtx whangdoodle the thread should say

This does not mean there is just one dlss 2.0 review out there.

and dlss 2.0 is not trained on a per-game basis.

but how would you know that? There have been reviews for 4 months and you don't even know.

Why would anyone compare image sharpening to image reconstruction, really? Make sense much?

nvidia vs amd image sharpening in driver makes sense.

dlss vs image sharpening ? why ?

To get a 40% performance uplift from a resolution drop + sharpening, you have to drop the resolution by 40% and apply tons of sharpening that may look good to an untrained eye but really bad on closer inspection.

You're not getting the same image quality as native resolution, versus DLSS Quality preset with said performance uplift.

Maybe DLSS Performance vs. a resolution scale drop + sharpening would be comparable in terms of quality, but then again, with the Performance preset you're getting double the framerate.

www.purepc.pl/nvidia-dlss-20-test-wydajnosci-i-porownanie-jakosci-obrazu?page=0,8

Sorry, but what you're arguing here is just irrelevant.

This is an Ampere performance rumour thread.

So, on topic.

Did you see the PCIe version of the A100?

250 watts, not 400 like SXM, and only a 10% performance loss.

To me this indicates they really are pushing the silicon past its efficiency curve: 150 watts for that last 10%.
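Taking the commenter's A100 figures at face value (400 W for SXM, 250 W for PCIe, roughly 10% lower performance on PCIe; these are quoted claims, not verified measurements), the efficiency argument works out as follows. This is only a back-of-the-envelope sketch; the constant and function names are hypothetical.

```python
# Figures as quoted in the comment (not independently verified):
# A100 SXM at 400 W vs. A100 PCIe at 250 W with ~10% lower performance.
SXM_WATTS = 400
PCIE_WATTS = 250
PCIE_RELATIVE_PERF = 0.90  # SXM performance normalized to 1.0


def perf_per_watt(relative_perf: float, watts: float) -> float:
    """Relative performance delivered per watt of board power."""
    return relative_perf / watts


sxm_eff = perf_per_watt(1.0, SXM_WATTS)
pcie_eff = perf_per_watt(PCIE_RELATIVE_PERF, PCIE_WATTS)

# How many times more efficient the lower-power configuration is
efficiency_gain = pcie_eff / sxm_eff

# The "last 10%": extra watts spent and performance gained per extra watt
extra_watts = SXM_WATTS - PCIE_WATTS
extra_perf = 1.0 - PCIE_RELATIVE_PERF
marginal_eff = extra_perf / extra_watts

if __name__ == "__main__":
    print(f"SXM:  {sxm_eff:.5f} perf/W")
    print(f"PCIe: {pcie_eff:.5f} perf/W")
    print(f"PCIe is {efficiency_gain:.2f}x as efficient")
    print(f"Last 10%: {extra_watts} W extra, {marginal_eff:.5f} perf/W at the margin")
```

Under these assumptions the PCIe card delivers about 1.44x the performance per watt, and the final 150 W buys performance at roughly a quarter of the SXM card's average efficiency, which is the "past the efficiency curve" point being made.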

We're expecting up to 300 watts TDP on a 3080 Ti, so it seems like they're pushing that curve.

tdp is unknown

Based on cooling and hypothetical common sense.

so yeah,400w and up to 300w are kinda different,right ?

Because marketing. Puzzled where you've seen the power consumption figures.

why compare resolution drop w. image reconstruction to just a pure,simple resolution scale drop.

is resolution dropping a new feature now ? :laugh:

www.techpowerup.com/forums/threads/nvidia-announces-a100-pcie-tensor-core-accelerator-based-on-ampere-architecture.268842/#post-4294639

SXM uses 400 watts, it says so.

PCIe uses 250 watts, it says so.

The 2080 Ti uses nearly 300 watts, TPU showed.

the cooler we saw a week or two ago was /300 watts

Stay on topic and keep it civil

Thank You and Have a Good Day

I mean, I could understand a $1000 2080 Ti because the competition wasn't there (it still isn't 2 years later, and might not be this year), but for +30-35% over a 1080 Ti? Not really.