Saturday, September 19th 2020

NVIDIA Readies RTX 3060 8GB and RTX 3080 20GB Models

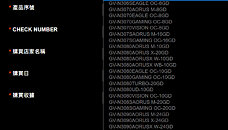

A GIGABYTE webpage meant for redeeming the RTX 30-series Watch Dogs Legion + GeForce NOW bundle lists the graphics cards eligible for the offer, including a large selection based on unannounced RTX 30-series GPUs. Among these are references to a "GeForce RTX 3060" with 8 GB of memory and, more interestingly, a 20 GB variant of the RTX 3080. The list also confirms the RTX 3070S with 16 GB of memory.

The RTX 3080 launched last week comes with 10 GB of memory across a 320-bit memory interface, using 8 Gbit memory chips, while the RTX 3090 reaches its 24 GB by piggy-backing two of these chips per 32-bit channel (chips on either side of the PCB). It's conceivable that the RTX 3080 20 GB will adopt the same method. There is a vast price gap between the RTX 3080 10 GB and the RTX 3090, which NVIDIA could look to fill with the 20 GB variant of the RTX 3080. Whether you should wait for the 20 GB variant of the RTX 3080 or pick up the 10 GB variant right now will depend on the performance gap between the RTX 3080 and RTX 3090. We'll answer this question next week.
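The memory arithmetic above can be checked with a short sketch. The function name `vram_gb` and its parameters are hypothetical, chosen only for illustration, and the 384-bit bus width used for the RTX 3090 comes from NVIDIA's published specifications rather than from the listing discussed here. The 20 GB result simply assumes the RTX 3080 20 GB would double up chips the way the RTX 3090 does, which is unconfirmed.

```python
def vram_gb(bus_width_bits, chip_gbit=8, chips_per_channel=1, channel_bits=32):
    """Total memory in GB for a given bus width and chip layout.

    Illustrative helper, not an NVIDIA tool: one memory channel is assumed
    to be 32 bits wide, and each chip holds chip_gbit gigabits.
    """
    channels = bus_width_bits // channel_bits      # e.g. 320 / 32 = 10 channels
    total_gbit = channels * chips_per_channel * chip_gbit
    return total_gbit // 8                         # 8 Gbit = 1 GB


# RTX 3080: 320-bit bus, one 8 Gbit chip per channel
print(vram_gb(320))
# RTX 3090: 384-bit bus (assumed from published specs), two chips per channel
print(vram_gb(384, chips_per_channel=2))
# Speculative RTX 3080 20 GB: same 320-bit bus, two chips per channel
print(vram_gb(320, chips_per_channel=2))
```

The same 20 GB figure would also come out of a single 16 Gbit chip per channel (`vram_gb(320, chip_gbit=16)`), so the capacity alone does not reveal which layout a 20 GB card would use.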

Source: VideoCardz

157 Comments on NVIDIA Readies RTX 3060 8GB and RTX 3080 20GB Models

And for the last time, there was no way to launch new SKUs without hurting the sales of the older ones, it's natural. As soon as the 3080 20GB comes out, the 10GB version will be much less desirable, that's how it goes.

As for their lineup being confusing, it may have been part of their strategy, or maybe they don't think it is important. Just look at their mobile lineup. There was no pressure from AMD there, and it's still all over the place, with Super and Max-Q SKUs where you have no idea what to expect in terms of performance if you haven't watched 10 comparative reviews.

Both Navi and (some) Super cards were launched in July 2019, right? If this was a response, shouldn't it have come after the Navi release? How are the Super cards neutered... by software or laser? You can't enable more bits, so they seem laser cut. While rumors abound, if this was a response and not something planned, I find it difficult to believe they could software-lock or laser-cut the dies and get them out that fast. It wasn't like they didn't know AMD would be coming out with something. They didn't read a rumor on wccftech and suddenly start looking for ways to get a more competitive product out there.

So yes, the timing was likely in response, sure, but to think these were not planned beforehand feels a bit myopic to me. I'd bet my life Super cards would have come out regardless of AMD.

Did I miss something?

Frankly, I think that this leak (the fact that they are preparing a 20GB version) hurts their image much more than the fact that the competition has 16GB. 12GB would've been enough for 4 years at 4k.

Well, it's almost impossible to get solid proof of this, but from what I have heard from people in the industry, they are an extremely agile company, capable of taking a decision in a matter of days and implementing it in a matter of weeks. For the Supers, they managed to get info about the performance and the pricing of the 5700XT, and I would argue that their response, albeit rushed, was much less botched than that of AMD, who had to apply a last-minute overclock on the card and drop its price, which led to problems with the card being too hot, loud and power-hungry.

That is probably why AMD has finally learned its lesson and there are almost no leaks coming out this time.

Loudness and temperatures are much more disturbing; users will only start complaining about their 3080s next summer, since all the cooling solutions seem to be good quality.

AMD has forced NV to respond with supers.

5700 series still sold beautifully despite being rather pricey by AMD's standards.

2070, 2070S, 2080, 2080S, 2080 Ti), with confusion about which SKUs were discontinued and which weren't, etc. My impression is that Nvidia wanted to demonstratively preempt AMD's launch while also seeing an opportunity to sell lower binned (partially disabled) dice that they previously had no use for, letting them allocate fully enabled chips entirely to higher margin enterprise products. This also speaks to the possibility of Turing yields being worse than initially planned, as a pure price cut would otherwise have made more sense, though there's also an argument here that Nvidia didn't want to establish a precedent for a $499 RTX xx80 series. Either way, even if Nvidia was early it was clearly a response from their side. Was it planned months before? Obviously. Was it part of their roadmap for Turing all along? I highly doubt that. So was it a response to AMD becoming more competitive in these market segments, even if AMD's GPUs weren't out yet? Absolutely.

Nvidia came out with cards and price points that fit the market at the time. Knowing AMD would respond eventually, surely they had something being cooked up.

Doubt it all you want... video cards aren't pulled out of asses (just Jensen's oven... :p). The Supers were all a part of things, surely.

We'll agree to disagree and move forward. ;)

Edit: nice ninja edit btw ;)

RE: The ninja edit... what's your point? It changed nothing. :rolleyes:

So basically, although it's got more performance, it's offered at a lower price. It was launched around the launch of the 5600XT and managed to steal the thunder of the AMD card, and many reviewers recommend the KO.

So no, this is not an EVGA move, it's just another brilliant marketing move from Nvidia, made in collaboration with a trusted partner.

And as for alleged immoral or anti-consumer practices, the companies that you are talking about are doing very well, which means they are doing what they should be doing. It's AMD who has to improve. You either adapt to the market, or you disappear.

I'm not saying I agree with it, and I certainly vote with my wallet as my conscience tells me, but that doesn't change reality: most people are not that disciplined or knowledgeable, or they simply do not care about these issues.

The only things that really stop companies from behaving very badly are existing laws and market acceptance. Even existing laws are not a hard line, because in many cases it is more profitable for these companies to break the rules and drag it out in court, instead of simply obeying them. Both Nvidia and Intel did this in the past (I don't know much about Apple) and it allowed them to stomp on some of their competitors. This is the reality, and understanding it is not naive, quite the contrary.

In the end, the success or failure of advances in GPUs will come down to how devs (get to) utilize them: whether or not they understand them, and whether or not they're workable. I'm seeing the weight move from tried and trusted VRAM capacity to different areas. Not all devs are going to keep up. In that sense it's sort of a good sign that Nvidia's system for Ampere is trying to mimic next-gen console features, but still. This is going to be maintenance intensive for sure.

I'll shut up about it now, I've had enough attention for my thoughts on the subject ;)