Saturday, September 19th 2020

NVIDIA Readies RTX 3060 8GB and RTX 3080 20GB Models

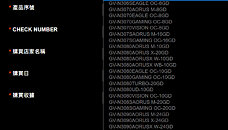

A GIGABYTE webpage meant for redeeming the RTX 30-series Watch Dogs Legion + GeForce NOW bundle lists the graphics cards eligible for the offer, including a large selection of those based on unannounced RTX 30-series GPUs. Among these are references to a "GeForce RTX 3060" with 8 GB of memory and, more interestingly, a 20 GB variant of the RTX 3080. The list also confirms the RTX 3070S with 16 GB of memory.

The RTX 3080 launched last week comes with 10 GB of memory across a 320-bit memory interface, using 8 Gbit memory chips, while the RTX 3090 achieves its 24 GB of memory by piggy-backing two of these chips per 32-bit channel (chips on either side of the PCB). It's conceivable that the RTX 3080 20 GB will adopt the same method. There is a vast price gap between the RTX 3080 10 GB and the RTX 3090, which NVIDIA could look to fill with the 20 GB variant of the RTX 3080. Whether you should wait for the 20 GB variant of the RTX 3080 or pick up the 10 GB variant right now will depend on the performance gap between the RTX 3080 and RTX 3090. We'll answer this question next week.
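The capacity figures above follow from simple arithmetic: one 8 Gbit (1 GB) chip per 32-bit channel, or two when chips are mounted on both sides of the PCB. Here is a minimal sketch of that calculation. The function name is hypothetical, and the 384-bit bus width used for the RTX 3090 is not stated in this article; it is assumed here from the card's published specifications.

```python
# Capacity arithmetic for the memory layouts described in the article.
CHANNEL_WIDTH_BITS = 32   # one chip (or a two-chip pair) sits on each 32-bit channel
CHIP_DENSITY_GBIT = 8     # 8 Gbit chips, i.e. 1 GB each


def memory_capacity_gb(bus_width_bits, chips_per_channel=1,
                       chip_density_gbit=CHIP_DENSITY_GBIT):
    """Return total memory in GB for a given bus width and chip layout."""
    channels = bus_width_bits // CHANNEL_WIDTH_BITS
    total_gbit = channels * chips_per_channel * chip_density_gbit
    return total_gbit / 8  # 8 Gbit per GB


print(memory_capacity_gb(320))      # RTX 3080: 10 channels, one chip each
print(memory_capacity_gb(384, 2))   # RTX 3090: 384-bit bus is an assumption, two chips per channel
print(memory_capacity_gb(320, 2))   # hypothetical RTX 3080 20 GB using the same two-chip method
```

Under these assumptions the three calls give 10, 24, and 20 GB, which is why doubling up chips on the existing 320-bit bus is a plausible route to a 20 GB card.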

Source: VideoCardz

157 Comments on NVIDIA Readies RTX 3060 8GB and RTX 3080 20GB Models

Price ~$350

Performance ~RTX 2070

As for the desperation part: I agree. Better to wait than to buy the first released, inferior product.

RTX 3060 8G 4864 ~~ RTX 2080/S ~~ 60% of RTX 3080

RTX 3080 10G 8704: the new shader is 31% faster than the 4352-shader Turing, and the memory is only 23% faster,

so to get the average of 31% the shader must be pulling ahead by being 39% faster ~~ 6144 shaders FP32 and 2560 INT32.
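The arithmetic in this comment can be reproduced as a quick check. This sketch assumes, as the commenter does, that the overall gain is a plain unweighted average of the shader gain and the memory gain; that is the commenter's simplification, not a real performance model, and the function name is hypothetical.

```python
def implied_shader_gain(overall_gain_pct, memory_gain_pct):
    """Solve overall = (shader + memory) / 2 for the shader gain."""
    return 2 * overall_gain_pct - memory_gain_pct


# Figures taken from the comment: 31% overall, 23% from memory.
print(implied_shader_gain(31, 23))

# The suggested FP32/INT32 split adds up to the full shader count.
print(6144 + 2560)
```

With those inputs the implied shader gain is 39%, and the split sums to the 8704 shaders quoted for the RTX 3080.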

As for creating a bottleneck, it's still going to be quite a while until GPUs saturate PCIe 4.0 x16 (or even 3.0 x16), but SSDs use a lot of bandwidth and need to communicate with the entire system, not just the GPU. Sure, the GPU will be what needs the biggest chunks of data the quickest, but chaining an SSD off the GPU still makes far less sense than just keeping it directly attached to the PCIe bus like we do today. That way everything gets near optimal access. The only major improvement over this would be the GPU using the SSD as an expanded memory of sorts (like that oddball Radeon Pro did), but that would mean the system isn't able to use it as storage. And I sincerely doubt people would be particularly willing to add the cost of another multi-TB SSD to their PCs without getting any additional storage in return.

3060 8GB 4864 cuda

there you have it, 8GB version is 30% faster,

Guess Nvidia doesn't agree and brings us the real deal after the initial wave of stupid bought the subpar cards.

Well played, Huang.

Why only 10 GB of memory for RTX 3080? How was that determined to be a sufficient number, when it is stagnant from the previous generation?

-

Justin Walker, Director of GeForce product management

We’re constantly analyzing memory requirements of the latest games and regularly review with game developers to understand their memory needs for current and upcoming games. The goal of 3080 is to give you great performance at up to 4k resolution with all the settings maxed out at the best possible price.

In order to do this, you need a very powerful GPU with high speed memory and enough memory to meet the needs of the games. A few examples. If you look at Shadow of the Tomb Raider, Assassin’s Creed Odyssey, Metro Exodus, Wolfenstein Youngblood, Gears of War 5, Borderlands 3 and Red Dead Redemption 2 running on a 3080 at 4k with Max settings (including any applicable high res texture packs) and RTX On, when the game supports it, you get in the range of 60-100fps and use anywhere from 4GB to 6GB of memory.

Extra memory is always nice to have but it would increase the price of the graphics card, so we need to find the right balance.

-

According to spec, the 3070S should land around 70% of the 3080, with the 2080 Ti being 76%. Pretty close.

I could see a future Radeon X700+ series and NVIDIA X070+ series of GPUs and their professional equivalents incorporating an option to install an NVMe PCIe 4.0 SSD (or 5.0, since that tech is supposedly due late next year or in 2022 and expected to last for quite a while) onto the card, as a way to boost available memory for either professional or gaming purposes. Or maybe Intel could beat the competition to market, using Optane add-ons to their own GPUs, acting more like reserve VRAM expansion thanks to higher read/write performance than typical NVMe, but lower than GDDR/HBM.

but I'm sure you could utilize all that memory one day, as some storage.