Saturday, September 19th 2020

NVIDIA Readies RTX 3060 8GB and RTX 3080 20GB Models

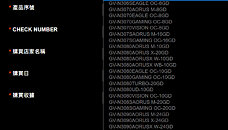

A GIGABYTE webpage meant for redeeming the RTX 30-series Watch Dogs Legion + GeForce NOW bundle lists the graphics cards eligible for the offer, including a large selection based on unannounced RTX 30-series GPUs. Among these are references to a "GeForce RTX 3060" with 8 GB of memory and, more interestingly, a 20 GB variant of the RTX 3080. The list also confirms the RTX 3070S with 16 GB of memory.

The RTX 3080 launched last week comes with 10 GB of memory across a 320-bit memory interface, using 8 Gbit memory chips, while the RTX 3090 achieves its 24 GB by piggy-backing two of these chips per 32-bit channel (chips on either side of the PCB). It's conceivable that the RTX 3080 20 GB will adopt the same method. There is a vast price gap between the RTX 3080 10 GB and the RTX 3090, which NVIDIA could look to fill with the 20 GB variant of the RTX 3080. Whether you should wait for the 20 GB variant of the RTX 3080 or pick up the 10 GB variant right now will depend on the performance gap between the RTX 3080 and RTX 3090. We'll answer this question next week.
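The capacity arithmetic above can be sketched in a few lines. This is a rough illustration, not a statement of NVIDIA's board design: the function name `vram_gb` and its parameters are made up for the example, the RTX 3090's 384-bit bus width comes from its public specifications rather than from the text above, and the 20 GB configuration is the speculated one.

```python
def vram_gb(bus_width_bits, chip_gbit=8, chips_per_channel=1):
    """Total memory in GB for a given bus width, chip density and chips per 32-bit channel."""
    channels = bus_width_bits // 32            # one 32-bit channel per memory chip position
    total_gbit = channels * chips_per_channel * chip_gbit
    return total_gbit // 8                     # 8 Gbit = 1 GB

print(vram_gb(320))                            # RTX 3080: 10 channels x 1 chip
print(vram_gb(384, chips_per_channel=2))       # RTX 3090: 12 channels x 2 chips (384-bit assumed from public specs)
print(vram_gb(320, chips_per_channel=2))       # speculated RTX 3080 20 GB: 10 channels x 2 chips
```

On a fixed bus width with the same 8 Gbit chips, the only step up from 10 GB is doubling to 20 GB, which is why no in-between capacity appears in the list.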

Source: VideoCardz

157 Comments on NVIDIA Readies RTX 3060 8GB and RTX 3080 20GB Models

i know its old tech but i think if i can get a cheap 6gb card .......

That being said the reason more memory is used on the 2080ti is because the more memory available the more allocations are going to take place. Similar to how Windows is going to report higher memory usage when more RAM is available. Memory allocation requests are queued up and may not take place at the exact time they are issued by the application.

even if Nvidia released 3080 with 384bit bus I still wouldn't buy it. save the money for later when we can have a decent 1008TBs 12GB card on 6nm EUV that clocks 30% higher.

Example: playing The Witcher 3 on an Xbox One with washed out textures at 900p is not the same as playing it on a similarly aged high-end PC with ultra settings at 1080p or 1440p.

The 3080 PCB already has the space for 2 extra VRAM modules

I had to lower some details on pretty much top of the line GPUs like Titan X Maxwell, 1080 Ti and 2080 Ti during the first year of owning them, not even at 4K. Ultra details setting is pretty much only used for benchmarks, IRL people tend to prefer higher FPS rather than some IQ you can't distinguish unless zooming 4x into a recording LOL.

The new norm for 2021 onward should be RT Reflections + High Details, let just hope so :D

Console performances have been above midrange PC of the same era in the past - PS3/Xbox360 were at the level of (very) high-end PC at launch. PS4/XB1 were (or at least seemed to be) an outlier with decidedly mediocre hardware. XBSX/PS5 are at the level of high-end hardware as of half a year ago when their specs were finalized which is back to normal. XBSS is kind of the weird red-headed stepchild that gets bare minimum and we'll have to see how it fares. PC hardware performance ceiling has been climbing for a while now. Yes, high-end stuff is getting incredibly expensive and gets worse and worse in terms of bang-per-buck but it is getting relatively more and more powerful as well.

Has many reasons to put RTX 30xx with "small" or "big" VRAM size in history. Regardless of whether and to what extent they are justified.

That being said, future-proofing by adding more VRAM is a poor solution. Sure, the GPU needs to have enough VRAM, there is no question about that. It needs an amount of VRAM and a bandwidth that both complement the computational power of the GPU, otherwise it will quickly become bottlenecked. The issue is that VRAM density and bandwidth both scale (very!) poorly with time - heck, base DRAM die clocks haven't really moved for a decade or more, with only more advanced ways of packing more data into the same signal increasing transfer speeds. But adding more RAM is very expensive too - heck, the huge framebuffers of current GPUs are a lot of the reason for high end GPUs today being $700-1500 rather than the $200-400 of a decade ago - die density has just barely moved, bandwidth is still an issue, requiring more complex and expensive PCBs, etc. I would be quite surprised if the BOM cost of the VRAM on the 3080 is below $200. Which then begs the question: would it really be worth it to pay $1000 for a 3080 20GB rather than $700 for a 3080 10GB, when there would be no perceptible performance difference for the vast majority of its useful lifetime?

The last question is particularly relevant when you start to look at VRAM usage scaling and comparing the memory sizes in question to previous generations where buying the highest VRAM SKU has been smart. Remember, scaling on the same-width bus is either 1x or 2x (or potentially 4x I guess), so like we're discussing here, the only possible step is to 20GB - which brings with it a very significant cost increase. The base SKU has 10GB, which is the second highest memory count of any consumer-facing GPU in history. Even if it's comparable to the likely GPU-allocated amount of RAM on upcoming consoles, it's still a very decent chunk. On the other hand, previous GPUs with different memory capacities have started out much lower - 3/6GB for the 1060, 4/8GB for a whole host of others, and 2/4GB for quite a few if you look far enough back. The thing here is: while the percentage increases are always the same, the absolute amount of VRAM now is massively higher than in those cases - the baseline we're currently talking about is higher than the high end option of the previous comparisons. What does that mean? For one, you're already operating at such a high level of performance that there's a lot of leeway for tuning and optimization. If a game requires 1GB more VRAM than what's available, lowering settings to fit that within a 10GB framebuffer will be trivial. Doing the same on a 3GB card? Pretty much impossible. A 2GB reduction in VRAM needs is likely more easily done on a 10GB framebuffer than a .5GB reduction on a 3GB framebuffer. After all, there is a baseline requirement that is necessary for the game to run, onto which additional quality options add more. Raising the ceiling for maximum VRAM doesn't as much shift the baseline requirement upwards (though that too creeps upwards over time) as it expands the range of possible working configurations.
Sure, 2GB is largely insufficient for 1080p today, but 3GB is still fine, and 4GB is plenty (at settings where GPUs with these amounts of VRAM would actually be able to deliver playable framerates). So you previously had a scale from, say, .5-4GB, then 2-8GB, and in the future maybe 4-12GB. Again, looking at percentages is misleading, as it takes a lot of work to fill those last few GB. And the higher you go, the easier it is to ease off on a setting or two without perceptibly losing quality. I.e. your experience will not change whatsoever, except that the game will (likely automatically) lower a couple of settings a single notch.

Of course, in the time it will take for 10GB to become a real limitation at 4k - I would say at minimum three years - the 3080 will likely not have the shader performance to keep up anyhow, making the entire question moot. Lowering settings will thus become a necessity no matter the VRAM amount.

So, what will you then be paying for with a 3080 20GB? Likely 8GB of VRAM that will never see practical use (again, it will more than likely have stuff allocated to it, but it won't be used in gameplay), and the luxury of keeping a couple of settings pegged to the max rather than lowering them imperceptibly. That might be worth it to you, but it certainly isn't for me. In fact, I'd say it's a complete waste of money.

It's true that consoles get more and more powerful with every generation, but so do PCs, and I honestly can't see a $500 machine beat a $2,000 one ever. And thus, game devs will always have to impose limitations to make games run as smoothly on consoles as they do on high-end PCs, making the two basically incomparable, even in terms of VRAM requirements.

- PS3's (Nov 2006) RSX is basically hybrid of 7800GTX (June 2005, $600) and 7900GTX (March 2006, $500).

- XBox360's (Nov 2005) Xenos is X1800/X1900 hybrid with X1800XL (Oct 2005, $450) probably the closest match.

While more difficult to compare, CPUs were high-end as well. Athlon 64 X2s came out in mid-2005. PowerPC-based CPUs in both consoles were pretty nicely multithreaded before that was a mainstream thing for PCs.

All I tried to say is, there's no point in drawing conclusions regarding VRAM requirements on PC based on how much RAM the newest Xbox and Playstation have (regardless of who started the conversation). Game devs can always tweak settings to make games playable on consoles, while on PC you have the freedom to choose from an array of graphics settings to suit your needs.

The best way to find out whether you have enough VRAM or not is to find the spot where you can measure symptoms of insufficient VRAM, primarily by measuring frame time consistency. Insufficient VRAM will usually cause significant stutter and sometimes even glitching.
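As a rough sketch of what "measuring frame time consistency" could look like in practice: the snippet below summarises a list of per-frame times. The function name `frame_time_report`, the 2x-median spike threshold and the sample captures are all assumptions made up for illustration; real numbers would come from a frame-time capture tool.

```python
import math
import statistics

def frame_time_report(frame_times_ms, spike_factor=2.0):
    """Summarise frame-time consistency for a list of per-frame times in milliseconds."""
    ordered = sorted(frame_times_ms)
    n = len(ordered)
    median = statistics.median(ordered)
    # Nearest-rank 99th percentile: 99% of frames finished at least this fast.
    p99 = ordered[math.ceil(n * 99 / 100) - 1]
    # Count frames slower than spike_factor times the median (an assumed threshold).
    spikes = sum(1 for t in ordered if t > spike_factor * median)
    return {
        "avg_ms": statistics.mean(ordered),
        "median_ms": median,
        "p99_ms": p99,
        "spikes": spikes,
    }

# Made-up captures: a steady run, and the same run with five 80 ms hitches.
smooth = [16.0] * 100
hitchy = [16.0] * 95 + [80.0] * 5

for name, capture in (("smooth", smooth), ("hitchy", hitchy)):
    print(name, frame_time_report(capture))
```

The average barely moves between the two captures, while the 99th-percentile frame time and the spike count change sharply, which is why average FPS alone can hide the stutter that a VRAM shortage tends to cause.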

As for the debate that keeps repeating itself with every new generation of hardware, RAM. Folks, just because @W1zzard did not encounter any issues with 10GB of VRAM in the testing done at this time does not mean that 10GB will remain enough in the future (near or otherwise). Technology always progresses. And software often precedes hardware. Therefore, if you buy a card now that has a certain amount of RAM on it and you just go with bog standard you may find yourself coming up short in performance later. 10GB seems like a lot now. But then so did 2GB just a few years ago. Shortsighted planning always ends poorly. Just ask the people that famously said "No one will ever need more than 640k of RAM."

A 3060, 3070 or 3080 with 12GB, 16GB or 20GB of VRAM is not a waste. It is an option that offers a level of future proofing. For most people that is an important factor because they only ever buy a new GPU every 3 or 4 years. They need all the future-proofing they can get. And before anyone says "Future-proofing is a pipe-dream and a waste of time.", put a cork in that pie-hole. Future-proofing a PC purchase by making strategic choices of hardware is a long practiced and well honored buying methodology.

The 20GB card is $300 more expensive, and for what? So that at some unclear point in the future, when it would be obsolete anyway, you could be granted one more game that runs a little better.

16GB price is very close to the 10GB card so you have to trade 30% less performance for 6GB more. I mean you are deliberately going to make a worse choice again because of some future uncertainty.

12GB only AMD will make a 192 bit card at this point. 3060 will be as weak as 2070. so why put 12GB on it.

Example? The GTX770. The 2GB version is pretty much unusable for modern games, but the 4GB versions are still relevant as they are still playable because of the additional VRAM. Even though the GPU dies are the same, the extra VRAM make all the difference. And it made a big difference then too. The cost? A mere $35 extra over the 2GB version. The same has been true throughout the history of GPU's regardless of who made them.

Your comparison to the 770 is as such a false equivalency: that comparison must then also assume that GPUs in the (relatively near) future will have >10GB of VRAM as a minimum, as that is what would be required for this amount to truly become a bottleneck. The modern titles you speak of need >2/<4 GB of VRAM to run smoothly at 1080p. Even the lowest end GPUs today come in 4GB SKUs, and two generations back, while you did have 2 and 3GB low-end options, nearly everything even then was 4GB or more. For your comparison to be valid, the same situation must then be true in the relatively near future, only 2GB gets replaced with 10GB. And that isn't happening. Baseline requirements for games are not going to exceed 10GB of VRAM in any reasonably relevant future. VRAM is simply too expensive for that - it would make the cheapest GPUs around cost $500 or more - DRAM bit pricing isn't budging. Not to mention that the VRAM creep past 2GB has taken years. To expect a sudden jump of, say, >3x (assuming ~3GB today) in a few years? That would be an extremely dramatic change compared to the only relevant history we have to compare to.

Besides, you (and many others here) seem to be mixing up two similar but still different questions:

a) Will 10GB be enough to not bottleneck the rest of this GPU during its usable lifespan? (i.e. "can it run Ultra settings until I upgrade?") and

b) Will 10GB be enough to not make this GPU unusable in 2-3 generations? (i.e. "will this be a dud in X years?")

Question a) is at least worth discussing, and I would say "maybe not, but it's a limitation that can be easily overcome by changing a few settings (and gaming at Ultra is kind of silly anyhow), and at those settings you'll likely encounter other limitations beyond VRAM". Question b), which is what you are alluding to with your GTX 770 reference, is pretty much out of the question, as baseline requirements (i.e. "can it run games at all?") aren't going to exceed 10GB in the next decade no matter what. Will you be able to play at 4k medium-high settings at reasonable frame rates with 10GB of VRAM in a decade? No - that would be unprecedented. But will you be able to play 1080p or 1440p at those types of settings with 10GB of VRAM? Almost undoubtedly (though shader performance is likely to force you to lower settings - but not the VRAM). And if you're expecting future-proofing to keep your GPU relevant at that kind of performance level for that kind of time, your expectations of what is possible are fundamentally flawed. The other parts of the GPU will be holding you back far more than the VRAM in that scenario. If the size of your framebuffer is making your five-year-old high-end GPU run at a spiky 10fps instead of, say, 34, does that matter at all? Unless the game in question is extremely slow-paced, you'd need to lower settings anyhow to get a reasonable framerate, which will then in all likelihood bring you below the 10GB limitation.

I'm all for future-proofing, and I absolutely hate the shortsighted hypermaterialism of the PC building scene - there's a reason I've kept my current GPU for five years - but adding $2-300 (high end GDDR chips cost somewhere in the realm of $20/GB) to the cost of a part to add something that in all likelihood won't add to its longevity at all is not smart future-proofing. If you're paying that to avoid one bottleneck just to be held back by another, you've overpaid for an unbalanced product.
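The cost estimate above is simple enough to check by hand, but a small sketch makes the assumptions explicit. The ~$20/GB figure is the rough estimate from the comment, the $700 base price is the 3080 10GB price quoted earlier in the thread, and the function name is invented for the example.

```python
def extra_vram_cost(extra_gb, usd_per_gb=20.0):
    """Rough chip cost of additional VRAM; usd_per_gb is the commenter's estimate, not a quoted price."""
    return extra_gb * usd_per_gb

base_price = 700.0                      # 3080 10GB price as quoted in the thread
added = extra_vram_cost(20 - 10)        # doubling 10 GB to 20 GB
print(f"extra chips: ${added:.0f}, or {added / base_price:.0%} of the 10GB card's price")
```

This counts memory chips only; any PCB, power delivery or margin changes would come on top, which is presumably where the upper end of the $200-300 range comes from.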

It's not exactly the most niche game either... I guess 'Enthusiast' only goes as far as the limits Nvidia PR set for you with each release? Curious ;) Alongside this, I want to stress again TWO previous generations had weaker cards with 11GB. They'd run this game better than a new release, most likely.

Since it's a well known game, we also know how the engine responds when VRAM is short. You get stutter, and it's not pretty. Been there done that way back on a 770 with measly 2GB. Same shit, same game, different day.

So sure, if all you care about is playing every console port and you never mod anything, 10GB will do you fine. But a 3070 will then probably do the same thing for you, won't it? Nvidia's cost cutting measure works both ways, really. We can do it too. Between a 3080 with 20GB (at whatever price point it gets) and a 3070 with 8GB, the 3080 10GB is in a very weird place. Add consoles and a big Navi chip with 16GB and it gets utterly strange, if not 'the odd one out'. A bit like the place where SLI is now. Writing's on the wall.

The balance is shifting and it's well known there is tremendous pressure on production lines for high end RAM. We're paying the price with reduced lifecycles on expensive product. That is the real thing happening here.

Radeon VII performance number ?

Funny that Radeon VII is faster than 1080 Ti and Titan XP while slower than 2070 Super, 2080 and 2080 Super there.