Saturday, September 19th 2020

NVIDIA Readies RTX 3060 8GB and RTX 3080 20GB Models

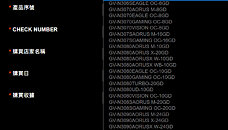

A GIGABYTE webpage meant for redeeming the RTX 30-series Watch Dogs Legion + GeForce NOW bundle lists the graphics cards eligible for the offer, including a large selection based on unannounced RTX 30-series GPUs. Among these are references to a "GeForce RTX 3060" with 8 GB of memory and, more interestingly, a 20 GB variant of the RTX 3080. The list also confirms the RTX 3070S with 16 GB of memory.

The RTX 3080, launched last week, comes with 10 GB of memory across a 320-bit memory interface, using 8 Gbit memory chips, while the RTX 3090 achieves its 24 GB by piggy-backing two of these chips per 32-bit channel (chips on either side of the PCB). It's conceivable that the RTX 3080 20 GB will adopt the same method. There is a vast price gap between the RTX 3080 10 GB and the RTX 3090, which NVIDIA could look to fill with the 20 GB variant of the RTX 3080. Whether you should wait for the 20 GB variant of the RTX 3080 or pick up the 10 GB variant right now will depend on the performance gap between the RTX 3080 and RTX 3090. We'll answer this question next week.
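The capacities above follow from simple arithmetic. The sketch below assumes, as the article describes, one 32-bit channel per memory chip position and 8 Gbit = 1 GB per chip; the function name is hypothetical, and the 20 GB case is the article's conjecture rather than a confirmed spec.

```python
def vram_capacity_gb(bus_width_bits, chip_density_gbit, chips_per_channel=1):
    """Total VRAM in GB, assuming each 32-bit channel carries chips_per_channel chips."""
    channels = bus_width_bits // 32          # one 32-bit channel per chip position
    chip_gb = chip_density_gbit / 8          # 8 Gbit per chip = 1 GB
    return channels * chips_per_channel * chip_gb


print(vram_capacity_gb(320, 8))      # RTX 3080: 10 channels x 1 chip
print(vram_capacity_gb(384, 8, 2))   # RTX 3090: 12 channels x 2 chips (both PCB sides)
print(vram_capacity_gb(320, 8, 2))   # hypothetical RTX 3080 20 GB using the same piggy-back layout
```

These print 10.0, 24.0 and 20.0. The same arithmetic shows that 16 Gbit chips on a single side of a 320-bit bus would also reach 20 GB; which route NVIDIA takes is not stated in the source.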

Source:

VideoCardz


157 Comments on NVIDIA Readies RTX 3060 8GB and RTX 3080 20GB Models

This is just a software overhaul (DirectX) with hardware acceleration being added in.

GPU memory consumption is primarily due to textures: textures are 'streamed' from GPU memory, so to speak, without the game having to pull them from the HDD/SSD/RAM, which is obviously slower. Other features such as AA also use it to store frames temporarily. Please note that the 4GB vs 8GB versions of the RX 480/580 are not a good test, as the 4GB model has slower RAM (1750MHz vs 2000MHz). At equal clocks, the cards would perform the same.
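The clock figures quoted above translate into bandwidth as follows. This is a rough sketch assuming a 256-bit bus on both RX 480/580 variants and that GDDR5 transfers 4 bits per pin per quoted memory clock; the function name is hypothetical.

```python
def gddr5_bandwidth_gbs(memory_clock_mhz, bus_width_bits=256):
    """Peak bandwidth in GB/s, assuming 4 transfers per pin per quoted GDDR5 clock."""
    data_rate_gbps = memory_clock_mhz * 4 / 1000   # per-pin data rate, e.g. 2000 MHz -> 8 Gbps
    return data_rate_gbps * bus_width_bits / 8      # bits -> bytes across the whole bus


bw_4gb = gddr5_bandwidth_gbs(1750)   # 4GB model: 7 Gbps per pin
bw_8gb = gddr5_bandwidth_gbs(2000)   # 8GB model: 8 Gbps per pin
print(f"{bw_4gb:.0f} vs {bw_8gb:.0f} GB/s, +{(bw_8gb / bw_4gb - 1) * 100:.1f}% for the 8GB card")
```

Under those assumptions the 8GB card has roughly 14% more memory bandwidth, which is why comparing the two variants mixes up capacity with bandwidth.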

I had a GeForce2 MX 64MB back in the day; compared to a GeForce2 MX 32MB there was zero difference, since the GPU wasn't powerful enough to run at the higher resolutions where the extra RAM would be useful. Even AMD's 4GB Fury can still cope just fine with everything you throw at it. It's just that at some point, the GPU itself won't be powerful enough to keep rendering frames within the desired framerate range.

www.techpowerup.com/268211/amd-declares-that-the-era-of-4gb-graphics-cards-is-over

They are running tests here on Ultra, and it's obvious that big textures require a lot of RAM. If a GPU can't store any more, the data is stored in your system RAM, which adds latency when fetching it. My RX 580 has 8GB of RAM, but it's still not powerful enough to run fully maxed out at 2560x1080, even if the game only uses 6GB of the 8GB in total. The GPU behind the memory has to be able to take advantage of it; otherwise it's just another "Oh, this one has more GHz/MB/MBps" decision.
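To put the system-RAM fallback in perspective, here is a back-of-the-envelope sketch comparing transfer times from VRAM and over PCIe. It assumes theoretical peak figures (256 GB/s for an RX 580 8GB; PCIe 3.0 x16 at 8 GT/s per lane with 128b/130b encoding) and a hypothetical 256 MB of spilled textures. It models bandwidth only and ignores latency, so real penalties will differ.

```python
# Assumed theoretical peak figures, not measurements.
VRAM_GBS = 8 * 256 / 8                    # RX 580 8GB: 8 Gbps per pin x 256-bit bus -> 256 GB/s
PCIE3_X16_GBS = 8 * 16 * (128 / 130) / 8  # 8 GT/s x 16 lanes, 128b/130b encoding -> ~15.75 GB/s


def transfer_ms(size_gb, bandwidth_gbs):
    """Milliseconds to move size_gb at a given peak bandwidth, ignoring latency and contention."""
    return size_gb / bandwidth_gbs * 1000


frame_budget_ms = 1000 / 60   # 60 fps target
spilled_gb = 0.256            # hypothetical: 256 MB of textures that no longer fit in VRAM

print(f"from VRAM:           {transfer_ms(spilled_gb, VRAM_GBS):.2f} ms")
print(f"over PCIe 3.0 x16:   {transfer_ms(spilled_gb, PCIE3_X16_GBS):.2f} ms")
print(f"60 fps frame budget: {frame_budget_ms:.2f} ms")
```

With these assumptions the spilled 256 MB takes about 1 ms from VRAM but roughly 16 ms over PCIe, which is nearly the entire 60 fps frame budget.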

www.vgamuseum.info/index.php/component/k2/item/989-sis-315

If I'm correct, this was the first card to put up to 128MB on a single VGA card, but it was in a way completely useless: it wasn't even faster than a regular GeForce2 MX. Nvidia also released the GeForce2 MX with both 32MB and 64MB, and the extra memory did pretty much nothing for gaming.

The GTX 980 with 4GB of VRAM is still a capable GPU even today, pretty much matching the RX 580 8GB in any DX11 game (Maxwell kinda sucks at DX12 and Vulkan).

Here is how the GTX 980 performs in FS 2020, a game that will fill any available VRAM.

Nvidia has had memory compression for ages; Ampere is already the 6th iteration of it (Turing being the 5th).

Here is the 2080 Ti using less VRAM than the 5700 XT and Radeon VII in FS 2020, even though the 2080 Ti is much more powerful than both.

At 4K, FS 2020 will try to eat as much VRAM as possible (>11GB), but that doesn't translate into real performance.

This generation, consoles will have 16GB, with probably 4-6GB for the system and 10-12GB-ish for the GPU. When the GPU in your cutting-edge PC has the same or less VRAM than your console dedicates to its GPU (10GB of GPU-optimized memory in the Xbox Series X), there's a problem, because you'll want extra headroom to make up for the fact that DirectStorage will not get as much use on PC as on Xbox, where it's an absolute given. On PC, it's an optional feature limited to the latest cards and to users with the fastest NVMe drives (which most people won't have), which means it won't be used for years to come. Look how long RTX adoption took, and is still taking.

So yeah. Having more VRAM makes your $700 investment last longer. Like 1080 Ti levels of lasting. Nvidia thinks they can convince enough people to buy more cards with less memory, which is why these early launch cards are going without the extra memory. It'll look fine right now; by the end of next year people will start feeling the pinch, but it won't be until 2022 that they really notice. If you buy your card and are fine replacing it by the time games are being fully designed for Xbox Series X and PS5 instead of Xbox One X and PS4, then buy the lower-memory cards and rock on.

I only buy a GPU once in a while, so I'll be waiting for the real cards to come. I don't want to get stuck having to buy a new card sooner than I'd like because I was impatient.

In all honesty, I've got a 980 4GB that can still game (at lower settings) on my second 1440p screen (it's only 60Hz, so it's usable), and it also handles VR just fine.

The RX 580 8GB? Sure, the VRAM doesn't run out, but the GPU can't deliver the performance needed for anything that benefits from the 8GB.

There are times when low-VRAM cards come out and it's just stupid to buy them (the 1060 3GB, which had a crap amount of RAM AND a weaker GPU than the 6GB version), and I wouldn't buy less than 8GB *now* in 2020. But just like with CPU cores, the genuine requirements are very, very slow to creep up: my view is that by the time 10GB is no longer enough (3-4 years?), I'll just upgrade my GPU anyway.

In the case of the RTX 30 series and all this fancy new shit like hardware-accelerated streaming from NVMe, I think VRAM requirements aren't going to be as harsh for a few years... In supported games you get a speed boost that prevents texture-streaming stutter, and at lower settings games will be coded for the average user anyway, who is still running a 1050 Ti 4GB on a basic SATA SSD.

Quite often the higher-VRAM versions have drawbacks, either slower VRAM or higher heat/wattage hurting overall performance... for a long, long time, the higher-VRAM versions were always crippled.

I see no issue with turning textures down from Ultra to High and upgrading twice as often (since I can afford to... I don't expect the 3080 20GB to be cost-efficient).

Pretty much any GPU you buy today, whether it's a 4, 8, 10, 12, 16, or even 20GB model, works the way it was intended: as a 1080p card, or as a 4K gaming card. Graphics vendors aren't stupid. And when games load up the VRAM, it's mostly due to caching, which overall brings something like a 1 to 3% performance increase over streaming the data from RAM or even disk.

The PS5's SSD is so fast that it could load a complete terrain in less than a second. There's no need for a GPU with huge chunks of memory anymore: they just portion out what they need and stream the rest off the SSD. And Nvidia is cheating. Their texture compression is so aggressive that there is a quality difference between the two brands, AMD and Nvidia. In my opinion AMD just looks better overall, and that might explain why AMD cards tend to be a tad slower than Nvidia's.

PS5, the new Xbox and DirectX are all just using their own branded version of the same concept: accelerate the shit out of decompressing the files, so we can bypass the limits previously imposed by mechanical hard drives.
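The decompression argument can be sketched as a simple throughput model: the drive delivers compressed data, but the game only gets it as fast as the decompressor can expand it. Everything here is an assumption for illustration: a 2:1 compression ratio, rough drive speeds (about 0.1 GB/s for an HDD, 0.55 GB/s for a SATA SSD, and Sony's quoted 5.5 GB/s raw for the PS5), and made-up decompressor throughputs. The function names are hypothetical.

```python
def effective_read_gbs(raw_gbs, compression_ratio, decompress_gbs):
    """Uncompressed GB/s reaching the game: the drive's compressed stream expands by
    compression_ratio, but never faster than the decompressor can output it."""
    return min(raw_gbs * compression_ratio, decompress_gbs)


def load_seconds(uncompressed_gb, raw_gbs, compression_ratio, decompress_gbs):
    return uncompressed_gb / effective_read_gbs(raw_gbs, compression_ratio, decompress_gbs)


# Hypothetical scenarios: (raw drive GB/s, compression ratio, decompressor output GB/s).
# The 1.5 GB/s "CPU" and 20 GB/s "hardware" decompressor figures are made up for illustration.
scenarios = {
    "HDD, CPU decompression": (0.1, 2.0, 1.5),
    "SATA SSD, CPU decompression": (0.55, 2.0, 1.5),
    "PS5-class SSD, CPU decompression": (5.5, 2.0, 1.5),
    "PS5-class SSD, hardware decompression": (5.5, 2.0, 20.0),
}

for name, (raw, ratio, decomp) in scenarios.items():
    print(f"{name}: {load_seconds(4.0, raw, ratio, decomp):.2f} s for 4 GB of assets")
```

With these made-up numbers, the fast drive only loads the 4 GB in under a second once decompression stops being the bottleneck, which is the point of offloading it to dedicated hardware.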

Lazy devs have people so confused and bamboozled, and NVIDIA is more than willing to create cards for this currently perceived need for large amounts of VRAM. They will make a lot more profit from this perceived need as well.

By the time more than 10-12GB of VRAM is needed, the GPUs themselves will be obsolete.

For 8K I can imagine we'd need more, but these are not 8K cards: the 3090 can scrape by with DLSS, but that's really running at a lower resolution anyway... oh, that means my 10GB will be fine for even longer, wooo.
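A quick sketch of why DLSS at 8K is "really running at a lower res": it assumes DLSS Ultra Performance renders at one third of the output resolution on each axis and that a colour buffer uses 4 bytes per pixel (RGBA8). It only sizes a single render target; textures, not framebuffers, make up most VRAM use, so treat this as an illustration of pixel scaling rather than a VRAM estimate.

```python
def render_target_mib(width, height, bytes_per_pixel=4):
    """Size of one colour buffer in MiB; 4 bytes per pixel assumes an RGBA8 format."""
    return width * height * bytes_per_pixel / 2**20


output_4k = (3840, 2160)
output_8k = (7680, 4320)
# Assumption: DLSS Ultra Performance renders at 1/3 of the output resolution per axis.
internal_8k = (output_8k[0] // 3, output_8k[1] // 3)

print("4K output buffer:", render_target_mib(*output_4k), "MiB")
print("8K output buffer:", render_target_mib(*output_8k), "MiB")
print("internal render for 8K DLSS:", internal_8k, render_target_mib(*internal_8k), "MiB")
```

An 8K buffer is four times the size of a 4K one, while the internal 2560x1440 render is a ninth of the 8K output.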

The 3070 16GB will be a joke of a card; the VRAM will be totally pointless unless they use it to speed up loading times in Fortnite or some other niche gimmick.

Old games don't set the norm, and this still doesn't explain why the two previous gens had more. Didn't that same game exist at the release of the 2080 Ti? Precisely.

If you expect less than 4-5 years of solid gaming out of this hardware, you're probably doing it wrong, too.

Sounds like lowering texture detail from Ultra to High is too hard for some people :D. Those exact same people would also complain about performance per dollar; imagine what a useless amount of VRAM would do to perf/USD...

That might be seen as an indication that a more powerful GPU might need more than 10GB of RAM, but then you need to remember that consoles are developed for 5-7-year life cycles, not 3-year ones like PC dGPUs. 10GB on the 3080 is going to be more than enough, even if you use it for more than three years. Besides, VRAM allocation (which is what all software reads as "VRAM use") is not the same as VRAM that's actually being used to render the game. Most games have aggressively opportunistic streaming systems that pre-load data into VRAM in case it's needed. The entire point of DirectStorage is to reduce the amount of unnecessarily loaded data, which then translates into a direct drop in "VRAM use". Sure, it also frees up more VRAM to be actually put to use (say, for even higher resolution textures), but the chances of that becoming a requirement for games in the next few years are essentially zero.
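The difference between VRAM allocation and the VRAM a frame actually touches can be shown with a toy model. This is a sketch, not how any particular engine or driver works: a hypothetical pool streams fixed 64 MB tiles, pre-loads a few tiles ahead "just in case", and evicts least-recently-used tiles only when its budget is full. Tile size, budgets and prefetch depth are all made-up parameters.

```python
from collections import OrderedDict

TILE_MB = 64  # hypothetical size of one streamed texture tile


class StreamingPool:
    """Toy opportunistic pool: keeps every loaded tile until the budget forces LRU eviction."""

    def __init__(self, budget_mb):
        self.budget_mb = budget_mb
        self.resident = OrderedDict()  # tile id -> size in MB, least recently used first

    def allocated_mb(self):
        return sum(self.resident.values())

    def request(self, tile):
        if tile in self.resident:
            self.resident.move_to_end(tile)  # refresh recency, no new allocation
            return
        while self.resident and self.allocated_mb() + TILE_MB > self.budget_mb:
            self.resident.popitem(last=False)  # evict the least recently used tile
        self.resident[tile] = TILE_MB


def simulate(budget_mb, frames=200, visible=4, prefetch=8):
    """Camera advances one tile per frame; `visible` tiles are sampled, `prefetch` more pre-loaded."""
    pool = StreamingPool(budget_mb)
    for frame in range(frames):
        for tile in range(frame, frame + visible + prefetch):
            pool.request(tile)
    needed_mb = visible * TILE_MB  # what a single frame actually renders from
    return pool.allocated_mb(), needed_mb


for budget in (6144, 10240):
    allocated, needed = simulate(budget)
    print(f"budget {budget} MB: reported 'VRAM use' {allocated} MB, used per frame {needed} MB")
```

In this model the reported allocation simply grows to whatever budget it is given, while each frame only samples 256 MB, which is why "VRAM use" readings say little about what a game strictly needs.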

Also, that whole statement about "I guarantee more games will use that extra VRAM much more quickly than start using DirectStorage, especially nvidia's custom add-on to it that requires explicit programming to use fully" does not compute. I mean, DirectStorage is an API, so obviously you need to put in the relevant API calls and program for it for it to work. That's how APIs work, and it has zero to do with "Nvidia's custom add-on to it": RTX IO is, from all we know, a straightforward implementation of DS. Anything else would be pretty stupid of them, given that DS is in the XSX and will as such be in most games made for that platform in the near future, including PC ports. For Nvidia to force additional programming on top of this would make no sense, and it would likely place them at a competitive disadvantage given the likelihood that AMD will be adding DS compatibility to their new GPUs as well...

Some people apparently see it as deeply problematic when a GPU that could otherwise deliver a cinematic ~24fps instead delivers 10 due to a VRAM limitation. Oh, I know, there have been cases where the FPS could have been higher (even in playable territory) if it weren't for the low amount of VRAM, but that's exceedingly rare. In the vast majority of cases, VRAM limitations kick in at a point when the GPU is already delivering sub-par performance and you need to lower settings anyway. But apparently that's hard for people to accept, as you say. More VRAM has for a decade or so been the no-benefit upsell of the GPU business. It's really about time people started seeing through that crap.

To stick with that time frame, the Fury X (4GB) is a great example today: despite its super-fast HBM and bandwidth, it sucks monkey balls in VRAM-intensive games. The card has lost performance over time. Substantially. Today it performs worse than the 980 Ti, and worse relative to it than it did at launch.

I am seeing the same thing here. We're looking at a very good perf jump this gen, while the VRAM is all over the place. Turing already cut some things back, and this gen we not only remained stagnant but actually lost VRAM relative to raw performance. I'm not sure about your crystal ball, but mine is showing me a generational jump similar to 2GB > 4GB, where the lower-VRAM cards are going to fall off faster. That specific jump also made us realize the 7970 with its 3GB was pretty damn future-proof for its day, remember... A 10GB card with performance well above the 2080 Ti, especially at 4K, should simply have more than that. I don't care what a PR dude from Nvidia thinks about it. I do care about the actual specs of the actual consoles launching and doing pseudo-4K with lots of internal scaling. The PC will be doing more than that, but makes do with slightly less VRAM? I don't follow that logic, and I'm not buying it either, especially not at a price tag of 700+.

To each his own. Let's revisit this in 4-5 years' time. All of this is even without considering the vast amount of other stuff people can do on a PC with their GPUs to tax VRAM further within the same performance bracket, most notably modding. Texture mods and added assets and effects will rapidly eat up those precious GBs. I like my GPUs to have the capacity for that freedom.

There you have it. Who decided PC dGPUs are developed for a 3-year life cycle? It certainly wasn't us. They last double that time without any hiccups whatsoever, and even then they hold resale value, especially the high end. I'll take 4-5 years if you don't mind. The 1080 I'm running now makes 4-5 just fine, and then some. The 780 Ti I had before did similar, and both still had life left in them.

Two GPU upgrades per console gen is utterly ridiculous and unnecessary, since we all know the real jumps happen with real console gen updates.

Nvidia's marketing is top-notch: of course they would never admit that VRAM is going to be a limitation in some regards, and they provide sensible arguments. But then again, they were also the same people who told everyone they didn't believe unified shaders were the future for high-performance GPUs, back when ATI first introduced them.

It won't do that at 1080p, nice diversion.

The 980, or any other 4GB card, isn't even included; you can guess why. You should have also read what they said:

The evidence that VRAM is a real limiting factor is everywhere; you just have to stop turning a blind eye to it.

- Xbox: 64MB > Xbox 360: 512MB > Xbox One/X: 8/12GB > Xbox Series S/X: 10/16GB (8/10GB fast RAM)

- PS: 2+1MB > PS2: 32+4MB > PS3: 256+256MB > PS4: 8GB (+256MB/1GB) > PS5: 16GB

In percentages:

- Xbox: +700% > +1500% > +25%

- PlayStation: +1100% > +1322% > +1550% > +93%
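The percentages above can be checked directly. A small sketch using the memory totals from the two lists, with the Xbox One to Series X step taken as 8GB to 10GB of fast RAM (which matches the +25% figure) and results truncated to whole percent, as the list does; the function name is hypothetical.

```python
def gen_on_gen_pct(sizes_mb):
    """Percentage increase from each generation to the next, truncated to whole percent."""
    return [int((new / old - 1) * 100) for old, new in zip(sizes_mb, sizes_mb[1:])]


# Totals in MB as listed above.
xbox = [64, 512, 8 * 1024, 10 * 1024]                               # Xbox, 360, One, Series X (fast RAM)
playstation = [2 + 1, 32 + 4, 256 + 256, 8 * 1024 + 256, 16 * 1024]  # PS1 .. PS5

print(gen_on_gen_pct(xbox))
print(gen_on_gen_pct(playstation))
```

This prints [700, 1500, 25] and [1100, 1322, 1550, 93], matching the figures above; the last PlayStation step is about 93.9% before truncation.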

1. How much gen-on-gen improvement there is in each camp

2. How much VRAM he can eat out of a 24GB card

3. What it takes to finally make him cry

Also, you can see the 2060 6GB being faster than the 1080 8GB there, even at 4K Ultra. Nvidia improves its memory compression algorithm every generation, so 8GB of VRAM on Ampere does not behave the same way as 8GB on Turing or Pascal (AMD is even further off).

Just compare VRAM usage between the 2080 Ti and the 3080: the 3080 always uses less VRAM. That's how Nvidia's memory compression works...
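For readers wondering what "memory compression" means here, the sketch below is a toy version of lossless delta encoding, the general idea behind GPU delta colour compression: store one value per block in full and the rest as small differences from it. It is not Nvidia's actual algorithm; the 16-value block and bit layout are assumptions, and it only counts how many bits a block would need, not how a driver allocates VRAM.

```python
def compressed_bits(block, bits_per_value=8):
    """Toy delta encoding: first value stored in full, the rest as signed differences
    from it using the fewest bits that fit every delta. Falls back to raw if no saving."""
    base = block[0]
    deltas = [v - base for v in block[1:]]
    max_mag = max((abs(d) for d in deltas), default=0)
    delta_bits = max_mag.bit_length() + 1    # +1 for the sign bit
    packed = bits_per_value + len(deltas) * delta_bits
    raw = len(block) * bits_per_value
    return min(packed, raw)


gradient = list(range(100, 116))   # smooth 16-pixel ramp
flat = [50] * 16                   # uniform colour, e.g. clear sky
edge = [0, 200] + [0] * 14         # one sharp edge forces wide deltas

for name, block in [("gradient", gradient), ("flat", flat), ("edge", edge)]:
    print(f"{name}: {compressed_bits(block)} of {len(block) * 8} bits")
```

Smooth or flat blocks shrink substantially (83 and 23 of 128 bits here), while the block with a sharp edge falls back to raw storage, so the savings depend heavily on content.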

I would rather have a hypothetical 3080 Ti with 12GB of VRAM on a 384-bit bus than 20GB on a 320-bit bus: bandwidth over useless capacity any day. At least higher VRAM bandwidth will give higher performance in today's games immediately, not 5 years down the line when these 3080s can be had for 200 USD...
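The bandwidth side of that trade-off is easy to quantify. A minimal sketch, assuming the hypothetical 384-bit card keeps the RTX 3080's 19 Gbps GDDR6X; the function name is hypothetical, and peak bandwidth does not translate one-to-one into frame rate.

```python
def peak_bandwidth_gbs(data_rate_gbps, bus_width_bits):
    """Peak memory bandwidth: per-pin data rate times bus width, converted from bits to bytes."""
    return data_rate_gbps * bus_width_bits / 8


rate = 19.0                               # RTX 3080 GDDR6X data rate, assumed unchanged
bw_320 = peak_bandwidth_gbs(rate, 320)    # 3080 10GB, and a 20GB variant on the same bus
bw_384 = peak_bandwidth_gbs(rate, 384)    # hypothetical 3080 Ti 12GB
print(f"320-bit: {bw_320:.0f} GB/s, 384-bit: {bw_384:.0f} GB/s (+{(bw_384 / bw_320 - 1) * 100:.0f}%)")
```

Under that assumption the wider bus gives 912 GB/s versus 760 GB/s, about 20% more, while a 20GB card on the same 320-bit bus gains capacity but no bandwidth.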