Saturday, October 31st 2020

Intel Storms into 1080p Gaming and Creator Markets with Iris Xe MAX Mobile GPUs

Intel today launched its Iris Xe MAX discrete graphics processor for thin-and-light notebooks powered by 11th Gen Core "Tiger Lake" processors. Dell, Acer, and ASUS are launch partners, debuting the chip on their Inspiron 15 7000, Swift 3x, and VivoBook TP470, respectively. The Iris Xe MAX is based on the Xe LP graphics architecture, which targets compact-scale implementations of the Xe SIMD for mainstream consumer graphics. Its most interesting features are Intel Deep Link and a powerful media acceleration engine with hardware encode acceleration for popular video formats, including HEVC, which should make the Iris Xe MAX a formidable solution for video content production on the move.

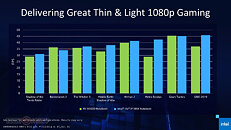

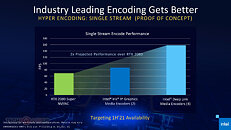

The Iris Xe MAX is a fully discrete GPU built on Intel's 10 nm SuperFin silicon fabrication process. It features a dedicated LPDDR4X memory interface with 4 GB of memory at 68 GB/s of bandwidth, and uses PCI-Express 4.0 x4 to talk to the processor, but those are just the physical layers. On top of these sits what Intel calls Deep Link, an all-encompassing hardware abstraction layer that not only enables explicit multi-GPU with the Xe LP iGPU of "Tiger Lake" processors, but also certain implicit multi-GPU functions, such as fine-grained division of labor between the dGPU and iGPU so that workloads are split appropriately between the two. Intel referred to this as GameDev Boost, and we detailed it in an older article.

Deep Link goes beyond the 3D graphics rendering domain, and also combines the Xe Media Multi-Format Encoders of the iGPU and dGPU to scale video encoding performance linearly. Intel claims that an Xe iGPU+dGPU combination offers more than double the encoding performance of NVENC on a GeForce RTX 2080 graphics card. All this is possible because a common software framework ties together the media encoding capabilities of the "Tiger Lake" CPU and the Iris Xe MAX GPU, ensuring that the solution is more than the sum of its parts. Intel refers to this as Hyper Encode.

Deep Link also scales up AI deep-learning performance between "Tiger Lake" processors and the Xe MAX dGPU. This is because the chip has a DL Boost DP4a accelerator. As of today, Intel has onboarded major brands in the media encoding software ecosystem to support Deep Link, including HandBrake, OBS, XSplit, Topaz Gigapixel AI, Huya, and Joyy, and is working with Blender, CyberLink, Fluendo, and Magix for full support in the coming months.

Under the hood, the Iris Xe MAX, as we mentioned earlier, is built on the 10 nm SuperFin process. This is a brand new piece of silicon, and not a "Tiger Lake" with its CPU component disabled, as its specs might otherwise suggest. It features 96 Xe execution units (EUs), translating to 768 programmable shaders. It also has 96 TMUs and 24 ROPs. It features an LPDDR4X memory interface with 68 GB/s of memory bandwidth. The GPU is clocked at 1.65 GHz. It talks to "Tiger Lake" processors over a common PCI-Express 4.0 x4 bus. Notebooks with Iris Xe MAX have both their iGPUs and dGPUs enabled to leverage Deep Link.

Media and AI only paint half the picture, the other half being gaming. Intel is taking a swing at the 1080p mainstream gaming segment, with the Iris Xe MAX offering over 30 FPS (playable) in AAA games at 1080p. It trades blows with notebooks that use the NVIDIA GeForce MX450 discrete GPU. We reckon that most e-sports titles should be playable at over 45 FPS at 1080p. Over the coming months, one should expect Intel and its ISVs to invest more in GameDev Boost, which should increase performance further. The Xe LP architecture features DirectX 12 support, including Variable Rate Shading (tier-1).

But what about other mobile platforms, and desktop, you ask? The Iris Xe MAX is debuting exclusively with thin-and-light notebooks based on 11th Gen Core "Tiger Lake" processors, but Intel plans to develop desktop add-in cards with Iris Xe MAX GPUs sometime in the first half of 2021. We predict that, if priced right, this card could sell in droves to the creator community, who could leverage its media encoding and AI DNN acceleration capabilities. It should also appeal to the HEDT and mission-critical workstation crowds that require discrete graphics, since it keeps the number of driver and software vendors in the system to a minimum.

Update Nov 1st: Intel clarified that the desktop Iris Xe MAX add-in card will be sold exclusively to OEMs for pre-builts.
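As a rough sanity check on the specifications quoted above, the shader count, a theoretical peak FP32 figure, and the memory bandwidth can be derived with simple arithmetic. The sketch below is only a back-of-the-envelope illustration: the 8 ALUs per EU is implied by the article's 768/96 ratio, while the 128-bit bus width, the LPDDR4X-4266 data rate, and the "2 FLOPs per ALU per clock" convention are assumptions that the article does not state.

```python
# Back-of-the-envelope check of the Iris Xe MAX figures quoted in the article.
# Values marked "assumption" are NOT given in the article.

EXECUTION_UNITS = 96          # from the article
CLOCK_GHZ = 1.65              # from the article
ALUS_PER_EU = 8               # assumption: implied by 768 shaders / 96 EUs
FLOPS_PER_ALU_PER_CLOCK = 2   # assumption: one fused multiply-add counted as 2 FLOPs
BUS_WIDTH_BITS = 128          # assumption: memory bus width
DATA_RATE_MTPS = 4266         # assumption: LPDDR4X-4266, mega-transfers per second


def shader_count(eus: int, alus_per_eu: int) -> int:
    """Programmable shaders = execution units x ALUs per unit."""
    return eus * alus_per_eu


def peak_fp32_tflops(shaders: int, clock_ghz: float, flops_per_clock: int) -> float:
    """Theoretical peak throughput in TFLOPS (not a measured result)."""
    return shaders * flops_per_clock * clock_ghz / 1000


def memory_bandwidth_gbs(bus_width_bits: int, data_rate_mtps: int) -> float:
    """Bytes per transfer x transfers per second, expressed in GB/s."""
    return bus_width_bits / 8 * data_rate_mtps / 1000


if __name__ == "__main__":
    shaders = shader_count(EXECUTION_UNITS, ALUS_PER_EU)
    print(f"Shaders:          {shaders}")
    print(f"Peak FP32:        {peak_fp32_tflops(shaders, CLOCK_GHZ, FLOPS_PER_ALU_PER_CLOCK):.2f} TFLOPS")
    print(f"Memory bandwidth: {memory_bandwidth_gbs(BUS_WIDTH_BITS, DATA_RATE_MTPS):.1f} GB/s")
```

Under these assumptions the bandwidth works out to roughly the 68 GB/s quoted in the article; the peak FP32 number is a theoretical ceiling and says nothing about real-world game performance.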

The complete press-deck follows.


74 Comments on Intel Storms into 1080p Gaming and Creator Markets with Iris Xe MAX Mobile GPUs

To be impressive, this would have to beat the GTX 1650 in mobile.

Can't find any mention of which codecs are supported for encoding, beyond H.265.

But I guess OEMs are happy as Intel will likely give these chips away practically for free to claim design wins.

aka have this CPU in your system and let the GPU part of it do the streaming work?

As we've discussed under the previous underwhelming press release, that's the maximum capability of current-gen Iris Xe. If Xe MAX can only keep up with the MX350, which is actively being phased out either by underclocked/TDP-capped 1650s or by less powerful but more efficient Vega iGPs on 4000-series Ryzen, it can only mean that competitive Intel dGPUs aren't even close to being ready. The specs and performance alone show clearly that it's an overclocked G7 iGPU, only modified to have its own framebuffer.

AMD and Nvidia are focusing on cards that cost over $200-$300, leaving the low-end market to older models, APUs and integrated graphics. But new and cheap graphics cards are needed in HTPCs, for example. An older CPU without integrated graphics could use a discrete Intel graphics card that costs less than $100 and offers QuickSync and hardware support for all modern video codecs. If AMD and Nvidia start ignoring the market of cards under $100, Intel might have a chance to sell some more GPUs to consumers.

Totally worthless bench, they've wasted a dozen slides and our time because they still haven't got anything here.

Xe is thus far going nowhere. Is this a start? They are not really competing with anything here that is more than a glorified IGP. We've been there with Broadwell already... and that was done with just a simple i7 CPU!

At the moment they are about on par with ten-year-old tech.

Still, it is a small edge (feature-wise) Intel has for now, and if they're able to go further with a SAM-like equivalent, they could potentially squeeze out a bit more performance that way. That said, it'll be a while until they can sufficiently catch up in actual performance, unless AMD or NVIDIA trips up hard.