Saturday, October 31st 2020

Intel Storms into 1080p Gaming and Creator Markets with Iris Xe MAX Mobile GPUs

Intel today launched its Iris Xe MAX discrete graphics processor for thin-and-light notebooks powered by 11th Gen Core "Tiger Lake" processors. Dell, Acer, and ASUS are launch partners, debuting the chip on their Inspiron 15 7000, Swift 3x, and VivoBook TP470, respectively. The Iris Xe MAX is based on the Xe LP graphics architecture, which targets compact-scale implementations of the Xe SIMD for mainstream consumer graphics. Its most interesting features are Intel Deep Link and a powerful media acceleration engine with hardware encode acceleration for popular video formats, including HEVC, which should make the Iris Xe MAX a formidable video content production solution on the move.

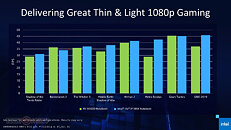

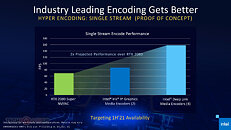

The Iris Xe MAX is a fully discrete GPU built on Intel's 10 nm SuperFin silicon fabrication process. It features a dedicated LPDDR4X memory interface with 4 GB of memory at 68 GB/s of bandwidth, and uses PCI-Express 4.0 x4 to talk to the processor, but those are just the physical layers. On top of these is what Intel calls Deep Link, an all-encompassing hardware abstraction layer that not only enables explicit multi-GPU with the Xe LP iGPU of "Tiger Lake" processors, but also certain implicit multi-GPU functions, such as fine-grained division of labor between the dGPU and iGPU to ensure that the right kind of workload is split between the two. Intel referred to this as GameDev Boost, and we detailed it in an older article.

Deep Link goes beyond the 3D graphics rendering domain, and also augments the Xe Media Multi-Format Encoders of the iGPU and dGPU to linearly scale video encoding performance. Intel claims that a Xe iGPU+dGPU combination offers more than double the encoding performance of NVENC on a GeForce RTX 2080 graphics card. All this is possible because a common software framework ties together the media encoding capabilities of the "Tiger Lake" CPU and Iris Xe MAX GPU, ensuring that the solution is more than the sum of its parts. Intel refers to this as Hyper Encode.

Deep Link also scales up AI deep-learning performance between "Tiger Lake" processors and the Xe MAX dGPU. This is because the chip has a DLBoost DP4a accelerator. As of today, Intel has onboarded major brands in the media encoding software ecosystem to support Deep Link, including HandBrake, OBS, XSplit, Topaz Gigapixel AI, Huya, and Joyy, and is working with Blender, Cyberlink, Fluendo, and Magix for full support in the coming months.

Under the hood, the Iris Xe MAX, as we mentioned earlier, is built on the 10 nm SuperFin process. This is a brand new piece of silicon, and not a "Tiger Lake" with its CPU component disabled, as its specs might otherwise suggest. It features 96 Xe execution units (EUs), translating to 768 programmable shaders. It also has 96 TMUs and 24 ROPs. It features an LPDDR4X memory interface with 68 GB/s of memory bandwidth. The GPU is clocked at 1.65 GHz. It talks to "Tiger Lake" processors over a common PCI-Express 4.0 x4 bus. Notebooks with Iris Xe MAX have both their iGPUs and dGPUs enabled to leverage Deep Link.

Media and AI only paint half the picture, the other half being gaming. Intel is taking a swing at the 1080p mainstream gaming segment, with the Iris Xe MAX offering over 30 FPS (playable) in AAA games at 1080p. It trades blows with notebooks that use the NVIDIA GeForce MX450 discrete GPU. We reckon that most e-sports titles should be playable at over 45 FPS at 1080p. Over the coming months, one should expect Intel and its ISVs to invest more in Game Boost, which should increase performance further. The Xe LP architecture features DirectX 12 support, including Variable Rate Shading (tier-1).

But what about other mobile platforms, and desktop, you ask? The Iris Xe MAX is debuting exclusively with thin-and-light notebooks based on 11th Gen Core "Tiger Lake" processors, but Intel has plans to develop desktop add-in cards with Iris Xe MAX GPUs sometime in the first half of 2021. We predict that if priced right, this card could sell in droves to the creator community, who could leverage the card's media encoding and AI DNN acceleration capabilities. It should also appeal to the HEDT and mission-critical workstation crowds that require discrete graphics, as they minimize their software sources.

Update Nov 1st: Intel clarified that the desktop Iris Xe MAX add-in card will be sold exclusively to OEMs for pre-builts.
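For readers who want to sanity-check the quoted specifications, the shader count and clock speed can be tied together with some quick arithmetic. The sketch below uses only the figures from the article (96 EUs, 768 shaders, 1.65 GHz). The throughput formula is our own assumption, not something Intel stated here: it counts one fused multiply-add per shader per clock as two floating-point operations, which is the usual convention for theoretical peak numbers.

```python
# Figures quoted in the article for the Iris Xe MAX.
EXECUTION_UNITS = 96
SHADERS = 768
CLOCK_GHZ = 1.65

# Derived from the article's own numbers: 768 shaders / 96 EUs.
ALUS_PER_EU = SHADERS // EXECUTION_UNITS

# Assumption (not from the article): each shader retires one fused
# multiply-add per clock, conventionally counted as 2 FLOPs.
FLOPS_PER_SHADER_PER_CLOCK = 2


def peak_fp32_tflops(shaders: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS under the FMA assumption."""
    return shaders * FLOPS_PER_SHADER_PER_CLOCK * clock_ghz / 1000.0


if __name__ == "__main__":
    print(f"ALUs per EU: {ALUS_PER_EU}")
    print(f"Peak FP32: {peak_fp32_tflops(SHADERS, CLOCK_GHZ):.2f} TFLOPS")
```

Under these assumptions the chip works out to 8 ALUs per EU and roughly 2.5 TFLOPS of theoretical FP32 throughput. This is a paper figure only and says nothing about sustained clocks or real-world game performance.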

The complete press-deck follows.


74 Comments on Intel Storms into 1080p Gaming and Creator Markets with Iris Xe MAX Mobile GPUs

What's the point of a 94 Whr battery if you're not gonna use it?

Fact is, a mobile CPU + decent IGP under load with a good battery can last 3-4 hrs playing games when properly configured. I can get nearly 60 FPS out of a 25-watt Ryzen 4800U in GTA V at 720p, yet in a similar test with, say, a 1660 Ti you get about 15-20. That said, maybe the use case doesn't apply to you. But it does apply to me, and at this point, with each new generation, battery performance gets worse and worse.

So if the Intel GPU is competitive and low-power enough, it's possible to get a good on-the-go gaming experience on battery power while still getting 4-5 hrs of use when paired with a larger battery.

So it really comes down to how much power this thing will use. If it's below 30 watts, it avoids the throttling issue, and that's a win for me. Hopefully future RDNA-based IGPs from AMD are coming soon.

It's for thin and lights, so the single most important metric is performance/Watt.

I skimmed all those slides, and didn't see a single mention.

Let's, just for one second, assume it has competitive performance/Watt - and I very much doubt that, because if it did Intel would be shouting how great their efficiency was from the rooftops - how much does the damn thing cost?

Fact is you're coming up with a configuration that I don't really care about, and it should tell you something about the end user. I'm not going to run some easy to run game, GTA V, on battery at 720p.

I do use the 94Whr battery actually, depends on what kind of laptop it is. As long as the machine is not overly heavy, it will come in handy. Though it's more of a thing with gaming and workstation laptops that have 240W chargers.

Furthermore, if you look at the machines in the slides, you will see convertible laptops and a Swift. Those are not gaming laptops, and when you want to game with them, you will probably want to get the full performance out of them by plugging them into the wall... or you'll not only get worse performance, but also run out of battery, because your thin convertible doesn't come with a 94Whr battery.

I was waiting for the bit at the end that said, But wait, there's more.

We'll even throw in six FREE steak knives. :laugh:

I can't believe I am saying this, but Intel, of all people, can't seem to comprehend that they need mindshare. You simply can't enter this space with the lowest imaginable tier of performance. This will seem like a joke to people, and by the time they scrape together something that's higher performance, it may as well be over for them.

It's basically the same speed as the Tiger Lake iGPU, but gives added flexibility and speed for those content creation workloads.

"Storms into the market" LMAO

www.anandtech.com/show/15846/jim-keller-resigns-from-intel-effective-immediately

He resigned due to pretty much personal, and probably health, issues.

And I reckon that players of such titles won't be satisfied with that performance. I really don't think 96 EUs at 1.65 GHz will be enough to "storm into" 1080p gaming, especially if the chips are only sold to laptop manufacturers and OEMs. Don't get me wrong, I'd be happy if AMD and NVIDIA had some competition in the gaming GPU market - but I can't see it happening just yet.

Bad news for NV MX series though.

I think that most are too focused on the gaming aspects of this chip. While yes, you can game on this and its gaming performance is comparable to an MX350/GTX1050, that's not the main selling point of DG1. You don't even buy a laptop with an MX350 for gaming, do you? You mainly want it to improve content creation. Case in point, the three laptops it launches in. Not meant for gaming whatsoever.

The combined power of the Iris Xe and Iris Xe Max is nothing to be scoffed at considering the power envelope. FP16 => 8TFLOPS. That's GTX 1650m level.

I don't know if anybody here uses their computer for work but Gigapixel AI acceleration, video encoding, ... these things really matter for content creators.

In this multi-stream video encoding test, it beat an RTX2080 & i9-10980HK combination. Can you imagine a puny lightweight Acer Swift 3 laptop beating a >€2000 heavy gaming laptop in any task? I would say mission accomplished.

We're going to have to wait another year before Intel launches their actual gaming product, DG2.