Wednesday, May 19th 2021

NVIDIA Adds DLSS Support To 9 New Games Including VR Titles

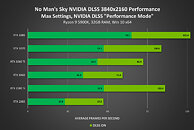

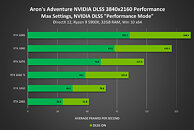

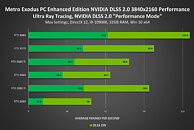

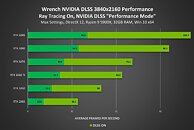

NVIDIA DLSS adoption continues at a rapid pace, with a further 9 titles adding the game-changing, performance-accelerating, AI and Tensor Core-powered GeForce RTX technology. This follows the addition of DLSS to 5 games last month, and the launch of Metro Exodus PC Enhanced Edition a fortnight ago. This month, DLSS comes to No Man's Sky, AMID EVIL, Aron's Adventure, Everspace 2, Redout: Space Assault, Scavengers, and Wrench. And for the first time, DLSS comes to Virtual Reality headsets in No Man's Sky, Into The Radius, and Wrench.

Enabling NVIDIA DLSS in each game greatly accelerates frame rates, giving you smoother gameplay and the headroom to enable higher-quality effects and rendering resolutions, as well as ray tracing in AMID EVIL, Redout: Space Assault, and Wrench. For gamers, only GeForce RTX GPUs feature the Tensor Cores that power DLSS, and with DLSS now available in 50 titles and counting, GeForce RTX offers the fastest frame rates in leading triple-A games and indie darlings.

Source:

NVIDIA

Complete Games List

- AMID EVIL

- Aron's Adventure

- Everspace 2

- Metro Exodus PC Enhanced Edition

- No Man's Sky

- Redout: Space Assault

- Scavengers

- Wrench

- Into The Radius VR

49 Comments on NVIDIA Adds DLSS Support To 9 New Games Including VR Titles

What was so great about Pascal? Only the 1080 Ti was truly great in my eyes, and today it's not even considered mid-end tho. The 1070/1080 was/is beaten by the RTX 2060 6GB, especially in DLSS-supported titles.

My 980 Ti with custom firmware performed at 1070 Ti/1080 level, with 1620 MHz 3D clocks. I did not even consider Pascal when it first came out because of this.

My 1080 Ti performed only at 2070 level overall and lacked RTX features. The 3080 was a night and day upgrade. Biggest in many years.

I don't use RT ever. Or actually I do in Metro Exodus EE, because it's needed, but on the lowest setting, with all other settings maxed out (aka ultra) + DLSS Quality for 150-200 fps gameplay at 1440p. Looks sharp and crisp. If I disable DLSS, fps drops to 85-120 or so. Huge difference on a 1440p/165 Hz monitor.
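Those figures translate into a rough uplift percentage. A minimal sketch of the arithmetic, using only the ranges quoted in the post (150-200 fps with DLSS Quality, 85-120 fps without); pairing the low ends and the high ends of the two ranges is an assumption, since the post gives ranges rather than matched measurements:

```python
def uplift_percent(fps_with: float, fps_without: float) -> float:
    """Percentage frame-rate gain of fps_with over fps_without."""
    return (fps_with / fps_without - 1.0) * 100.0


# Ranges quoted in the post; pairing low-with-low and high-with-high is an assumption.
gains = [uplift_percent(150, 85), uplift_percent(200, 120)]
print(f"roughly {min(gains):.0f}% to {max(gains):.0f}% faster with DLSS")
```

This prints `roughly 67% to 76% faster with DLSS`, broadly in line with the "around 75%" figure for DLSS Quality mentioned later in the thread, though one user's numbers in one game are not a benchmark.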

Some people like RT tho, and DLSS makes it usable. RT performance is beyond crap on AMD cards with no FSR to boost performance. So yeah, RT on AMD cards is still pretty much a NO-GO.

If FSR does work on those (and it seems it will), you may see them in a totally different light in comparison to 2000 and 3000 series.

DLSS 3.0 should work in all games that have TAA, which is a lot.

Also, with native support in Unreal Engine and Unity, most AAA games are pretty much covered going forward.

AMD are years behind on both RT and this. FSR is not going to come out and make 5 year old GPUs relevant again.

AMD has talked about FSR for a long time now and shown nothing. Why do you think that is? Talk is cheap.

It doesn't even have to give as big a performance boost as you claim DLSS does. All FSR needs is easy implementation in any given game, which it will have, and being open source, it will give all available cards a performance boost regardless. Even if FSR gave half the performance gain DLSS can, it would still be a win for a huge number of users.

Well I'm just tired of hearing AMD talk about this feature without showing anything at all. Talk is cheap.

If you are tired, then lie down and close your eyes for a minute. How can you show something that's coming out next month? People are just excited about seeing this actually happen. What's wrong with that? DLSS is a great feature, no doubt about it, and everybody knows these facts, but there are no such facts for FSR yet, and your claims about it are bold.

If AMD were ANYWHERE NEAR a release date, why not show a few glimpses of the tech? Not promising if you ask me. So yeah, expect years before implementation is widespread and works. We can revive this thread when FSR vs DLSS comparisons are out in 6-12 months. Hopefully. If you ever see a game featuring both DLSS and FSR, that is. If not, around a 75% gain should be the goal, which is what DLSS Quality mode delivers.

An open standard is great, but I highly doubt FSR will be able to deliver what DLSS already delivers. Again, I will be amazed if AMD does that. And then an AMD GPU is once again up for consideration next time.

AMD said June, so if they are saying it's going to be released in June, why would I doubt that? If NV said DLSS 2.1 is releasing in July, would you say 'nowhere near' as well if they didn't show anything?

To be fair, I'm excited for DLSS 2.1 to show what it can do. Honestly, it might be a killer, but I will refrain from any speculation or guesses.

If anything, it's AMD's talk that may turn out to be cheap, not people being excited about it and sharing thoughts.

DLSS 3.0 is what is going to be good; all games with TAA support will be able to use DLSS.

Nvidia don't talk about DLSS, they act. They released 2.0 out of nowhere and same with 2.1. They TALK when it's FINISHED. AMD talk first.

Nvidia does not like to talk about DLSS much without mentioning RT too. DLSS was supposed to be a feature to make RT usable... most people use DLSS to boost performance immensely with RT disabled instead.

DLSS works great as AA on top of boosting performance too. Jaggies go away. Text and textures sharpen.

www.rockpapershotgun.com/outriders-dlss-performance

So yeah, if AMD can bring anything that's even remotely close, I will be amazed. I guess we will find out in some months, maybe... :laugh:

I DON'T HATE AMD. I have a full AMD RIG in my house - I just HATE when people TALK. ACT PLEASE.

Is it great that DLSS boosts frame rates? Yes.

But is it enjoyable as a gamer? No. For me there are more negatives than positives, especially in motion: when you stop and move, it leaves a trail from past frames, and you see a filter on the frame that makes it look fuzzy and low resolution.

BTW, the claim that TAA support automatically means DLSS 3.0 is fake news, or at least wishful thinking. Nothing has been confirmed by NV yet.

NV said they were going to release DLSS 2.0, and that it had been in the works for a while before its release. Not shocking, though, considering how DLSS 1.0 was received.

What are you on about? We're just talking and sharing info. Isn't that right, @las?

For instance, I had no idea DLSS 2.1 was already in play here and that it has a dedicated VR feature.

7-month-old article. Again, nothing important for most people, since version 2.0 has most things covered.

I never "praised" 2.1 :D I have no use for it. I only have experience with 1.0, which sucked, and especially 2.0, which is great.

The first time I tried DLSS was in OG Metro Exodus, I think. Disabled it instantly. Blurry. DLSS 2.0 opened my eyes. It's an amazing tech when implemented right.

I can see that I sound like an Nvidia fanboy. I simply look forward to seeing FSR, and I'm tired of reading rumours about it. I want to see what they can do without dedicated hardware. I said that if they can match or even get close to what DLSS 2.0 can do, I will be amazed.

I just hope FSR support will take off faster than DLSS did

Thanks for sharing the article :) btw.

Too bad it's just one game. I'll dig in deeper for changes vs DLSS 2.0.

There's nothing wrong with being a fan of a feature, or anything for that matter. I wouldn't use "fanboy" in your case, although some people here might take it that way.

Frankly, I'm amazed AMD is releasing it regardless of how good or bad it will be. I thought this would take ages. They have pulled out all the stops to catch up.

Sort of the place where RDNA2 is right now, as well.

We're quite the same then, but this is a forum, which is meant for talking :)

As much as Nvidia is ready to pre-empt and frontier with new technology, they're also the proprietary bosses that we never like to see. It's when DLSS can move to mainstream and GPU-agnostic that we can start cheering universally, IMHO. I'm totally unimpressed when companies can fine-tune a single title with manual work. Whoop-de-doo, tons of hours went into making something nice, except you're done looking at it after a tiny fraction of said hours. That math doesn't check out and won't survive the market.

Nvidia's does the entire screen at once, whereas AMD's can leave, say, Miranda's ass in Mass Effect in full 4K, while anything in motion or in the far distance could be dropped to 720p.

Both approaches have their merits, and I look forward to seeing both polished results fight it out. It's rare for a technology to give us better performance, and not less.

On a side note: 720p? Does that apply to all resolutions, or are you talking specifically about 1080p dropping to 720p?

Custom 980 Tis beat custom 1070s out of the box. Maxwell overclocked way better than Pascal overall. Maxwell had a lot left in the tank. Pascal did not. GPU Boost worked much better in Pascal, so headroom was lower.

GM200 especially had insane OC potential and headroom. Custom versions delivered like 15-20% more performance than reference, and you could easily gain an additional 15-20% more.

There was a night and day difference between a reference 980 Ti and a custom card with max OC. Nvidia gimped the reference card a lot. Reference cards still hit around 1450 MHz post-OC but could get noisy; custom cards were much cooler and quieter post-OC. The 980 Ti reference only ran around 1200 MHz 3D clocks at stock. That's like 300-400 MHz lower than it potentially could.

At 1400-1450 MHz you were beating the 1070 with ease. At 1550-1600 MHz you were around 1080 level, and 6GB vs 8GB never really meant anything back then; the 980 Ti had a 384-bit bus after all, the 1070/1080 only 256-bit. Today 6 vs 8 might matter, but none of these cards can max out games at 1440p anyway, so the VRAM requirement won't be as high. Pretty much no games break 6GB usage at 1440p maxed out today. Only modded ones.
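The bus-width point is really about memory bandwidth. As a rough sketch, theoretical peak bandwidth is the bus width in bytes times the per-pin data rate. The data rates below are reference-card figures that do not appear in the post; treat them as assumptions, since custom or memory-overclocked cards differ:

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s: (bits / 8) bytes * Gbps per pin."""
    return bus_width_bits / 8 * data_rate_gbps


# Assumed reference-card memory data rates (Gbps per pin), not taken from the post.
cards = {
    "GTX 980 Ti (384-bit GDDR5)": (384, 7.0),
    "GTX 1070 (256-bit GDDR5)": (256, 8.0),
    "GTX 1080 (256-bit GDDR5X)": (256, 10.0),
}

for name, (bus, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gbs(bus, rate):.0f} GB/s")
```

On those assumptions the wider bus gives the 980 Ti 336 GB/s of raw bandwidth versus 256 GB/s for the 1070 and 320 GB/s for the 1080; effective bandwidth also depends on factors this sketch ignores, such as memory compression.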

The 1080 Ti is the only Pascal card I remember as being truly great. Yet I would barely consider it mid-end today.

I AM NOT SAYING the 1080 is a bad card, I'm just saying it's nothing spectacular. Maxwell was great too, and IMO there was not much difference between Maxwell and Pascal.

The 970 was insane value even with the "3.5GB". It still holds up very well today in 1080p gaming. It was almost too close to the 980. Nvidia has separated the x70 and x80 more since the 900 series as a result (I guess, because the 970 sold like crazy compared to the 980).

The best GPUs in the last 10 years are probably the 980 Ti, 1080 Ti, 7970 and 7950. Random order. All of them aged very well and/or overclocked a lot.

Hell, the 7970 was released like 3 times: 7970 -> 7970 GHz Edition -> 280X

I remember when Cata 12.11 hit, Tahiti really took off then.

7970 @ 1200/1600 was my last AMD GPU.

Long post to say you actually mostly agree ;)

Topic seems to be each other's opinions and who is right.

Any more insults/jabs will be dealt with.

Now, stay on the topic.

Thank You.

4K high-refresh gaming is becoming more accessible now thanks to DLSS, so much so that even a 3060 Ti can get good frame rates (without RT, of course).