Intel's Upcoming Core i9-13900K Appears on Geekbench

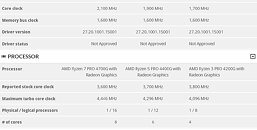

New week, new leak: an engineering sample of Intel's upcoming Raptor Lake based Core i9-13900K has appeared in the infamous Geekbench database. It seems to be one of the ES samples that have been making the rounds over the past few weeks, but this is the first time we get an indication of what its performance might be like. There are no real surprises in terms of specifications. We're looking at a base clock of 3 GHz and a boost clock of 5.5 GHz, both of which have already been reported for these chips. The 24-core, 32-thread CPU was paired with 32 GB of 6400 MHz DDR5 memory and an ASUS ROG Maximus Z690 Extreme motherboard. Unfortunately, Geekbench flags the results as invalid due to "an issue with the timers" on the system.

That said, we can still compare the results with a similar system using a Core i9-12900K on an ASUS ROG Strix Z690-F Gaming board, also paired with 32 GB of 6400 MHz DDR5 memory. The older Alder Lake system is actually slightly faster in the single-core test, where it scores 2,142 points versus 2,133 points for the Raptor Lake based system, despite its lower maximum frequency of 5.1 GHz. The Raptor Lake system is faster in the multi-core test, at 23,701 vs. 21,312 points. However, there's little point in analysing these numbers in any depth, as the Raptor Lake results are all over the place: it beats the Alder Lake CPU by a significant margin in some tests and loses to it in others where, given its higher clock speed and additional power-efficient cores, it shouldn't be falling behind. At least this shows that Raptor Lake runs largely as intended on current 600-series motherboards, so those considering an upgrade to Intel's 13th generation CPUs shouldn't face any big hurdles.