Intel Plays the Pre-Zen AMD Tune: Advocates Focus Shift from "Benchmarks to Benefits"

Intel CEO Bob Swan, in his Virtual Computex YouTube stream, argued that the industry should focus less on benchmarks and more on the benefits of technology, a line of thought that rival AMD pushed strongly in its pre-Ryzen era, before the company became competitive with Intel again. "We should see this moment as an opportunity to shift our focus as an industry from benchmarks to the benefits and impacts of the technology we create," he said, referring to technology keeping civilization and economies afloat during the COVID-19 global pandemic, which has disrupted Computex along with practically every other public gathering.

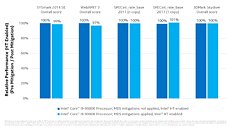

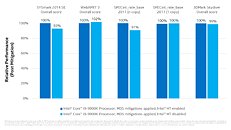

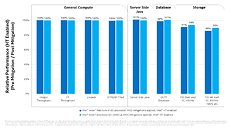

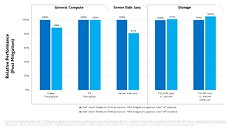

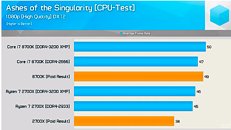

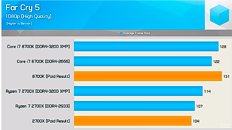

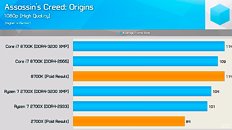

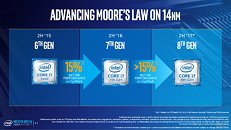

"The pandemic has underscored the need for technology to be purpose-built so it can meet these evolving business and consumer needs. And this requires a customer-obsessed mindset to stay close, anticipate those needs, and develop solutions. In this mindset, the goal is to ensure we are optimizing for a stronger impact that will support and accelerate positive business and societal benefits around the globe," he added. An example of what Swan is trying to say is visible with Intel's 10th generation Core "Cascade Lake XE" and "Ice Lake" processors, which feature AVX-512 and DL-Boost, accelerating deep learning neural nets; but lose to AMD's flagship offerings on the vast majority of benchmarks. Swan also confirmed that the company's "Tiger Lake" processors will launch this Summer.The Computex video address by CEO Bob Swan can be watched below.

"The pandemic has underscored the need for technology to be purpose-built so it can meet these evolving business and consumer needs. And this requires a customer-obsessed mindset to stay close, anticipate those needs, and develop solutions. In this mindset, the goal is to ensure we are optimizing for a stronger impact that will support and accelerate positive business and societal benefits around the globe," he added. An example of what Swan is trying to say is visible with Intel's 10th generation Core "Cascade Lake XE" and "Ice Lake" processors, which feature AVX-512 and DL-Boost, accelerating deep learning neural nets; but lose to AMD's flagship offerings on the vast majority of benchmarks. Swan also confirmed that the company's "Tiger Lake" processors will launch this Summer.The Computex video address by CEO Bob Swan can be watched below.