Intel Announces 10th Gen Core X Series and Revised Pricing on Xeon-W Processors

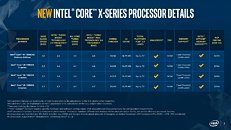

Intel today unveiled its latest lineup of Intel Xeon W and X-series processors, which puts new classes of computing performance and AI acceleration into the hands of professional creators and PC enthusiasts. Custom-designed to address the diverse needs of these growing audiences, the new Xeon W-2200 and X-series processors are targeted to be available starting in November. They come with a new pricing structure that makes them an easier step up for creators and enthusiasts moving from Intel Core S-series mainstream products.

Intel is the only company that delivers a full portfolio of products precision-tuned to handle the sustained, compute-intensive workloads that professional creators and enthusiasts run every day. The new Xeon W-2200 and X-series processors take this to the next level as the first high-end desktop PC and mainstream workstation processors to feature AI acceleration, through the integration of Intel Deep Learning Boost. This delivers 2.2 times the AI inference performance of the prior generation. Additionally, the new lineup features Intel Turbo Boost Max Technology 3.0, which has been further enhanced to help software, such as simulation and modeling applications, run as fast as possible by identifying and prioritizing the fastest available cores.