Monday, November 5th 2018

Intel Announces Cascade Lake Advanced Performance and Xeon E-2100

Intel today announced two new members of its Intel Xeon processor portfolio: Cascade Lake advanced performance (expected to be released in the first half of 2019) and the Intel Xeon E-2100 processor for entry-level servers (general availability today). These two new product families build upon Intel's foundation of 20 years of Intel Xeon platform leadership and give customers even more flexibility to pick the right solution for their needs.

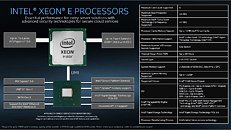

"We remain highly focused on delivering a wide range of workload-optimized solutions that best meet our customers' system requirements. The addition of Cascade Lake advanced performance CPUs and Xeon E-2100 processors to our Intel Xeon processor lineup once again demonstrates our commitment to delivering performance-optimized solutions to a wide range of customers," said Lisa Spelman, Intel vice president and general manager of Intel Xeon products and data center marketing.

Cascade Lake advanced performance represents a new class of Intel Xeon Scalable processors designed for the most demanding high-performance computing (HPC), artificial intelligence (AI) and infrastructure-as-a-service (IaaS) workloads. The processor incorporates a performance-optimized multi-chip package to deliver up to 48 cores per CPU and 12 DDR4 memory channels per socket. Intel shared initial details of the processor in advance of the Supercomputing 2018 conference to provide further insight into the company's extended innovations in workload types.

Cascade Lake advanced performance processors are expected to continue Intel's focus on offering workload-optimized performance leadership by delivering both core CPU performance gains¹ and leadership in memory-bandwidth-constrained workloads. Performance estimations include:

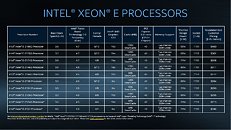


- Linpack up to 1.21x versus Intel Xeon Scalable 8180 processor and 3.4x versus AMD EPYC 7601

- Stream Triad up to 1.83x versus Intel Xeon Scalable 8180 processor and 1.3x versus AMD EPYC 7601

- AI/Deep Learning Inference up to 17x images per second² versus Intel Xeon Platinum processor at launch
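As a rough cross-check, dividing the two speedups in each bullet gives the ratio implied between the Xeon Scalable 8180 and the EPYC 7601. The sketch below is only arithmetic on the figures quoted above. The dictionary keys and the helper name `implied_8180_vs_epyc` are illustrative, and it assumes both figures in a bullet were measured under comparable conditions, which the announcement does not establish.

```python
# Speedups quoted in the announcement, each expressed as
# "Cascade Lake advanced performance vs. the named processor".
claims = {
    "Linpack": {"xeon_8180": 1.21, "epyc_7601": 3.4},
    "Stream Triad": {"xeon_8180": 1.83, "epyc_7601": 1.3},
}


def implied_8180_vs_epyc(claim):
    """Return the Xeon 8180 / EPYC 7601 ratio implied by the two speedups.

    If CLX-AP = a * 8180 and CLX-AP = b * EPYC, then 8180 = (b / a) * EPYC.
    """
    return claim["epyc_7601"] / claim["xeon_8180"]


implied = {name: round(implied_8180_vs_epyc(c), 2) for name, c in claims.items()}

for name, ratio in implied.items():
    print(f"{name}: Xeon 8180 is roughly {ratio}x the EPYC 7601 figure")
```

By this arithmetic the quoted numbers imply the 8180 at roughly 2.81x the EPYC 7601 on Linpack and roughly 0.71x on Stream Triad, so the projected memory-bandwidth lead over EPYC depends on the move to 12 memory channels.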

Small businesses deploying Intel Xeon E-2100 processor-based servers will benefit from the processor's enhanced performance and data security. They will allow businesses to operate smoothly by supporting the latest file-sharing, storage and backup, virtualization, and employee productivity solutions.

Intel Xeon E-2100 processors are available today through Intel and leading distributors.

36 Comments on Intel Announces Cascade Lake Advanced Performance and Xeon E-2100

Anyone praising the "better than Epyc" numbers now should wait for actual review testing; otherwise you look very foolish. It may be true, but it's really too early to believe anything Intel says. Intel has a habit of fudging numbers for PR stunts.

And what's wrong with this 3.4x anyway? Skylake-SP has two AVX-512 execution units per core, while Zen 1 has two 128-bit ADD and two 128-bit MUL units instead. No surprise there's a crushing advantage in LINPACK. It has always been the case in HPC.
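To put rough numbers on that, here is a minimal sketch of peak double-precision FLOPs per cycle per core, taking the unit counts from the comment above. It assumes each AVX-512 unit issues one fused multiply-add per cycle (counted as two FLOPs per lane) and that the Zen 1 ADD and MUL units each deliver one FLOP per lane. The function name is hypothetical, and clock speeds, core counts, and memory limits are ignored.

```python
def peak_dp_flops_per_cycle(units, width_bits, flops_per_lane):
    """Peak double-precision FLOPs per cycle for a group of identical vector units."""
    lanes = width_bits // 64  # number of 64-bit doubles per vector register
    return units * lanes * flops_per_lane


# Skylake-SP (assumption: two 512-bit FMA units, an FMA counts as 2 FLOPs per lane).
skylake_sp = peak_dp_flops_per_cycle(units=2, width_bits=512, flops_per_lane=2)

# Zen 1 (assumption: two 128-bit ADD units plus two 128-bit MUL units,
# each producing 1 FLOP per lane per cycle).
zen1 = (peak_dp_flops_per_cycle(units=2, width_bits=128, flops_per_lane=1)
        + peak_dp_flops_per_cycle(units=2, width_bits=128, flops_per_lane=1))

per_core_ratio = skylake_sp / zen1

print(f"Skylake-SP: {skylake_sp} DP FLOPs/cycle/core")
print(f"Zen 1:      {zen1} DP FLOPs/cycle/core")
print(f"Per-core, per-clock ratio: {per_core_ratio:.1f}x")
```

Under these assumptions the per-core, per-clock gap is 32 versus 8, or 4x, before any difference in core count or sustained AVX-512 clocks is taken into account, so a large LINPACK lead is plausible on paper.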

What's more, they are testing without security patches (read the small print below their results) on both Windows and Linux (the Linux tests were done in 2017 on a 3.10.0 kernel).

It is a shame what Intel is stooping to.

I suppose.... But who's gonna turn HTT off when they throw this chip into a machine? Why would they even put it on the chip in the first place if performance is so great without it? I'm guessing that they just cherry-pick a few programs that benefit from disabling it, run some tests, and say "see 3.4X!"

I see they also disabled it for their DL inference.

@mat9v

They write "2 AMD EPYC" in the config details slide.... Also, whatever the "stream triad" test is, they use results from a June 2017 test of Epyc...

I dunno, they may as well hand the chips over to Principled Technologies, lol.

They are not comparing against an actual working system based on 2x 48-core MCPs but against PROJECTIONS of its performance!!!!

Anyway, this monster will not be cheap by any standard, hopefully not more than $20k but who knows. It also will not be a drop-in replacement, so it will be present in new servers only. By the time it hits the market, EPYC2 with 64 cores will (probably) be selling, and from what info is available, it will probably be even cheaper than EPYC1 to manufacture.

If the 8x8 + IOX configuration is real, Intel will be so deep in trouble it is not even funny.

My prediction was correct that they eventually wouldn't have a choice but to "glue together" dies.

Horrendous, no doubt. Intel is getting dangerously close to having a CPU that requires extreme cooling, and when you have an entire floor of these that's going to be very problematic.

That being said, it looks like Rome will be in a league of its own when it comes down to just about everything.

G̶l̶u̶e̶l̶e̶s̶s̶ ̶D̶e̶s̶i̶g̶n̶

Although buying corporate customers still works, AMD EPYC is flying past Intel.

Your own e̶n̶g̶i̶n̶e̶e̶r̶i̶n̶g̶ marketing team thinks it's crap!