NEC Launches Its SX-Aurora TSUBASA Vector Engine

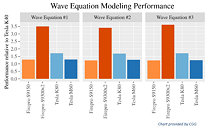

NEC Corporation (TSE: 6701) today announced the launch of a new high-end HPC product line, the SX-Aurora TSUBASA. The new platform drastically increases processing performance and scalability on real-world applications. It targets traditional application areas such as science and engineering, as well as the newer fields of machine learning, artificial intelligence, and big data analytics. With this technology, NEC opens supercomputing to a wide range of new markets beyond the traditional HPC arena.

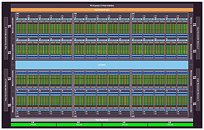

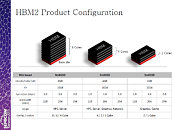

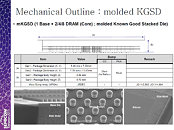

Utilizing cutting-edge chip integration technology, the new product features a complete multi-core vector processor in the form of a card-type Vector Engine (VE), which is developed based on NEC's high-density interface technology and efficient cooling technology. Kimihiko Fukuda, Executive Vice President, NEC Corporation, said, "The new product addresses the needs of scalar computational capability while still providing the efficiency of a vector architecture. This is accomplished through a tightly integrated complete vector system in the form of a Vector Engine Card."
