AMD to Simultaneously Launch 3rd Gen Ryzen and Unveil Radeon "Navi" This June

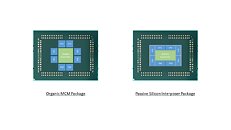

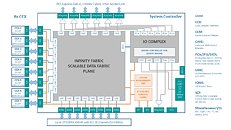

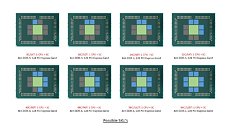

TAITRA, the governing body behind the annual Computex trade show held in Taipei each June, announced that AMD CEO Dr. Lisa Su will host a keynote address that promises to be as exciting as her CES keynote. According to the announcement, Dr. Su will simultaneously launch or unveil at least four product lines. High on the agenda are AMD's highly anticipated 3rd generation Ryzen desktop processors in the socket AM4 package, based on the "Zen 2" microarchitecture and a multi-chip module (MCM) codenamed "Matisse." This launch could be followed by a major announcement related to the company's 2nd generation EPYC enterprise processors, based on the "Rome" MCM.

PC enthusiasts are in for a second major announcement, this time from RTG, with a technical reveal or unveiling of Radeon "Navi," the company's first GPU designed from the ground up for the 7 nm silicon fabrication process. It remains to be seen which market segment AMD will target with the first "Navi" products, and the question on everyone's mind, whether AMD has added DXR acceleration, could finally be answered. Lastly, the company could announce more variants of its Radeon Instinct DNN accelerators.
