MSI Launches Two CMP 30HX MINER Series Cards

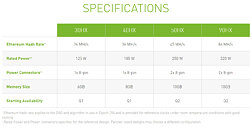

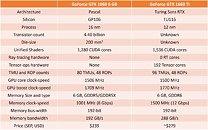

MSI has announced two new CMP (Cryptocurrency Mining Processor) 30HX MINER Series cards: the MSI CMP 30HX MINER and the MSI CMP 30HX MINER XS. The two cards use coolers borrowed from the ARMOR and VENTUS XS series, respectively. MSI is one of the first manufacturers to offer more than one version of a CMP card. Since both cards share identical specifications, it will be interesting to see how they differ in performance and pricing. Both use the TU116-100 GPU with 1408 cores, a base clock of 1530 MHz, a boost clock of 1785 MHz, and 6 GB of GDDR6 memory. Like all CMP cards, they have no display outputs. Pricing and availability have not yet been announced.