Thursday, October 1st 2009

NVIDIA Unveils Next Generation CUDA GPU Architecture – Codenamed "Fermi"

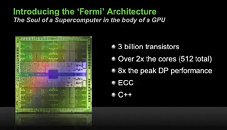

NVIDIA Corp. today introduced its next generation CUDA GPU architecture, codenamed "Fermi". An entirely new ground-up design, the "Fermi" architecture is the foundation for the world's first computational graphics processing units (GPUs), delivering breakthroughs in both graphics and GPU computing.

"NVIDIA and the Fermi team have taken a giant step towards making GPUs attractive for a broader class of programs," said Dave Patterson, director of the Parallel Computing Research Laboratory, U.C. Berkeley, and co-author of Computer Architecture: A Quantitative Approach. "I believe history will record Fermi as a significant milestone."

Presented at the company's inaugural GPU Technology Conference in San Jose, California, "Fermi" delivers a feature set that accelerates performance on a wider array of computational applications than ever before. Joining NVIDIA's press conference was Oak Ridge National Laboratory, which announced plans for a new supercomputer that will use NVIDIA GPUs based on the "Fermi" architecture. "Fermi" also garnered the support of leading organizations including Bloomberg, Cray, Dell, HP, IBM and Microsoft.

"It is completely clear that GPUs are now general purpose parallel computing processors with amazing graphics, and not just graphics chips anymore," said Jen-Hsun Huang, co-founder and CEO of NVIDIA. "The Fermi architecture, the integrated tools, libraries and engines are the direct results of the insights we have gained from working with thousands of CUDA developers around the world. We will look back in the coming years and see that Fermi started the new GPU industry."

As the foundation for NVIDIA's family of next generation GPUs, namely GeForce, Quadro and Tesla, "Fermi" features a host of new technologies that are "must-have" features for the computing space, including:

- C++, complementing existing support for C, Fortran, Java, Python, OpenCL and DirectCompute.

- ECC, a critical requirement for datacenters and supercomputing centers deploying GPUs on a large scale

- 512 CUDA Cores featuring the new IEEE 754-2008 floating-point standard, surpassing even the most advanced CPUs

- 8x the peak double precision arithmetic performance over NVIDIA's last generation GPU. Double precision is critical for high-performance computing (HPC) applications such as linear algebra, numerical simulation, and quantum chemistry

- NVIDIA Parallel DataCache - the world's first true cache hierarchy in a GPU that speeds up algorithms such as physics solvers, raytracing, and sparse matrix multiplication where data addresses are not known beforehand

- NVIDIA GigaThread Engine with support for concurrent kernel execution, where different kernels of the same application context can execute on the GPU at the same time (eg: PhysX fluid and rigid body solvers)

- Nexus - the world's first fully integrated heterogeneous computing application development environment within Microsoft Visual Studio
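To illustrate what "data addresses are not known beforehand" means for the sparse matrix case in the Parallel DataCache item above, here is a minimal sketch in plain Python, not GPU code. It assumes the common compressed sparse row (CSR) layout; the function and variable names (`csr_matvec`, `values`, `col_idx`, `row_ptr`) are illustrative and do not come from NVIDIA's announcement.

```python
# Minimal CSR (compressed sparse row) matrix-vector product in plain Python.
# All names here are illustrative assumptions, not part of any NVIDIA API.

def csr_matvec(values, col_idx, row_ptr, x):
    """Return y = A @ x for a matrix A stored in CSR form."""
    y = [0.0] * (len(row_ptr) - 1)
    for row in range(len(y)):
        for k in range(row_ptr[row], row_ptr[row + 1]):
            # x[col_idx[k]] is an indirect read: which element of x is
            # touched is only known after col_idx[k] has been loaded.
            y[row] += values[k] * x[col_idx[k]]
    return y

# A = [[4, 0, 0],
#      [0, 0, 2],
#      [1, 0, 3]]
values = [4.0, 2.0, 1.0, 3.0]   # non-zero entries, row by row
col_idx = [0, 2, 0, 2]          # column of each non-zero entry
row_ptr = [0, 1, 2, 4]          # where each row starts in values/col_idx
x = [1.0, 2.0, 3.0]

y = csr_matvec(values, col_idx, row_ptr, x)
print(y)  # [4.0, 6.0, 10.0]
```

Because the reads of `x` depend on the contents of `col_idx`, the access pattern cannot be laid out ahead of time, which is the kind of workload a hardware cache is generally expected to help with.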

49 Comments on NVIDIA Unveils Next Generation CUDA GPU Architecture – Codenamed "Fermi"

Now we'd need some benchies ... I believe Wizz knows more about the new card than we do.

Market share does not [directly] come from having the performance crown. It comes from having the best price-performance ratio. In the GT200 vs HD4000 era Nvidia had the fastest GPU but ATI gained market share because of its better value.

Of course the halo-effect can have a small influence, but it is generally not great.

www.anandtech.com/video/showdoc.aspx?i=3651

DC4.0 and 4.1 are available to DX10 and 10.1 hardware respectively.

DX11 (and DX10) were not focused on parallel computing, and don't compete with CUDA/Streams/OpenCL. You can't use DX11/DirectCompute to do the things that are possible with CUDA/Streams/OpenCL.

And exactly, erocker. Microsoft gets to do the evil laughing and thumb twiddling instead of NVIDIA. :roll:

So Nvidia will run DX11 compute through CUDA, OpenCL through CUDA, etc.

CUDA= Compute Unified Device Architecture <- It says it all.

The problem is that the media has mixed things badly, giving the name CUDA to the software, when it's not.

CUDA is going to be used in a supercomputer, so that alone means a lot of cards sold. I don't know the number, but it could mean 20,000 Tesla cards. At $4000 each, do the calculations.
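For anyone who wants the calculation spelled out, a quick sketch follows. Both inputs are the guesses from the comment above (20,000 cards, $4000 per card), not confirmed figures, and the variable names are only for illustration.

```python
# Back-of-the-envelope estimate using the commenter's guessed figures.
# Neither number is a confirmed order size or price.
cards = 20_000               # guessed number of Tesla cards
price_per_card_usd = 4_000   # guessed price per card in USD

revenue_usd = cards * price_per_card_usd
print(f"${revenue_usd:,}")   # $80,000,000
```

So under those assumptions a single installation would be on the order of $80 million in card sales.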

In the meantime the consumer market will not be affected at all. G80 and GT200 were already focused on general computing and they did well in gaming. People have nothing to worry about.

All supercomputers still run racks of CPUs (almost 300,000 of them in one :eek:) and very, very few GPUs (no more than a display for management). That could change with Larrabee but I doubt it will change before then. Why? Neither CUDA nor Stream is fully programmable: Larrabee is. Make a protein folding driver and you've got a protein folding Larrabee card. Make a DX11 driver and you've got a gaming Larrabee card. Every card, so long as it has an appropriate driver, is made to order for the task at hand. Throw that on a Nehalem-based Itanium mainframe and you've got a giant bucket of kickass computing. :rockout:

www.dvhardware.net/article38174.html

Or here's another example: instead of creating an 8000-CPU (1000 8p servers) supercomputer, they used only 48 servers with Tesla.

wallstreetandtech.com/it-infrastructure/showArticle.jhtml?articleID=220200055&cid=nl_wallstreettech_daily
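To put that comparison in numbers, here is a small sketch using only the figures quoted in the comment above (1000 eight-processor servers versus 48 Tesla servers). It compares server counts only and says nothing about relative cost or performance; the variable names are illustrative.

```python
# Server-count comparison using only the figures quoted in the comment.
cpu_servers = 1_000      # CPU-only servers in the alternative design
cpus_per_server = 8      # "8p" = eight processors per server
tesla_servers = 48       # servers with Tesla actually used

total_cpus = cpu_servers * cpus_per_server
server_ratio = cpu_servers / tesla_servers

print(total_cpus)              # 8000
print(round(server_ratio, 1))  # 20.8
```

That is roughly 21 times fewer servers, taking the quoted numbers at face value.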

Or this: www.embedded-computing.com/news/Technology+Partnerships/14323 Cray supercomputer on a desk using Tesla.

You are outdated when it comes to CUDA programmability too: www.techpowerup.com/105013/NVIDIA_Introduces_Nexus_The_Industry%E2%80%99s_First_IDE_for_Developers_Working_with_MS_VS.html

Fermi can run C/C++ and Fortran natively. Larrabee has lost every bit of advantage it had there, it's nowhere to be found, and it will not be until late 2010 or even 2011.

Larrabee is slated for Q2 2010.

Bottom line, what new things can they do now, after some time of screaming CUDA and STREAMS:

1. They can encode video. For professionals it's not an option: not too many options on the encoder, and if you start to give it some deinterlacing/filter stuff to do and some huge bitrate, the video card will be very limited in what it can do to help. I tried it and it's crap. It's good for YouTube and PS3/Xbox 360, but not for freaks who want the best quality.

2. Folding@home is not my thing.

3. It can speed up some applications like Adobe and Sony Vegas, again very, very limited; the CPU is still the best upgrade if you do this kind of thing.

4. Some new decoders are helped by video cards, but for people who have at least a dual core it's like "so now it runs partially on my video card? Wow, I would've never known".

5. Physics in games. It's probably the only thing we actually see as real progress from all the bullshit they serve us.

6. Something I forgot :)

Bottom line is they talked about what the video card can do and how great things will be, but time passed and nothing. The encoding was supposed to be top notch and fast on a GPU, and look, a year has passed and it's basic and limited in what you can do. It's so basic that most of us who want better quality never have an option using Badaboom/CyberLink Espresso; they are crap.

I will not stand for more bullshit about "supercomputing" on the GPU. SHOW ME WHAT YOU CAN DO OTHER THAN GRAPHICS THAT COULD CHANGE MY PRIORITIES IN BUYING HARDWARE!!!

@leonard_222003

The GPU architecture has changed, and you are talking about the past implementations. And it's been 2 years since it started. How long do you think it took x86 CPUs to gain traction and replace other implementations? More than that. New things take time.

Also, what the man with vReveal shows me, is that all the GPU can do? That program is so useless you can't even imagine it before you use it. Just try it and then talk.

"Unification"? On Windows? Think bigger, better, more... OpenX. You are now imposing limitation to the idea, not just the actual product. If Windows is what you think about, then your idea is to "help" ATI, not the developers and the users. There is life outside Windows you know. DirectCompute is not the answer... it belongs to Microsoft.

I don't think people realize that CUDA and Stream will be here forever. Why is that? Because all these so-called "open" or "unified" standards run on CUDA/Stream. There is the GPU, then there is CUDA/Stream, then there's everything else. These things are not really APIs, they are just wrappers around the CUDA/Stream APIs.

This is why coding something for CUDA/Stream is more efficient than using OpenCL, DirectCompute or whatever.

So I must point out the obvious, because people also think that it's nVidia's fault that CUDA is used and not OpenCL or whatever. The coders choose to use CUDA. nVidia supports OpenCL or whatever just as well.

Another obvious point would be that there are far more things using CUDA than there are using Stream. This is not because nVidia is the big bad wolf, it is because it has a proactive mentality, the opposite of ATI's wait-and-see approach.

ATI is pushing games. DX11. That's it. nVidia is struggling to change the market mentality by pushing GPGPU. And they are paying for it. Everyone has something to say about them, then points out that the competition (namely ATI) is doing things much better... the truth is they are not doing it.

For example PhysX/Havok. ATI doesn't have Havok anymore, they "employed" a shady 3rd rate company to "create" an "open" physics standard. I don't think they intend to complete the project, they just need something "in the works" to compete with PhysX. So it seems that PhysX does matter.

Only 5x faster than you'd normally do using an expensive high-end CPU. Performance increase is always useless...

It's the intended purpose of the application, it's not a GPU functions' showcase.

Why do people post before thinking?

Why does everybody only care about what is good for them? All this GPU computing is free* right now, you just have to choose the correct program. So if it's free, where's the problem? Nvidia right now sell their cards competitively according to graphics performance, but they offer more. Those extra things are not for everybody? So what? Let those people have what they DO want, what they do find useful. TBH, it's as if it bothered you that other people had something that you do not want anyway.

*The programs are not free, but they don't cost more because of that feature, it's a free added feature.

@Sihastru

Well said.

Regarding CUDA/Stream, I think the best way of explaining it is that DX11 and OpenCL are more like BASIC (programming language) and CUDA/Stream are more like ASSEMBLY language, in the sense that that's what the GPUs run natively. But C for CUDA (programming API) is a high-level language with a direct relation to the low-level CUDA (architecture and ISA). CUDA is so good because it's a high-level and low-level language at the same time, and that is very useful in the HPC market.