Tuesday, October 13th 2009

Intel IGPs Use Murky Optimisations for 3DMark Vantage

Apart from being the industry's leading 3D graphics benchmark application, 3DMark has a long history of 3D graphics hardware manufacturers cheating at it, using application-specific optimisations that go against Futuremark's guidelines to boost scores. Often, this is done by drivers detecting the 3DMark executable and downgrading image quality, so the graphics processor handles a lighter processing load from the application and ends up with a higher score. Time and again, such application-specific optimisations have tarnished 3DMark's credibility as an industry-wide benchmark.

This time around, it's neither of the two graphics giants in the news for the wrong reasons; it's Intel. Although the company has a wide consumer base for its integrated graphics, the discerning media user or very casual gamer may find it best to opt for integrated graphics (IGP) solutions from NVIDIA or AMD. Such choices rely on reviews evaluating the IGPs' performance at accelerating video (where it's common knowledge that Intel's IGPs rely heavily on the CPU for smooth video playback, while competing IGPs fare better at hardware acceleration), synthetic and real-world 3D benchmarks, and other application-specific tests.

Here's a shady trick Intel is using to inflate its 3DMark Vantage score: according to an investigation by The Tech Report, when the drivers detect the 3DMark Vantage executable, they change the way they normally function, asking the CPU to pitch in with its processing power and gaining significant performance. While the application's image quality isn't affected, the load on the IGP is effectively reduced, deviating from the driver's usual working model. This violates Futuremark's 3DMark Vantage Driver Approval Policy, which says:

Source: The Tech Report


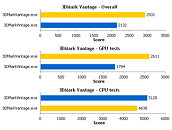

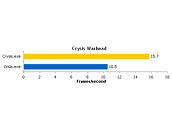

With the exception of configuring the correct rendering mode on multi-GPU systems, it is prohibited for the driver to detect the launch of 3DMark Vantage executable and to alter, replace or override any quality parameters or parts of the benchmark workload based on the detection. Optimizations in the driver that utilize empirical data of 3DMark Vantage workloads are prohibited.There's scope for ambiguity there. To prove that Intel's drivers indeed don't play fair at 3DMark Vantage, Tech Report put an Intel G41 Express chipset based motherboard with Intel's latest 15.15.4.1872 Graphics Media Accelerator drivers, through 3DMark Vantage 1.0.1. The reviewer simply renamed the 3DMark executable, in this case from "3DMarkVantage.exe" to "3DMarkVintage.exe", and there you are: a substantial performance difference.A perfmon (performance monitor) log of the benchmark as it progressed, shows stark irregularities in the CPU load graphs between the two, during the GPU tests, although the two remained largely the same during the CPU tests. An example of one such graphs is below:When asked for a comment to these findings, Intel replied by saying that its drivers are designed to utilize the CPU for some parts of the 3D rendering such as geometry rendering, when pixel and vertex processing saturates the IGP. Call of Juarez, Crysis, Lost Planet: Extreme Conditions, and Company of Heroes, are among other applications that the driver sees and quickly morphs the way the entire graphics subsystem works. A similar test run on Crysis Warhead yields a similar result:Currently, Intel's 15.15.4.1872 drivers for Windows 7 aren't in Futuremark's list of approved drivers, none of Intel's Windows 7 drivers do. For a complete set of graphs, refer to the source article.
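To make the renaming test concrete, here is a minimal sketch of how name-based application detection could work in principle, assuming a driver keeps a table of per-application profiles keyed on the executable's file name. The table, the profile names, the install path and the function `select_profile` are hypothetical illustrations, not Intel's actual driver logic; only the two file names come from the article.

```python
from pathlib import PureWindowsPath

# Hypothetical per-application profile table (illustrative only; this is NOT
# Intel's real driver data). Keys are lowercased executable file names.
APP_PROFILES = {
    "3dmarkvantage.exe": "cpu_assisted_geometry",
}

# Profile used for any executable that is not in the table.
DEFAULT_PROFILE = "default"


def select_profile(exe_path: str) -> str:
    """Choose a rendering profile using nothing but the executable's file name."""
    # PureWindowsPath parses Windows-style paths on any OS without touching disk.
    name = PureWindowsPath(exe_path).name.lower()
    return APP_PROFILES.get(name, DEFAULT_PROFILE)


if __name__ == "__main__":
    # Hypothetical install paths; only the file names matter to the lookup.
    for path in (
        r"C:\Program Files\Futuremark\3DMark Vantage\3DMarkVantage.exe",
        r"C:\Program Files\Futuremark\3DMark Vantage\3DMarkVintage.exe",
    ):
        print(PureWindowsPath(path).name, "->", select_profile(path))
```

Because a lookup like this depends only on the name, the renamed binary falls through to the default profile, which is consistent with a one-letter rename being enough to change the results.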

45 Comments on Intel IGPs Use Murky Optimisations for 3DMark Vantage

The industry depends too much on artificial benchmark programs.

For example: when I was a noob with an old, non-working PC, I took it to a repairman at a private service and computer shop downtown. The problem was a cheap, too-weak PSU, and they replaced it with a new one. They made most of their decisions seeing me as an unaware customer: they put in another el cheapo unit, the cheapest you can get. Then another guy showed up, who looked like an unprofessional student working in the shop. He was the one who actually replaced the PSU, and he ran "various" tests with 3DMark06 on the afternoon of the last day; he claimed he ran four tests, two hours each, all successful with no power-downs or freezes (they had asked for my Windows login password when I left the PC there). So I paid 16 euros, lol. The PC was there for about 4-5 days. When I turned it on, I saw 3DMark installed, along with some folders scattered around containing result files I didn't fully understand back then. Within 15 minutes, the same screaming noise the old PSU used to make began, and within a week the PSU was as good as the old one.

I'm not certain whether 3DMark really did run successfully for that "wannabe PC pro" guy, but when I played games with settings as high as possible, it failed.

What it really comes down to is, "does my game/application run as I expected".

Does anyone really buy graphics cards (or IGPs) based on the synthetic benchmark scores?

When you buy a pre-built system, none of the manufacturers make any claims about fps in any given scenario. They simply say that you get extreme (barf) performance or whatever, without alluding to what exactly that means or where you get that performance.

Caveat Emptor.

In the second case there's almost no problem, but in the first case the buyer has to believe every word they hear because they don't know anything. In that case, there are many heartless shop assistants who don't mind cheating the buyer to dispose of that old crappy computer no one wants. That's where many legends come from, like the idea that the most important thing in a VGA card is its dedicated memory. It persists even nowadays, so it's common to see people thinking, for example, that a 9300GS with 1 GB of dedicated memory can outperform an 8800GT with 512 MB, or that they need a powerful VGA card to view photos and that sort of thing.

I work as a shop assistant in an electronics shop, and many people come in saying this sort of thing because another shop assistant told them so, only to get them to buy the computer he wanted to sell. I know how many shop assistants do their work :nutkick: I see many people complaining that Crysis doesn't work on their computer, and when I ask about the computer, it's a piece of crap with an Intel IGP, but the assistant said it was fine for gaming. It happens quite often.

I agree with you completely. But if anyone goes into any kind of transaction (whether buying or selling) ill-prepared to do business, then they get what they deserve. There is no excuse these days for being uninformed. Anyone can get a boatload of information off the internet in a matter of minutes. To blindly trust a salesperson at any shop (even one with good intentions, like you) is foolish.

That is why I ended my last post with "Caveat Emptor", which is Latin for "Let the buyer beware".

My job is to sell what the customer needs and provide good service; then that customer will eventually come back to me whenever he needs me. If you ask a cop for directions to the nearest police station and he sends you the other way, do you think it's your fault because you could have used a street guide instead of asking? That's not fair.

Sorry for the off-topic. I'll go lash myself in penitence xD

No, ATI's and NVIDIA's counterparts are better in terms of performance. I once talked with an Intel sales representative, and he told me the performance of a GMA X3000 would be around that of a GeForce4 MX 400. From what he told me, the X4500 would be the same but with enhanced support for decoding HD media formats and some instruction sets to improve performance in AutoCAD and that sort of program. I don't know if all this is true, because I never took any interest in it. Could be a marketing strategy :D


Since, obviously, if the exe file's contents change you won't be able to detect it anymore, even though the optimizations would probably still work just fine for this "new" patched exe file...
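The point above can be illustrated with a content fingerprint instead of a file name. The sketch below assumes a detector that compares a SHA-256 hash of the file's bytes against a known value (whether any real driver fingerprints executables this way is not established in the thread). It writes a dummy stand-in file rather than a real executable, then checks that renaming leaves the hash intact while patching a single byte breaks the match. The names `file_sha256` and `demo` are hypothetical.

```python
import hashlib
import os
import tempfile


def file_sha256(path: str) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in 64 KiB chunks so large executables don't need to fit in memory.
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def demo() -> tuple[bool, bool]:
    """Return (hash survives rename, hash survives a one-byte patch)."""
    with tempfile.TemporaryDirectory() as tmp:
        original = os.path.join(tmp, "benchmark.exe")
        with open(original, "wb") as f:
            f.write(b"MZ" + b"\x00" * 1022)  # stand-in bytes, not a real executable
        known_hash = file_sha256(original)

        # Renaming changes only the directory entry, not the file's bytes.
        renamed = os.path.join(tmp, "renamed.exe")
        os.rename(original, renamed)
        survives_rename = file_sha256(renamed) == known_hash

        # Overwrite one zero byte with 0x01, i.e. a minimal "patch".
        with open(renamed, "r+b") as f:
            f.seek(512)
            f.write(b"\x01")
        survives_patch = file_sha256(renamed) == known_hash

    return survives_rename, survives_patch


if __name__ == "__main__":
    print(demo())  # (True, False)
```

So a hash-based check would catch a simple rename but, as the comment says, would stop matching as soon as the executable's contents are patched.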

2) Don't know; I doubt they do anything.

3) Wrong.

4) Malware hurts the system; this just optimizes the driver in an application-specific way, which is done for almost every game out there.

2) The fact that renaming the executable (every download from the server generates a new executable name) changes performance clearly shows they aren't. They could in the future, but that's their call.

3) There's no evidence they have (#2 proving this).

4) DRM, like SecuROM, is very similar in the lengths you must go to in order to determine whether something is legitimate. SecuROM causes startup delays and false-positive error messages (both attributes common in malware). Having to exhaustively search for an executable means frequent, system-heavy searches that would naturally lead to a decline in performance (just as seen with SecuROM). That performance drag on the system could lead your driver to be labeled malware.

SecuROM's implementation sucked; that's why it got flak for being DRM. Had they done it right, it wouldn't have made the media. Per your definition, a resident antivirus would be malware too.

I have no reason to create a near-impossible-to-detect application, so I'll have to pass on that. Should I have to, every time the application is run it would create a clone of itself in a different directory and alter its binary code as well as its name and other signatures (file size, hash, native language, version, etc.). It would update all shortcuts to point to the new executable and, on close, pass a delete command to the shell to remove itself. The next time the user starts the application, very little would remain the same.

It would effectively be the equivalent of HIV for x86 computers.

As the old saying goes: "where there is a will, there is a way."

"Where there is a will, there is a way." Exactly, and it works in reverse too :) even though I doubt the scientific accuracy of the statement.

Bottom line: the day computers programming themselves becomes commonplace, we're screwed.

But I digress. This is 22nd/23rd century stuff. I think Futuremark should consider randomizing their binary names and the application specific optimizations would most likely disappear.
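Randomizing the binary name, as suggested above, could be as simple as the following sketch. The `bench_` prefix, the 12-character length and the function name `random_exe_name` are arbitrary assumptions for illustration; Futuremark's actual distribution process is not described in the thread. A randomized name would defeat lookups keyed on a fixed file name such as 3DMarkVantage.exe, though not detection based on the file's contents.

```python
import secrets
import string

# Characters allowed in the random part of the name (lowercase letters and digits).
ALPHABET = string.ascii_lowercase + string.digits


def random_exe_name(prefix: str = "bench_", length: int = 12) -> str:
    """Return a fresh executable name like 'bench_<random chars>.exe' (hypothetical scheme)."""
    # secrets.choice draws from a cryptographically strong RNG, so a name
    # cannot be predicted from names handed out for earlier downloads.
    random_part = "".join(secrets.choice(ALPHABET) for _ in range(length))
    return f"{prefix}{random_part}.exe"


if __name__ == "__main__":
    # Each call (e.g. each download) would get a different name.
    print(random_exe_name())
    print(random_exe_name())
```

With 36 possible characters in 12 positions, two downloads colliding on the same name is vanishingly unlikely, so a driver could not rely on a fixed name list.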