Friday, March 18th 2016

NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

With the GeForce GTX 900 series, NVIDIA has exhausted its GeForce GTX nomenclature, according to a sensational scoop from the rumor mill. Instead of going with the GTX 1000 series that has one digit too many, the company is turning the page on the GeForce GTX brand altogether. The company's next-generation high-end graphics card series will be the GeForce X80 series. Based on the performance-segment "GP104" and high-end "GP100" chips, the GeForce X80 series will consist of the performance-segment GeForce X80, the high-end GeForce X80 Ti, and the enthusiast-segment GeForce X80 TITAN.

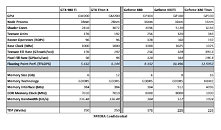

Based on the "Pascal" architecture, the GP104 silicon is expected to feature as many as 4,096 CUDA cores. It will also feature 256 TMUs, 128 ROPs, and a GDDR5X memory interface, with 384 GB/s memory bandwidth. 6 GB could be the standard memory amount. Its texture and pixel fill-rates are rated to be 33% higher than those of the GM200-based GeForce GTX TITAN X. The GP104 chip will be built on the 16 nm FinFET process. The TDP of this chip is rated at 175W.

Moving on, the GP100 is a whole different beast. It's built on the same 16 nm FinFET process as the GP104, and its TDP is rated at 225W. A unique feature of this silicon is its memory controllers, which are rumored to support both GDDR5X and HBM2 memory interfaces. There could be two packages for the GP100 silicon, depending on the memory type. The GDDR5X package will look simpler, with a large pin-count to wire out to the external memory chips, while the HBM2 package will be larger, to house the HBM stacks on the package, much like AMD's "Fiji." The GeForce X80 Ti and the X80 TITAN will hence be two significantly different products, beyond their CUDA core counts and memory amounts.

The GP100 silicon physically features 6,144 CUDA cores, 384 TMUs, and 192 ROPs. On the X80 Ti, you'll get 5,120 CUDA cores, 320 TMUs, 160 ROPs, and a 512-bit wide GDDR5X memory interface, holding 8 GB of memory, with a bandwidth of 512 GB/s. The X80 TITAN, on the other hand, features all the CUDA cores, TMUs, and ROPs present on the silicon, and adds a 4096-bit wide HBM2 memory interface, holding 16 GB of memory, at a scorching 1 TB/s memory bandwidth. Both the X80 Ti and the X80 TITAN double the pixel and texture fill-rates of the GTX 980 Ti and GTX TITAN X, respectively.
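As a rough sanity check on the rumored memory figures, the per-pin data rate they imply can be worked out from bus width and total bandwidth alone. The short Python sketch below does only that arithmetic. The function name `implied_pin_rate_gbps` is made up for illustration, and reading "1 TB/s" as 1,024 GB/s is an assumption, not something the rumor states.

```python
def implied_pin_rate_gbps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbit/s) implied by a total bandwidth and a bus width."""
    # bandwidth (GB/s) = bus width (bits) * per-pin rate (Gbit/s) / 8 bits per byte,
    # so the per-pin rate is bandwidth * 8 / bus width.
    return bandwidth_gb_s * 8 / bus_width_bits


# (bandwidth in GB/s, bus width in bits) as quoted in the rumor.
# Assumption: "1 TB/s" is taken as 1,024 GB/s.
rumored = {
    "X80 Ti (GDDR5X)": (512.0, 512),
    "X80 TITAN (HBM2)": (1024.0, 4096),
}

for name, (bandwidth, width) in rumored.items():
    rate = implied_pin_rate_gbps(bandwidth, width)
    print(f"{name}: {rate:.2f} Gbit/s per pin")
```

Under those assumptions the GDDR5X configuration would need a much higher per-pin rate than the HBM2 one, which fits the general trade-off between a narrow, fast external bus and a very wide, slower on-package one.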

Source: VideoCardz

180 Comments on NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

Hmmm... what to do...

Well, these are only rumors, but if they turn out to be true, I will laugh and cry at the same time. I still don't know which I'd do more.

Is it "some TPU guy just made this up"? =/

This is basically just some guys "randomly" guessing what a new generation could look like by increasing every feature by 50-100%, just for the attention. Almost everyone who has been following the news could probably guess the next generation's specs with about 80% accuracy, unless there is a huge change in architecture like Kepler was. These early wild guesses have been the norm for every generation, e.g. Fermi, Kepler, Maxwell... and they've never been right. The actual specs are usually leaked within a month or so of the product release.

- X80 does not really sound good when you think of cut-down chips. X75?? For the Pascal 970? Nahhh

- X does not fit the lower segments at all. GTX in the low end becomes a GT. So X becomes a.... Y? U? Is Nvidia going Intel on us? It's weird.

- X(number) makes every card look unique and does not denote an arch or a gen. So the following series is... X1? That would result in an exact repeat of the previous naming scheme with two letters omitted. Makes no sense.

- They are risking exact copies of previous card names, especially those of the competitor.

Then on to the specs.

- Implementing both GDDR5 and HBM controllers on one chip is weird.

- How are they differentiating beyond the Titan? Titan was never the fastest gaming chip. It was and always is the biggest waste of money with a lot of VRAM. They also never go all out on the first big chip release. The X80 Ti will come 'after Titan', not before.

- HBM2 had to be tossed in here somehow, so there it is. Right? Right.

- How are they limiting bus width on the cut-down versions? Why 6 GB when the previous-gen AMD mid-range already hits 8?

devblogs.nvidia.com/parallelforall/tag/memory/