Friday, March 18th 2016

NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

With the GeForce GTX 900 series, NVIDIA has exhausted its GeForce GTX nomenclature, according to a sensational scoop from the rumor mill. Instead of going with a GTX 1000 series, which would have one digit too many, the company is turning the page on the GeForce GTX brand altogether. NVIDIA's next-generation high-end graphics card series will be the GeForce X80 series. Based on the performance-segment "GP104" and high-end "GP100" chips, the GeForce X80 series will consist of the performance-segment GeForce X80, the high-end GeForce X80 Ti, and the enthusiast-segment GeForce X80 TITAN.

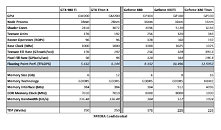

Based on the "Pascal" architecture, the GP104 silicon is expected to feature as many as 4,096 CUDA cores. It will also feature 256 TMUs, 128 ROPs, and a GDDR5X memory interface with 384 GB/s of memory bandwidth. 6 GB could be the standard memory amount. Its texture and pixel fill-rates are rated to be 33% higher than those of the GM200-based GeForce GTX TITAN X. The GP104 chip will be built on the 16 nm FinFET process, and its TDP is rated at 175 W.

Moving on, the GP100 is a whole different beast. It's built on the same 16 nm FinFET process as the GP104, and its TDP is rated at 225 W. A unique feature of this silicon is its memory controller, which is rumored to support both GDDR5X and HBM2 memory interfaces. There could be two packages for the GP100 silicon, depending on the memory type. The GDDR5X package will look simpler, with a large pin count to wire out to the external memory chips, while the HBM2 package will be larger, to house the HBM stacks on the package, much like AMD "Fiji." The GeForce X80 Ti and the X80 TITAN will hence be two significantly different products, beyond their CUDA core counts and memory amounts.
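The 33% fill-rate figure for the GP104 lines up with its unit counts if clocks are held equal, since theoretical texture fill-rate is TMUs × core clock and pixel fill-rate is ROPs × core clock. The quick Python check below assumes the GTX TITAN X's published 192 TMUs and 96 ROPs (not stated in the rumor) and an identical, hypothetical clock for both chips; it is a back-of-the-envelope sanity check, not a performance prediction.

```python
# Theoretical peak fill-rates scale with unit count times core clock.
# Assumption: both chips run at the same clock, so the clock cancels out.

GP104 = {"tmus": 256, "rops": 128}    # rumored figures from the article
TITAN_X = {"tmus": 192, "rops": 96}   # GM200-based GTX TITAN X (assumed published spec)


def fill_rates(chip, clock_ghz):
    """Return (texture GTexel/s, pixel GPixel/s) at the given core clock."""
    return chip["tmus"] * clock_ghz, chip["rops"] * clock_ghz


def uplift_percent(new, old, clock_ghz=1.0):
    """Percentage gain of `new` over `old` at an identical, hypothetical clock."""
    new_tex, new_pix = fill_rates(new, clock_ghz)
    old_tex, old_pix = fill_rates(old, clock_ghz)
    return (new_tex / old_tex - 1) * 100, (new_pix / old_pix - 1) * 100


tex_gain, pix_gain = uplift_percent(GP104, TITAN_X)
print(f"texture: +{tex_gain:.0f}%, pixel: +{pix_gain:.0f}%")  # texture: +33%, pixel: +33%
```

Real-world fill-rates would also depend on the actual clocks, which the rumor does not give.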

The GP100 silicon physically features 6,144 CUDA cores, 384 TMUs, and 192 ROPs. On the X80 Ti, you'll get 5,120 CUDA cores, 320 TMUs, 160 ROPs, and a 512-bit wide GDDR5X memory interface holding 8 GB of memory, with a bandwidth of 512 GB/s. The X80 TITAN, on the other hand, features all the CUDA cores, TMUs, and ROPs present on the silicon, plus a 4096-bit wide HBM2 memory interface holding 16 GB of memory, at a scorching 1 TB/s of memory bandwidth. The X80 Ti and the X80 TITAN double the pixel and texture fill-rates of the GTX 980 Ti and GTX TITAN X, respectively.
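Peak memory bandwidth equals bus width in bytes times the effective per-pin data rate, so the quoted figures imply a per-pin rate for each card. The sketch below simply inverts that relationship; it assumes "1 TB/s" means 1,024 GB/s, and the helper name `per_pin_rate_gbps` is hypothetical.

```python
def per_pin_rate_gbps(bandwidth_gb_s, bus_width_bits):
    """Effective data rate per pin (Gb/s) implied by a peak bandwidth (GB/s).

    bandwidth = (bus_width_bits / 8) * rate  =>  rate = bandwidth * 8 / bus_width_bits
    """
    return bandwidth_gb_s * 8 / bus_width_bits


x80_ti = per_pin_rate_gbps(512, 512)        # 512-bit GDDR5X at 512 GB/s
x80_titan = per_pin_rate_gbps(1024, 4096)   # 4096-bit HBM2 at ~1 TB/s (assumed 1,024 GB/s)

print(f"X80 Ti: {x80_ti:.1f} Gb/s per pin")        # X80 Ti: 8.0 Gb/s per pin
print(f"X80 TITAN: {x80_titan:.1f} Gb/s per pin")  # X80 TITAN: 2.0 Gb/s per pin
```

The contrast reflects the packaging difference described earlier: GDDR5X reaches its bandwidth with a narrower bus and fast pins, while HBM2 relies on a very wide, slower interface to stacks sitting on the package.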

Source:

VideoCardz

180 Comments on NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

As for the 8 GB part, it is overkill in most cases, but going beyond 4 GB itself is not. It just means that if there is a need for more than 4 GB, we will still be A-OK. I do agree it's overkill, but I would say better safe than sorry.

People who think future titles and 4K gaming are realistic with 4 GB are wrong. Actually, if people thought 4 GB was enough for 1080p gaming, there wouldn't be people still complaining about the 970 as a way to troll.

GeForce PX 4000

GeForce PX 5500 Ti

GeForce PX 6500

GeForce PX 7500

GeForce PX 8500 Ti

GeForce PX2 9500 (dual-GPU)

GeForce PX Titan

GeForce PX Titan M

The very first thing I thought of when I saw this was the Ti 4200 and Ti 4400.

But as others have said, keeping at the leading edge is f-cking expensive, and as soon as you catch up, the goal posts get f-cking moved... he he

As old as I am, e-peen still plays a part in my thinking... maybe it shouldn't, but at least I am honest enough to admit that it does. :)

Years ago, when Rolls-Royce were asked the power output of their cars, they had one simple reply... they used to say "adequate". I ain't so sure that "adequate" is good enough now, though... :)

trog

But back on topic, I reckon 6 gigs is okay for the next generation of graphics cards... there will always be something higher for those with the desire and the cash for more.

In this day and age, market hype ain't that uncommon... he he... who the f-ck needs 4K anyway? 4K is market hype, maybe the single thing that drives it all. I would guess the next thing will be 8K... the whole game will start again then, including phones with 6-inch 8K screens... he he

I like smallish, controlled jumps with this tech malarkey... that way those who bought last year ain't that pissed off this year. I reckon it's all planned this way anyway... logically it has to be.

trog

PS: if one assumes the hardware market drives the software market, which I do, 6 gigs for future cards is about right... the games are made to work on mass-market hardware, not the other way around.

Lower system latency means faster rendering.

4K is not market hype; it literally looks a ton crisper than 1080p and 1440p. You can't sit here and say 4x the average resolution is "market hype"; it's the next hump in the road whether some people want to admit it or not. I got my TV for less than most large-format 4K monitors, so I took the dive knowing Maxwell performance isn't up to par, but with 4K I don't need any real amount of AA either, 2x at most in some areas depending on the game. That being said, I'd rather not have small incremental jumps in performance just because some people can't afford it or have to find a way to afford it. That's called stagnation, and nobody benefits from that. Just look at CPUs for a clear-cut example of why we don't need stagnation.
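The "4x" figure refers to pixel count. Assuming 4K means the 3840×2160 UHD resolution used by TVs and most monitors, a quick check in Python:

```python
# Pixel counts for common resolutions (assumption: 4K = 3840x2160 UHD).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixels(name):
    width, height = RESOLUTIONS[name]
    return width * height


for base in ("1080p", "1440p"):
    ratio = pixels("4K") / pixels(base)
    print(f"4K vs {base}: {ratio:.2f}x the pixels")
# 4K vs 1080p: 4.00x the pixels
# 4K vs 1440p: 2.25x the pixels
```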

Games are made for consoles, not for PCs, unless specified otherwise. That hardly happens, because the money is in the console market. That doesn't mean that shit ports won't drive up the amount of VRAM needed regardless of texture streaming. I agree, though: 6 GB for midrange next gen should be plenty, as I doubt 4K adoption will be great during the Pascal/Polaris generation. That'll be left to Volta.

I don't "like"/"want" 4K right now only because of 60 Hz, but that is another subject completely!

When 4K hits 144 Hz I will move up, but going from 144 Hz to 60 Hz hurts my eyes in a real way. 4K is amazing to look at, just don't move fast LOL

Besides the AMD red-fanboy claims about pricing, GP100 having both HBM and GDDR5(X) on that chart makes me a bit suspicious of how close to true those specs are. It could likely be the X80 Ti using HBM, but not coming out until later this year, like the current Ti was.

fudzilla.com/news/graphics/40261-nvidia-leak-suggests-rather-dull-pascal-gpu-naming-scheme

X means 10 in Roman numerals; it looks even more stupid when you write shit like GTX1080.

Nobody is copying from competitors, AMD does not have any cards named X80.

Though, finally! Compute's back, and we'll be able to have workhorses again for other things. :p

It would be interesting, though, to see whether the X80 and/or X80 Ti have GDDR5 or GDDR5X. I would like to see a comparison of those, overclocked, against an overclocked Titan with HBM2, just to see if there is a difference in performance from the RAM. Though I couldn't care less about RAM speed most of the time, since I don't even game at high resolutions. *sits in corner with 1080p 60 Hz screen*