Friday, March 18th 2016

NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

With the GeForce GTX 900 series, NVIDIA has exhausted its GeForce GTX nomenclature, according to a sensational scoop from the rumor mill. Instead of going with the GTX 1000 series that has one digit too many, the company is turning the page on the GeForce GTX brand altogether. The company's next-generation high-end graphics card series will be the GeForce X80 series. Based on the performance-segment "GP104" and high-end "GP100" chips, the GeForce X80 series will consist of the performance-segment GeForce X80, the high-end GeForce X80 Ti, and the enthusiast-segment GeForce X80 TITAN.

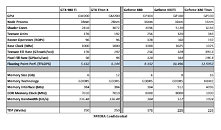

Based on the "Pascal" architecture, the GP104 silicon is expected to feature as many as 4,096 CUDA cores. It will also feature 256 TMUs, 128 ROPs, and a GDDR5X memory interface, with 384 GB/s memory bandwidth. 6 GB could be the standard memory amount. Its texture- and pixel-fillrates are rated to be 33% higher than those of the GM200-based GeForce GTX TITAN X. The GP104 chip will be built on the 16 nm FinFET process. The TDP of this chip is rated at 175W.

Moving on, the GP100 is a whole different beast. It's built on the same 16 nm FinFET process as the GP104, and its TDP is rated at 225W. A unique feature of this silicon is its memory controllers, which are rumored to support both GDDR5X and HBM2 memory interfaces. There could be two packages for the GP100 silicon, depending on the memory type. The GDDR5X package will look simpler, with a large pin-count to wire out to the external memory chips; while the HBM2 package will be larger, to house the HBM stacks on the package, much like AMD "Fiji." The GeForce X80 Ti and the X80 TITAN will hence be two significantly different products besides their CUDA core counts and memory amounts.
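As a rough sanity check on the 33% fillrate figure, peak fillrate scales with unit count times clock speed. The sketch below assumes both chips run at the same clock, which the rumor does not state. The GP104 unit counts are the rumored ones above, and the GTX TITAN X counts (192 TMUs, 96 ROPs) are that card's published specs. The helper name `fillrate_ratio` and the 1000 MHz placeholder clock are illustrative only.

```python
# GP104 figures are the rumored values from the article; GTX TITAN X (GM200)
# figures are that card's published unit counts.
GP104_RUMORED = {"tmus": 256, "rops": 128}
TITAN_X = {"tmus": 192, "rops": 96}


def fillrate_ratio(new_units, old_units, new_clock_mhz=1000.0, old_clock_mhz=1000.0):
    """Ratio of peak fillrates, where peak fillrate = units * clock.

    The default clocks are placeholders (an assumption): with equal clocks
    the ratio reduces to the ratio of unit counts.
    """
    return (new_units * new_clock_mhz) / (old_units * old_clock_mhz)


texture_gain = fillrate_ratio(GP104_RUMORED["tmus"], TITAN_X["tmus"])
pixel_gain = fillrate_ratio(GP104_RUMORED["rops"], TITAN_X["rops"])

print(f"texture: +{(texture_gain - 1) * 100:.0f}%")
print(f"pixel:   +{(pixel_gain - 1) * 100:.0f}%")
```

Under the equal-clock assumption, both ratios come out to 4/3, which lines up with the quoted 33%. Any real clock difference would shift the result.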

The GP100 silicon physically features 6,144 CUDA cores, 384 TMUs, and 192 ROPs. On the X80 Ti, you'll get 5,120 CUDA cores, 320 TMUs, 160 ROPs, and a 512-bit wide GDDR5X memory interface, holding 8 GB of memory, with a bandwidth of 512 GB/s. The X80 TITAN, on the other hand, features all the CUDA cores, TMUs, and ROPs present on the silicon, and adds a 4096-bit wide HBM2 memory interface, holding 16 GB of memory, at a scorching 1 TB/s memory bandwidth. Both the X80 Ti and the X80 TITAN double the pixel- and texture-fillrates of the GTX 980 Ti and GTX TITAN X, respectively.
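The bandwidth figures can be cross-checked against the bus widths, since memory bandwidth is bus width times per-pin data rate, divided by 8 bits per byte. The sketch below works backwards to the per-pin rate each rumored configuration would need. The function names are illustrative, and "1 TB/s" is read here as 1,024 GB/s, which is an assumption.

```python
def per_pin_gbps(bandwidth_gb_s, bus_width_bits):
    """Per-pin data rate (Gbps) needed to reach a bandwidth on a given bus."""
    return bandwidth_gb_s * 8 / bus_width_bits


def bandwidth_gb_s(bus_width_bits, pin_rate_gbps):
    """Memory bandwidth (GB/s) for a bus width and per-pin data rate."""
    return bus_width_bits * pin_rate_gbps / 8


# Rumored figures from the article; 1 TB/s is taken as 1024 GB/s (assumption).
x80_ti_rate = per_pin_gbps(512, 512)        # 512 GB/s over a 512-bit GDDR5X bus
x80_titan_rate = per_pin_gbps(1024, 4096)   # 1 TB/s over a 4096-bit HBM2 bus

print(f"X80 Ti:    {x80_ti_rate:.1f} Gbps per pin")
print(f"X80 TITAN: {x80_titan_rate:.1f} Gbps per pin")
```

That works out to 8 Gbps per pin for the GDDR5X card and 2 Gbps per pin for the HBM2 card, so the rumored numbers are at least internally consistent. The article gives no bus width for the GP104, so the same check cannot be run for its 384 GB/s figure without guessing one.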

Source:

VideoCardz

180 Comments on NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

:)

Edit: No man, it's coming back to me, they went down to x12xx, but those were integrated graphics, the dedicated GPUs started at x1300.

I wouldn't give any credence to these suggested specs.

Also not sure why you picked up on the GTX 680 as being notable, when that naming system began with GT200 and the GTX 280. (Or the GTS 150, depending on who you ask.)

While that's cool, it seems weird to have put so much time and money into the build-up of the "GeForce GTX" brand only to kill it off just because they need to figure out some different numbers to put in the name. It seems more likely to just decide on some new numbers, keep the decade old branding that everyone already recognizes, and move on.

which in reality means not all that much.. 80 fps instead of 60 fps or 40 fps instead of 30 fps..

my own view is that once you add in g-sync or free-sync anything much over 75 fps doesn't show any gains..

4K gaming for those that must have it will become a little more do-able but not by much.. life goes on..

affordable VR will also become a bit more do-able..

trog

damn, should have read other comments too.. :)

1) "That's so too super expensive - you must be fanboyz to buy it", or

2) "Nvidia crushed AMD, Team red is going bust lozers", or

3) some other alpha numerical variant with a possible 'Titan' slipped in.

And yes, I'm pushing this pointless 'news' piece to get to the 100 post mark. Come on everyone, chip in to make futility work harder.

maybe monitors need rating the same way.. PPI.. pixels per inch.. your 4K 48 inch TV makes sense to me but when i see 4K on a 17 inch laptop it just makes a nonsense of it..

its like the mega pixel race with still cameras.. for web viewing you dont need that many.. to make errr 48 inch prints you do though..

4K is 8 million pixels.. at what size point (or viewing distance) it simply becomes unnoticeable i haven't a clue but there must be one..

my 1080 24 inch monitor at my normal viewing distance looked okay to me.. my 1440 27 inch monitor at the same viewing distance still looks okay to me..

however quite what sticking my nose 12 inches away from a 48 inch TV would make of things i dont know.. :)

4K doesn't come free.. at a rough guess i would say it takes 4 x the gpu power to drive a game at 4K that 1080 does..

i would also guess that people view a 48 inch TV from a fair distance away.. pretty much like they do with large photo prints..

but unless viewing distances and monitor size are taken into account its all meaningless..

trog
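The PPI and pixel-count points in the comment above are easy to put numbers on. The sketch below assumes 16:9 panels throughout (3840x2160 for 4K, 2560x1440 for "1440", 1920x1080 for "1080"). The function names are illustrative, and viewing distance is not modelled at all.

```python
import math


def ppi(width_px, height_px, diagonal_in):
    """Pixels per inch: diagonal resolution divided by diagonal size."""
    return math.hypot(width_px, height_px) / diagonal_in


def pixel_count(width_px, height_px):
    """Total pixels the GPU has to render per frame."""
    return width_px * height_px


# Screens mentioned in the comment; 16:9 resolutions are an assumption.
screens = [
    ("4K, 48 inch TV", 3840, 2160, 48),
    ("4K, 17 inch laptop", 3840, 2160, 17),
    ("1440, 27 inch monitor", 2560, 1440, 27),
    ("1080, 24 inch monitor", 1920, 1080, 24),
]

for name, w, h, diag in screens:
    print(f"{name}: {ppi(w, h, diag):.1f} PPI, {pixel_count(w, h):,} pixels")

# Ratio of pixels per frame, 4K versus 1080
print(pixel_count(3840, 2160) / pixel_count(1920, 1080))
```

A 48 inch 4K TV and a 24 inch 1080 monitor land on the same pixel density, which fits the point that resolution alone says little without size and distance. The 4x figure holds for raw pixel count; actual GPU load will not scale exactly with it.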

I remember when 320 X 200 resolution was considered cool on my C64. I used Display List Interrupts (Raster Interrupts) using Machine Language to put more Sprites on screen at once than would have normally been possible for the hell of it. Rose colored glasses? Yes, but it was good times for us.