Friday, March 18th 2016

NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

With the GeForce GTX 900 series, NVIDIA has exhausted its GeForce GTX nomenclature, according to a sensational scoop from the rumor mill. Instead of going with the GTX 1000 series that has one digit too many, the company is turning the page on the GeForce GTX brand altogether. The company's next-generation high-end graphics card series will be the GeForce X80 series. Based on the performance-segment "GP104" and high-end "GP100" chips, the GeForce X80 series will consist of the performance-segment GeForce X80, the high-end GeForce X80 Ti, and the enthusiast-segment GeForce X80 TITAN.

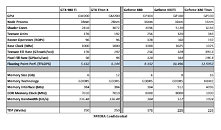

Based on the "Pascal" architecture, the GP104 silicon is expected to feature as many as 4,096 CUDA cores. It will also feature 256 TMUs, 128 ROPs, and a GDDR5X memory interface, with 384 GB/s memory bandwidth. 6 GB could be the standard memory amount. Its texture- and pixel-fillrates are rated to be 33% higher than those of the GM200-based GeForce GTX TITAN X. The GP104 chip will be built on the 16 nm FinFET process. The TDP of this chip is rated at 175W.

Moving on, the GP100 is a whole different beast. It's built on the same 16 nm FinFET process as the GP104, and its TDP is rated at 225W. A unique feature of this silicon is its memory controllers, which are rumored to support both GDDR5X and HBM2 memory interfaces. There could be two packages for the GP100 silicon, depending on the memory type. The GDDR5X package will look simpler, with a large pin-count to wire out to the external memory chips; while the HBM2 package will be larger, to house the HBM stacks on the package, much like AMD "Fiji." The GeForce X80 Ti and the X80 TITAN will hence be two significantly different products besides their CUDA core counts and memory amounts.
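As a rough sanity check on the 33% fill-rate figure quoted for the GP104, the short Python sketch below compares unit counts alone. It assumes both chips run at the same clock speed, which the rumor does not state, and it takes the GTX TITAN X's 192 TMUs and 96 ROPs from that card's public specifications rather than from this article. The function name `relative_gain_percent` is purely illustrative.

```python
# Rumored GP104 unit counts, as quoted in the article.
GP104_TMUS = 256
GP104_ROPS = 128

# GM200-based GeForce GTX TITAN X unit counts.
# Assumption: taken from the card's public specs, not from this article.
TITAN_X_TMUS = 192
TITAN_X_ROPS = 96


def relative_gain_percent(new_units: int, old_units: int) -> float:
    """Percentage gain in peak fill-rate, assuming identical clock speeds.

    Peak fill-rate scales as (units x clock), so at equal clocks the
    ratio of fill-rates reduces to the ratio of unit counts.
    """
    return (new_units / old_units - 1.0) * 100.0


texture_gain = relative_gain_percent(GP104_TMUS, TITAN_X_TMUS)
pixel_gain = relative_gain_percent(GP104_ROPS, TITAN_X_ROPS)

print(f"Texture fill-rate gain: {texture_gain:.1f}%")
print(f"Pixel fill-rate gain:   {pixel_gain:.1f}%")
```

Under that equal-clock assumption, both ratios come out to roughly one third, which lines up with the rumored figure. Any real clock-speed difference would shift the result.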

The GP100 silicon physically features 6,144 CUDA cores, 384 TMUs, and 192 ROPs. On the X80 Ti, you'll get 5,120 CUDA cores, 320 TMUs, 160 ROPs, and a 512-bit wide GDDR5X memory interface, holding 8 GB of memory, with a bandwidth of 512 GB/s. The X80 TITAN, on the other hand, features all the CUDA cores, TMUs, and ROPs present on the silicon, plus a 4096-bit wide HBM2 memory interface, holding 16 GB of memory, at a scorching 1 TB/s memory bandwidth. Both the X80 Ti and the X80 TITAN are said to double the pixel and texture fill-rates of the GTX 980 Ti and GTX TITAN X, respectively.
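The bandwidth figures above imply a per-pin data rate, since memory bandwidth is bus width times per-pin rate divided by eight. The Python sketch below works backwards from the rumored numbers and also checks how much of the GP100 silicon the X80 Ti would have enabled. It treats "1 TB/s" as 1,024 GB/s, which is an assumption, and the helper name `per_pin_rate_gbps` is illustrative only.

```python
from fractions import Fraction


def per_pin_rate_gbps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate in Gbit/s implied by total bandwidth and bus width.

    bandwidth (GB/s) = bus_width (bits) * rate (Gbit/s per pin) / 8
    """
    return bandwidth_gb_s * 8 / bus_width_bits


# Rumored X80 Ti: 512-bit GDDR5X at 512 GB/s.
x80_ti_rate = per_pin_rate_gbps(512, 512)

# Rumored X80 TITAN: 4096-bit HBM2 at "1 TB/s".
# Assumption: 1 TB/s is read as 1,024 GB/s.
x80_titan_rate = per_pin_rate_gbps(1024, 4096)

# Share of the full GP100 silicon enabled on the rumored X80 Ti.
enabled = {
    "CUDA cores": Fraction(5120, 6144),
    "TMUs": Fraction(320, 384),
    "ROPs": Fraction(160, 192),
}

print(f"X80 Ti implied rate:    {x80_ti_rate} Gbit/s per pin")
print(f"X80 TITAN implied rate: {x80_titan_rate} Gbit/s per pin")
for name, share in enabled.items():
    print(f"{name}: {share} of the full chip enabled")
```

The rumored X80 Ti figures work out to 8 Gbit/s per pin and the X80 TITAN figures to 2 Gbit/s per pin, while all three unit counts on the X80 Ti correspond to the same five-sixths of the full chip.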

Source:

VideoCardz

180 Comments on NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

As far as I know, the Diablo 2 update allows the game to run on modern PCs and operating systems (64-bit OSes), considering most people were playing it on Windows 9x back in the day.

8 years later my current end of the line intel cpu is running at 4.5 gig.. very similar to what my 8 year old intel chip could do.. and very similar to what the latest generation intel chips can do..

it does make one wonder just what would have happened if that chip had not been deliberately down clocked.. where would we be now.. he he

one thing is for sure.. intel have had a pretty easy 8 years.. :)

trog

No points for consistency Nvidia :P

(Yeah, I know there was a 100 series before the 200, but it was OEM-only rebrands.)

-leprechaun

-goblin

-grinch

-ipkiss

-lantern

-t.m.n.t.

-wazowski

-hulk

-kermit

-yoda

-shrek

.....

plenty of green characters to choose from....

i couldn't resist :D:D:D:D:D:D:D:D

What's in storage goes into system memory, into CPU cache, and into VRAM. Where do you think it fetches those textures from? If you run out of VRAM, your next fastest high-capacity tier is your system memory, followed by your actual storage, which is more of a non-volatile cache for memory.

Try playing a game that loads textures on the fly, like World of Warcraft or Diablo 3, on a traditional HDD and see what happens: stutter, stutter, stutter. When you run out of faster-access VRAM on the GPU, what do you think is going to happen? Stutter, stutter, stutter.

That's also where higher-bandwidth, lower-latency RAM helps, and it explains why memory speed drastically impacts APUs, as they don't have dedicated VRAM and rely on system memory.

Please edit posts instead of double posting... they don't like that 'round these parts. :p