Friday, March 18th 2016

NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

With the GeForce GTX 900 series, NVIDIA has exhausted its GeForce GTX nomenclature, according to a sensational scoop from the rumor mill. Instead of going with a GTX 1000 series, which has one digit too many, the company is turning the page on the GeForce GTX brand altogether. The company's next-generation high-end graphics card series will be the GeForce X80 series. Based on the performance-segment "GP104" and high-end "GP100" chips, the GeForce X80 series will consist of the performance-segment GeForce X80, the high-end GeForce X80 Ti, and the enthusiast-segment GeForce X80 TITAN.

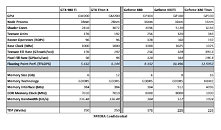

Based on the "Pascal" architecture, the GP104 silicon is expected to feature as many as 4,096 CUDA cores. It will also feature 256 TMUs, 128 ROPs, and a GDDR5X memory interface with 384 GB/s of memory bandwidth. 6 GB could be the standard memory amount. Its texture and pixel fill-rates are rated to be 33% higher than those of the GM200-based GeForce GTX TITAN X. The GP104 chip will be built on the 16 nm FinFET process, and its TDP is rated at 175W.

Moving on, the GP100 is a whole different beast. It's built on the same 16 nm FinFET process as the GP104, and its TDP is rated at 225W. A unique feature of this silicon is its memory controllers, which are rumored to support both GDDR5X and HBM2 memory interfaces. There could be two packages for the GP100 silicon, depending on the memory type. The GDDR5X package will look simpler, with a large pin-count to wire out to the external memory chips, while the HBM2 package will be larger, to house the HBM stacks on the package, much like AMD "Fiji." The GeForce X80 Ti and the X80 TITAN will hence be two significantly different products, beyond just their CUDA core counts and memory amounts.
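Where does the 33% figure come from? Fill rate is roughly the number of units multiplied by the core clock, so at identical clocks the clock term cancels and the gain is just the ratio of unit counts. The sketch below assumes equal clocks (the rumor gives none) and uses the shipping GTX TITAN X's 192 TMUs and 96 ROPs as the GM200 baseline; the helper name `fill_rate_gain` is illustrative only.

```python
# Fill rate ~ (number of TMUs or ROPs) x (core clock).
# ASSUMPTION: both chips run at the same clock, so only unit counts matter.

def fill_rate_gain(new_units: int, old_units: int) -> float:
    """Percentage fill-rate gain of new_units over old_units at equal clocks."""
    return (new_units / old_units - 1.0) * 100.0

gp104 = {"TMUs": 256, "ROPs": 128}  # rumored GP104 figures from the article
gm200 = {"TMUs": 192, "ROPs": 96}   # GM200 as configured on the GTX TITAN X

for unit in ("TMUs", "ROPs"):
    gain = fill_rate_gain(gp104[unit], gm200[unit])
    print(f"{unit}: +{gain:.0f}%")  # both ratios are 4/3, i.e. about +33%
```

Any clock difference between the two chips would scale the result directly, so the 33% should be read as a per-clock figure.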

The GP100 silicon physically features 6,144 CUDA cores, 384 TMUs, and 192 ROPs. On the X80 Ti, you'll get 5,120 CUDA cores, 320 TMUs, 160 ROPs, and a 512-bit wide GDDR5X memory interface holding 8 GB of memory, with a bandwidth of 512 GB/s. The X80 TITAN, on the other hand, features all the CUDA cores, TMUs, and ROPs present on the silicon, plus a 4096-bit wide HBM2 memory interface holding 16 GB of memory, at a scorching 1 TB/s of memory bandwidth. The X80 Ti and the X80 TITAN double the pixel and texture fill-rates of the GTX 980 Ti and GTX TITAN X, respectively.
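The bandwidth figures follow from bus width and per-pin data rate: peak bandwidth in GB/s is the bus width in bits times the per-pin rate in Gbit/s, divided by 8 bits per byte. The per-pin rates below (8 Gbps for GDDR5X, 2 Gbps for HBM2) are assumptions, not numbers from the rumor; they are simply the rates that reproduce the quoted totals.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s = bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8

# ASSUMED per-pin data rates; the article only states the resulting bandwidth.
x80_ti = peak_bandwidth_gbs(512, 8.0)      # 512-bit GDDR5X
x80_titan = peak_bandwidth_gbs(4096, 2.0)  # 4096-bit HBM2, i.e. roughly 1 TB/s

print(f"X80 Ti:    {x80_ti:.0f} GB/s")
print(f"X80 TITAN: {x80_titan:.0f} GB/s")
```

The very wide HBM2 bus is what lets a modest per-pin rate deliver about twice the bandwidth of the much faster-clocked GDDR5X interface.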

Source:

VideoCardz

180 Comments on NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

That chart surely debunks this as FUD, doesn't it? I mean, find one difference:

And clearly, Fury X is, to quote the original statement you had no problem with "so far behind".

Firstly, you're looking at a benchmark posted on WCCF, which is known to spread all kinds of FUD. So yeah, calling that right there. Second, the single-card results don't even match up, which means the others won't either, and sites like [H]ard, Guru, and TechSpot also support that fact.

Third, you didn't address the initial post properly. I said, and I quote, "you keep spamming this like anybody gives a shit," because anybody worth their salt looking at these threads knows the card lineup AND knows the picture you keep posting constantly is in fact FUD.

MSI AB and eVGA OSD, not with each card, that was running single. I only recently put the second card back in my machine, and Blops 3 came out a while ago.

TPU shows the Fury X at 100 and the 980 Ti at 102 without exclusions, while the FUD shows 97% for both. Now, let's go out on a limb here and say exclusions are OK and that the 102/102 and 97/97 are matching, sure, but what about the Titan X? Can you not count, chief? The Titan X is 4% higher than the matching figure, and 6% higher than the actual Fury X figure. Oh, and those FUD numbers also differ from the above-mentioned CrossFire benchmarks, or do I need to quote those for you so you know what to Google? I'm not doing the work for you. And it still doesn't refute my original statement. It's not my opinion; you've been ignored practically every time you've posted that chart, until now.

The TPU site shows both the 980 Ti and Fury X at 102% when "we didn't get a penny from nVidia (c) Project Cars" is excluded. Oh, and it's also excluded in the DGLee review (which lists exactly which games it tested, and "we didn't get a penny from nVidia" clearly isn't one of them), with the same results too.

Now, Titanium X (single card vs. single cards) is merely 4% faster than Fury X on the TPU site and 3% faster in the DGLee review, which is well within error margins, especially taking into account that the set of tested games is actually different.

Now, the fact that CrossFire scales better than SLI should be known to anyone who visits tech review sites regularly (unless most of their time is spent sharing their valuable opinion on who gives a flying f*ck about what), so it doesn't look even remotely suspicious.
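"Scales better" can be made concrete as scaling efficiency: the multi-card frame rate divided by what perfect linear scaling would give (number of cards times the single-card frame rate). The frame rates below are hypothetical, chosen only to illustrate the calculation, and do not come from any review cited in this thread.

```python
def scaling_efficiency(single_fps: float, multi_fps: float, cards: int) -> float:
    """Fraction of ideal linear scaling achieved: multi_fps / (cards * single_fps)."""
    if cards < 1 or single_fps <= 0:
        raise ValueError("need at least one card and a positive single-card frame rate")
    return multi_fps / (cards * single_fps)

# HYPOTHETICAL frame rates for two different two-card setups.
print(f"Setup A: {scaling_efficiency(40.0, 76.0, 2):.0%} of ideal")
print(f"Setup B: {scaling_efficiency(40.0, 68.0, 2):.0%} of ideal")
```

Two setups with identical single-card performance can end up well apart once a second card is added, which is why multi-card charts separate cards that look tied in single-card results.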

PS

Good Lord, just realized what you meant by "ahaaaa, on one site both are 97% while on the other they are at 102%". Jeez. Think about what 100% is in both cases.
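The point about "what 100% is" can be shown with a small sketch: relative-performance charts divide every card's score by a chosen baseline card, so the absolute percentages change with the baseline while the ratio between any two cards does not. The frame rates here are hypothetical and the helper `relative_to` is illustrative only.

```python
def relative_to(scores: dict, baseline: str) -> dict:
    """Express every card's score as a percentage of the baseline card's score."""
    base = scores[baseline]
    return {card: 100.0 * value / base for card, value in scores.items()}

# HYPOTHETICAL average frame rates, for illustration only.
scores = {"Fury X": 60.0, "GTX 980 Ti": 60.0, "GTX TITAN X": 62.4}

for baseline in ("GTX TITAN X", "Fury X"):
    chart = relative_to(scores, baseline)
    ratio = chart["GTX TITAN X"] / chart["Fury X"]
    print(baseline, {k: round(v, 1) for k, v in chart.items()}, f"TITAN X / Fury X = {ratio:.2f}")
```

With the TITAN X as 100%, the other two cards sit near 96%; with the Fury X as 100%, the TITAN X sits at 104%. The 4% gap is the same in both charts.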

Take it to PM, guys... if I wanted to see/read/hear incessant bitching, I would go home to your wives. o_O :laugh:

BOOM.

:laugh::laugh:

Maybe that will work better than my plea earlier.

With that, across their testing, the Titan X is 3% faster than the 980Ti and Fury X with a single card...

There is only so much information you can squeeze into a bar. In DGLee's chart I can see that, say, Fury X from CrossFire onward is faster than Titanium X in Alien: Isolation, Metro 2033, or Metro: Last Light.

Just saying that with a single card, it's nearly impossible to tell. As you use more cards, you can see more of a difference between games. In the end, it's a terrible way to discern that data from the graph, particularly with a single card.

wccftech.com/amd-radeon-r9-fury-quad-crossfire-nvidia-geforce-gtx-titan-quad-sli-uhd-benchmarks/

... That link shows the different resolutions, not games (whereas the TPU reviews DO show individual game performance).

Again, with a single card it's nearly impossible to tell, but as the number of cards goes up, you can tell.

etc.

I posted the link earlier, post number 125 in this thread. FFS, the bloody results MATCH THE RESULTS OF TPU...

Just an example: I have a 4GB card. When I run Rise of the Tomb Raider, can my GPU handle it? Yes. Can my amount of VRAM handle it? No. When I run the benchmark, my FPS drops from 50 to 1 depending on which new sections need to be loaded, not to mention the texture pop-in. I suppose there are people who haven't run into these problems because they can always afford the best; perhaps they will stay unconvinced, since they rarely or never run into the problem. It's exactly the same problem that Linus had with Shadow of Mordor.

Perhaps some of you will remember that when Battlefield 4 launched, most people who still had 2GB VRAM cards complained about a lot of stuttering and weird graphical pop-in issues, etc. When they replaced them with newly released nVidia GFX cards with 4GB of VRAM, no more complaints. How weird, huh?

I hope this video will also help convince those who weren't convinced, and those who had doubts, that VRAM MATTERS!

P.S.

I still firmly believe that 3GB of VRAM is more than enough for running a game at 1080p with Ultra (Max) details and SMAA anti-aliasing. So far there is no game I've had issues with...