Friday, March 18th 2016

NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

With the GeForce GTX 900 series, NVIDIA has exhausted its GeForce GTX nomenclature, according to a sensational scoop from the rumor mill. Instead of going with the GTX 1000 series that has one digit too many, the company is turning the page on the GeForce GTX brand altogether. The company's next-generation high-end graphics card series will be the GeForce X80 series. Based on the performance-segment "GP104" and high-end "GP100" chips, the GeForce X80 series will consist of the performance-segment GeForce X80, the high-end GeForce X80 Ti, and the enthusiast-segment GeForce X80 TITAN.

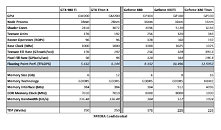

Based on the "Pascal" architecture, the GP104 silicon is expected to feature as many as 4,096 CUDA cores. It will also feature 256 TMUs, 128 ROPs, and a GDDR5X memory interface with 384 GB/s of memory bandwidth. 6 GB could be the standard memory amount. Its texture and pixel fill-rates are rated to be 33% higher than those of the GM200-based GeForce GTX TITAN X. The GP104 chip will be built on the 16 nm FinFET process, and its TDP is rated at 175 W.

Moving on, the GP100 is a whole different beast. It's built on the same 16 nm FinFET process as the GP104, and its TDP is rated at 225 W. A unique feature of this silicon is its memory controllers, which are rumored to support both GDDR5X and HBM2 memory interfaces. There could be two packages for the GP100 silicon, depending on the memory type. The GDDR5X package will look simpler, with a large pin count to wire out to the external memory chips, while the HBM2 package will be larger, to house the HBM stacks on the package, much like AMD "Fiji." The GeForce X80 Ti and the X80 TITAN will hence be significantly different products, even beyond their CUDA core counts and memory amounts.
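The 33% fill-rate figure for GP104 is consistent with simple unit counting. Texture fill rate is TMUs multiplied by core clock, and pixel fill rate is ROPs multiplied by core clock, so at equal clocks the ratio comes down to unit counts. The sketch below assumes equal clocks (the rumor gives none) and uses the GTX TITAN X's shipping GM200 configuration of 192 TMUs and 96 ROPs, which is not stated in the article.

```python
# Fill rate = (unit count) x (core clock). Assuming equal clocks, the ratio of
# two chips' fill rates reduces to the ratio of their unit counts.

# Rumored GP104 figures from the article.
GP104 = {"tmus": 256, "rops": 128}
# GM200 as shipped on the GeForce GTX TITAN X (public spec, not from the article).
TITAN_X = {"tmus": 192, "rops": 96}


def fill_rate_gain(new: dict, old: dict, unit: str) -> float:
    """Percentage gain of `new` over `old` for one unit type, assuming equal clocks."""
    return (new[unit] / old[unit] - 1.0) * 100.0


texture_gain = fill_rate_gain(GP104, TITAN_X, "tmus")  # TMUs drive texture fill rate
pixel_gain = fill_rate_gain(GP104, TITAN_X, "rops")    # ROPs drive pixel fill rate
print(f"texture: +{texture_gain:.1f}%, pixel: +{pixel_gain:.1f}%")
```

Both ratios work out to 4/3, so the rumored numbers hang together only if GP104 clocks no lower than GM200; any clock advantage would push the gain above 33%.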

The GP100 silicon physically features 6,144 CUDA cores, 384 TMUs, and 192 ROPs. On the X80 Ti, you'll get 5,120 CUDA cores, 320 TMUs, 160 ROPs, and a 512-bit wide GDDR5X memory interface holding 8 GB of memory, with a bandwidth of 512 GB/s. The X80 TITAN, on the other hand, enables all the CUDA cores, TMUs, and ROPs present on the silicon, plus a 4,096-bit wide HBM2 memory interface holding 16 GB of memory, at a scorching 1 TB/s of memory bandwidth. Both the X80 Ti and the X80 TITAN double the pixel and texture fill-rates of the GTX 980 Ti and GTX TITAN X, respectively.
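The quoted bandwidths follow from the standard relation: peak bandwidth in GB/s equals bus width in bits times per-pin data rate in Gbps, divided by 8. The article does not give per-pin rates, so the 8 Gbps (GDDR5X) and 2 Gbps (HBM2) values below are assumptions, chosen as the rates that reproduce the rumored figures.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, per_pin_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: total bits per second across the bus, / 8 for bytes."""
    return bus_width_bits * per_pin_gbps / 8


# Assumed per-pin rates (not stated in the article).
x80_ti = peak_bandwidth_gb_s(512, 8.0)      # 512-bit GDDR5X at an assumed 8 Gbps/pin
x80_titan = peak_bandwidth_gb_s(4096, 2.0)  # 4,096-bit HBM2 at an assumed 2 Gbps/pin
print(x80_ti, x80_titan)  # 512.0 1024.0
```

The contrast is the point of HBM2: a bus eight times wider reaches double the bandwidth at a quarter of the per-pin rate, which is why the HBM2 package has to carry the memory stacks on the package itself.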

Source:

VideoCardz

180 Comments on NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

That's OK. I'm on an old-school gaming kick again, so most of my time lately is spent on my 98 box. It'll tide me over, and 2x Titan X under water is more than enough to max the frame cap in Rocket League, even with INI mods.

2.160/1.080 = 2

2*2 = 4

Good guess. But it only takes one glance at the numbers to see it is 4x the pixels :cool:
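The arithmetic generalizes: 4K UHD (3840x2160) doubles both dimensions of 1080p (1920x1080), so the pixel count grows by 2 x 2. A quick check, using the standard widths for both resolutions:

```python
def pixel_count(width: int, height: int) -> int:
    """Total pixels on screen for a given resolution."""
    return width * height


uhd = pixel_count(3840, 2160)  # 4K UHD
fhd = pixel_count(1920, 1080)  # 1080p
# Each axis is exactly 2x larger, so the area grows by 2 * 2 = 4x.
print(uhd, fhd, uhd // fhd)  # 8294400 2073600 4
```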

But the 16:9 aspect ratio is so yesteryear. I can't believe the adoption of 21:9 is taking so long that even tech-savvy people here on TPU still don't have it on their radar.

I'd prefer 3440x1440 over 3840x2160 any day. Can't wait for the arrival of 1440p ultrawide 144 Hz HDR. 4K is dogshit and needs 67.44% more horsepower, but anyone foolish enough to believe all that marketing crap deserves to pay the price...
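The 67.44% figure checks out if "horsepower" is read as pixel count. That is only a rough proxy for GPU load, since frame rates don't scale strictly linearly with resolution.

```python
uhd = 3840 * 2160     # 4K UHD: 8,294,400 pixels
uw1440 = 3440 * 1440  # 1440p ultrawide: 4,953,600 pixels

# Extra pixels 4K must render per frame, relative to 3440x1440.
extra = (uhd / uw1440 - 1) * 100
print(f"{extra:.2f}% more pixels")  # 67.44% more pixels
```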

However, if you use a hack, it looks like this :)))

I have been experimenting with turning it off at 1440. Running the Heaven or Valley benchmarks and looking very carefully is a good test to use. 8x AA creates a blurry image; turning it off creates a sharper, more detailed image. Can I see jaggies? Maybe in certain situations, but as a general rule the image just looks sharper, with better-defined edges.

But I am currently running my games at 1440 with AA either off or very low. I am in a power-saving mode, which is why I don't just run everything balls out on ultra settings.

Again, this all comes down to screen size and viewing distance. I know for a fact it does with high-quality still images, and so far I can't see any reason the same principle should not apply to moving images.

Currently I am lowering settings to see just what I can get away with before it noticeably affects gameplay visuals.

trog

Plus, I have not gamed on my rig for the past several months. Been busy playing the Wii U instead. :p

Yep, 24/7 to the WALL! Plus, I abuse the crap out of GPUs. I have a dead GTX Titan to my name (good thing for the EVGA warranty). I do more than just fold, though. My main rig is my powerhouse jack of all trades: folding, BOINCing, 3D rendering, RAW photos, etc. That said, I did just get two old IBM servers. Woot, now to get some RAM and SCSI HDDs for them and get them working.

Fury X is a good card: it pretty much equals (or exceeds) an out-of-the-box 980 Ti. It just doesn't match it when overclocked, and as a lot of people point out, most non-techy folk don't overclock.

Wow! That's a metaphysical state of knowing you're unsure about even knowing if you want to start thinking about it. I bow to your supreme state of mental awareness. :respect:

Funny coincidence: what you see on screen at 4K is roughly the area of the minimap in the original:

One chip is cruising (it can go faster); the other chip is running close to balls out (it can't go faster) trying to keep up.

This is also why the winning team's products (at stock) will use less power, generate less heat, and make less noise. It's a three-way win.

In a way it's a rigged race, with one team running just fast enough to stay in the lead.

The winning teams currently are Intel and NVIDIA. Not a lot else to say.

trog

You do know that AMD processors overclock by a larger percentage on average, right? Also, non-Fury products (read: Rx series) tend to overclock quite well... there are exceptions on both sides. ;)

But there are other reasons why performance, power use, and overclocking differ.

Creative, that thinking...I will give you that!

www.techpowerup.com/207265/nvidia-breathes-life-into-kepler-with-the-gk210-silicon.html

That sacrifice on Maxwell allowed the clocks to go higher with a lower power draw. Fiji kept a relevant chunk of compute, and in the next process shrink that may turn out quite well for them.

I had a 7970 that clocked at 1300 MHz (huge back then, but a lot of them did it). My Kepler card didn't clock so well (compute is hot to clock), but with Maxwell and lower compute, the clocks are good. The base design of Fiji, which Polaris will be based on (?), has a good pedigree for DX12. If Nvidia drop the ball (highly unlikely), AMD could take a huge lead in next-gen gfx.

As it is, I don't believe Nvidia will drop the ball. I think they'll bring their 'A' game with something quite impressive. At the same time, I think AMD will also be running with something powerful. We can only wait and see.

FTR - there are many forums out there that believe AMD are worth something.

The team that is struggling to keep up doesn't have this luxury. In simple terms, it has to try harder, and trying harder means higher power usage, more heat, and more noise.

From a CPU point of view, AMD have simply given up trying to compete. They are still trying with their GPUs.

When you make something for mass sale, it needs a 5 to 10 percent safety margin to avoid too many returns.

Back in the day, when I moved from an AMD CPU to an Intel, I bought a just-released Intel chip. It came clocked at 3 GHz, and at 3 GHz it pissed all over the best AMD chip. Within a few days I was benching it at 4.5 GHz without much trouble.

A pretty good example of what I am talking about: a 50% overclock is only possible if the chip comes underclocked in the first place.
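The 50% figure is just the clock gain relative to stock, using the 3 GHz and 4.5 GHz numbers from the post:

```python
stock_ghz = 3.0      # clock the chip shipped at
overclock_ghz = 4.5  # clock it was later benched at

# Overclock headroom as a percentage of the stock clock.
gain = (overclock_ghz / stock_ghz - 1) * 100
print(f"{gain:.0f}% overclock")  # 50% overclock
```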

trog

wccftech.com/amd-radeon-r9-fury-quad-crossfire-nvidia-geforce-gtx-titan-quad-sli-uhd-benchmarks/