Wednesday, April 20th 2016

AMD's GPU Roadmap for 2016-18 Detailed

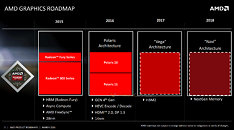

AMD has finalized its GPU architecture roadmap for 2016 through 2018, which the company first detailed at its Capsaicin event in mid-March 2016. The upcoming "Polaris" architecture makes major leaps over the current generation, such as a 2.5-times performance-per-Watt uplift, and drives the company's first 14 nanometer GPUs; its presence in the high-end graphics space, however, will be limited. Polaris is rumored to drive graphics for Sony's upcoming 4K Ultra HD PlayStation, and as discrete GPUs, it will feature in only two chips: Polaris 10 "Ellesmere" and Polaris 11 "Baffin."

"Polaris" introduces several new features, such as hardware-accelerated HEVC (H.265) decode and encode, and new display output standards such as DisplayPort 1.3 and HDMI 2.0. However, since neither Polaris 10 nor Polaris 11 is really a "big" enthusiast chip that succeeds the current "Fiji" silicon, both will likely make do with current GDDR5/GDDR5X memory standards. That's not to say that Polaris 10 won't disrupt current performance-through-enthusiast lineups, or even have the chops to take on NVIDIA's GP104. First-generation HBM, as used on "Fiji," limits the total memory amount to 4 GB over a 4096-bit path. Enthusiasts will have to wait until early 2017 for the introduction of the big chip that succeeds "Fiji," which will not only leverage HBM2 to serve up vast amounts of super-fast memory, but also feature a slight architectural uplift. 2018 will see the introduction of its successor, codenamed "Navi," which features an even faster memory interface.
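The 4 GB and 4096-bit figures for first-generation HBM can be reproduced with some quick arithmetic. The sketch below is only illustrative: the per-stack numbers (four stacks, 1 GB and 1024 bits per stack, roughly 1 Gbps per pin) are assumptions based on the publicly known "Fiji" configuration and are not stated in the roadmap itself, and the function name is made up for this example.

```python
# Assumed first-generation HBM figures for "Fiji" (not taken from the roadmap):
STACKS = 4                 # HBM stacks on the package
GB_PER_STACK = 1           # capacity of each stack, in GB
BUS_BITS_PER_STACK = 1024  # interface width of each stack, in bits
GBPS_PER_PIN = 1.0         # per-pin data rate, in Gbit/s


def hbm_totals(stacks, gb_per_stack, bus_bits_per_stack, gbps_per_pin):
    """Return (capacity in GB, bus width in bits, bandwidth in GB/s)."""
    capacity_gb = stacks * gb_per_stack
    bus_width_bits = stacks * bus_bits_per_stack
    # Each pin moves gbps_per_pin Gbit/s; divide by 8 to convert bits to bytes.
    bandwidth_gb_s = bus_width_bits * gbps_per_pin / 8
    return capacity_gb, bus_width_bits, bandwidth_gb_s


capacity, width, bandwidth = hbm_totals(
    STACKS, GB_PER_STACK, BUS_BITS_PER_STACK, GBPS_PER_PIN
)
print(f"{capacity} GB over a {width}-bit path, about {bandwidth:.0f} GB/s")
```

Under these assumptions the capacity ceiling comes from the stack count and per-stack density, which is why a larger memory pool has to wait for HBM2 rather than a wider bus.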

Source: VideoCardz

43 Comments on AMD's GPU Roadmap for 2016-18 Detailed

I'm surprised they aren't calling it HDMI 2.0a (supports FreeSync) too. I guess they want to get the message out that Polaris will have HDMI 2 because Fiji not having it was pretty disappointing.

HDMI plans on officially supporting Dynamic-Sync in HDMI 2.0b.

I feel sorry for those peeps driving 4K screens and wanting to game something on them with some reasonable FPS...

I'm glad AMD is going to address this. When I was buying my last video card I was looking for one to drive a 4K UHD Smart TV which only had HDMI 2.0 inputs. Therefore AMD was taken out of the running automatically, and I would have preferred to have had a viable choice between AMD and nVidia rather than just nVidia.

and for the terrible car analogy bollocks.

Essentially, the 295X2 is the fastest card when all conditions are met.

But those conditions aren't met enough of the time (same for sli).

Single card FTW.

Truly, more power isn't the issue... the space and cold air are... the hotter it gets, the more throttling it can cause, thus getting out of sync and stuttering... yet another struggle.

I really hope that DX12 will do something about that... ditch the old AFR as such... the next hope is NVLink... if nvidia leaves one link for adding SLI using that connection, thus making it possible to access the neighboring RAM pool without a latency tax, that would also be something.

All this needs just good coders... the hardware is there as usual... we lack time.