Thursday, July 14th 2016

Futuremark Releases 3DMark Time Spy DirectX 12 Benchmark

Futuremark released the latest addition to the 3DMark benchmark suite, the new "Time Spy" benchmark and stress-test. All existing 3DMark Basic and Advanced users have limited access to "Time Spy," while existing 3DMark Advanced users have the option of unlocking its full feature-set with an upgrade key priced at US $9.99. The price of 3DMark Advanced for new users has been revised from $24.99 to $29.99, as new 3DMark Advanced purchases include the fully-unlocked "Time Spy." Futuremark also announced limited-period offers that last until 23rd July, in which the "Time Spy" upgrade key for existing 3DMark Advanced users can be had for $4.99, and the 3DMark Advanced Edition (minus "Time Spy") for $9.99.

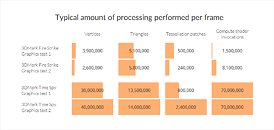

Futuremark 3DMark "Time Spy" has been developed with inputs from AMD, NVIDIA, Intel, and Microsoft, and takes advantage of the new DirectX 12 API. For this reason, the test requires Windows 10. The test almost exponentially increases the 3D processing load over "Fire Strike," by leveraging the low-overhead API features of DirectX 12, to present a graphically intense 3D test-scene that can make any gaming/enthusiast PC of today break a sweat. It can also make use of several beyond-4K display resolutions.

DOWNLOAD: 3DMark with TimeSpy v2.1.2852

91 Comments on Futuremark Releases 3DMark Time Spy DirectX 12 Benchmark

Not too bad.

7100 on GPU for mine. Noticed a few others with a GTX 1080 got 7800, but their CPU is clocked a bit higher, so it's either that or some weird little setting they have set differently than me.

www.3dmark.com/spy/17592

Edit: just reran the test using nearly the same clock the other guy did and got 7650.

www.3dmark.com/3dm/13220015?

3DMark Time Spy Graphics "text" 2

This ain't your granddaddy's text!

On the other hand, this is good news. If the dynamic load balancing that Nvidia cooked up there works, it means that developers will have NO excuse not to use async in their games, which will mean at least 5-10% better performance in ALL future titles.

There's a massive philosophical and scientific misunderstanding about asynchronous compute and async hardware. ACEs are proprietary to AMD and only help in DX12 async settings. Nvidia's design works on all APIs at its near optimum. It's as if in a 10K road race, Nvidia always starts the race fast and keeps that speed up. AMD, on the other hand, starts slower and without shoes on (in a pre-DX12 race) but gets into a good stride as the race progresses, starting to catch up on Nvidia. In a DX12 race, AMD actually starts the race with good shoes and, depending on the 'terrain', might start faster.

ACEs are AMD's DX12 async running shoes. Nvidia hasn't changed shoes because they still work.

The reason we see no Pascal gains in other apps using async compute (AotS in particular) is probably because they blatantly blacklist it on Nvidia drivers, even Pascal ones. Why? Few people are running Pascal, and it's easier to read a GPU's brand than to properly detect a GPU's abilities. That said, it's lazy and should stop if that's really what's going on.

Think i need a new System :fear::laugh:

My 2x GTX 970 is about as fast as a single R9 Fury X. Yay for NVIDIA's async compute illiteracy.

www.3dmark.com/3dm/13200518

Techreport wrote some interesting things regarding this in their 1080-review.

techreport.com/review/30281/nvidia-geforce-gtx-1080-graphics-card-reviewed/2

GTX 980 Ti: 2816 cores, 5632 GFlop/s

Fury X: 4096 cores, 8602 GFlop/s

(The relation is similar with other comparable products with AMD vs. Nvidia)

Nvidia is getting the same performance from far fewer resources using a way more advanced scheduler. In many cases their scheduler has more than 95% computational utilization, and since the primary purpose of async shaders is to utilize idle resources for different tasks, there is really very little left to use for compute (which mostly utilizes the same resources as rendering). Multiple queues are not overhead-free either, so in order for them to have any purpose there has to be a significant performance gain. This is basically why AotS gave up on Nvidia hardware and just disabled the feature, and their game was fine-tuned for AMD in the first place.

It has very little to do with Direct3D 11 vs 12. This benchmark proves Nvidia can utilize async shaders, ending the lie about lacking hardware features once and for all.

AMD is drawing larger benefits because they have more idle resources. Remember e.g. Fury X has 53% more Flop/s than 980 Ti, so there is a lot to use.

This benchmark also ends the myth that Nvidia is less fit for Direct3D 12.
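For anyone wanting to check the GFlop/s figures and the 53% claim quoted above, here is a rough sketch of the arithmetic. It assumes the usual peak FP32 formula (shader cores x 2 operations per clock for a fused multiply-add x clock speed) and assumes reference clocks of 1000 MHz for the GTX 980 Ti and 1050 MHz for the Fury X. Neither clock is stated in the comment, and the function name is made up for illustration.

```python
def peak_gflops(shader_cores: int, clock_mhz: float, ops_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput in GFlop/s.

    Assumes each shader core retires one fused multiply-add
    (counted as 2 floating-point operations) per clock.
    """
    return shader_cores * ops_per_clock * clock_mhz / 1000.0


# Core counts come from the comment; clocks are assumed reference clocks.
gtx_980_ti = peak_gflops(2816, 1000)  # assumed 1000 MHz
fury_x = peak_gflops(4096, 1050)      # assumed 1050 MHz

# Relative advantage of Fury X over GTX 980 Ti in theoretical throughput.
advantage_pct = (fury_x / gtx_980_ti - 1) * 100

print(f"GTX 980 Ti: {gtx_980_ti:.0f} GFlop/s")
print(f"Fury X:     {fury_x:.0f} GFlop/s")
print(f"Fury X advantage: {advantage_pct:.0f}%")
```

These are theoretical peaks only. They say nothing about how much of that throughput a given scheduler actually keeps busy, which is the point the comment is arguing about.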

X58 just won't die or give up; darn solid platform. I mean, my CPU has been clocked at 4 GHz for almost 4 years now and it still keeps going and going.

As Rejzor stated, NV is doing it with brute force. As long as they can, of course, it's fine. Anand should have shown Maxwell with async on/off for comparison.

www.3dmark.com/spy/38286

I need a GPU upgrade - seriously considering 980 Ti, prices are around $400 even for hybrid water-cooled models. And they're available right now, unlike the new cards. If I wait a few months, they'll get even lower...