Thursday, July 14th 2016

Futuremark Releases 3DMark Time Spy DirectX 12 Benchmark

Futuremark released the latest addition to the 3DMark benchmark suite, the new "Time Spy" benchmark and stress-test. All existing 3DMark Basic and Advanced users have limited access to "Time Spy." Existing 3DMark Advanced users have the option of unlocking the full feature-set of "Time Spy" with an upgrade key priced at US $9.99. The price of 3DMark Advanced for new users has been revised from $24.99 to $29.99, as new 3DMark Advanced purchases include the fully-unlocked "Time Spy." Futuremark also announced limited-period offers that run until July 23, in which the "Time Spy" upgrade key for existing 3DMark Advanced users can be had for $4.99, and the 3DMark Advanced Edition (minus "Time Spy") for $9.99.

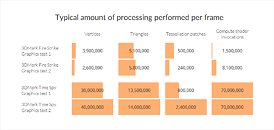

Futuremark 3DMark "Time Spy" has been developed with inputs from AMD, NVIDIA, Intel, and Microsoft, and takes advantage of the new DirectX 12 API. For this reason, the test requires Windows 10. The test almost exponentially increases the 3D processing load over "Fire Strike," by leveraging the low-overhead API features of DirectX 12, to present a graphically intense 3D test-scene that can make any gaming/enthusiast PC of today break a sweat. It can also make use of several beyond-4K display resolutions.

DOWNLOAD: 3DMark with TimeSpy v2.1.2852

91 Comments on Futuremark Releases 3DMark Time Spy DirectX 12 Benchmark

steamcommunity.com/app/223850/discussions/0/366298942110944664/

All of the current games supporting asynchronous compute make use of parallel execution of compute and graphics tasks. 3DMark Time Spy supports concurrent execution. It is not the same asynchronous compute....

So yeah... 3DMark does not use the same type of asynchronous compute found in all of the recent game titles. Instead, 3DMark appears to be specifically tailored to show nVIDIA GPUs in the best light possible. It makes use of context switches (good because Pascal has improved pre-emption) as well as the Dynamic Load Balancing on Maxwell through the use of concurrent rather than parallel asynchronous compute tasks. If parallelism were used, we would see Maxwell taking a performance hit under Time Spy, as admitted by nVIDIA in their GTX 1080 white paper and as we have seen from AotS.

Sources:

www.reddit.com/r/Amd/comments/4t5ckj/apparently_3dmark_doesnt_really_use_any/

www.overclock.net/t/1605674/computerbase-de-doom-vulkan-benchmarked/220#post_25351958

As the user "Mahigan" points out, future games will use asynchronous compute, which allows compute and graphics tasks to execute in parallel. The surprise is that Time Spy's own description says asynchronous compute is used to heavily overlap rendering passes in order to maximize GPU utilization, which is a form of concurrent computing.

Concurrent computing is a form of computing in which several computations execute during overlapping periods of time (concurrently) instead of sequentially (one finishing before the next begins). Obviously, it is not the asynchronous compute that games such as DOOM boast of using to tap the real potential of an AMD Radeon GPU, in this case under the DirectX 12 API, which is what the software runs on. By not using that kind of asynchronous compute, 3DMark appears to be specifically tailored to show the best possible performance on an Nvidia GPU. It uses context switches (a positive for Pascal, with its improved pre-emption) as well as Dynamic Load Balancing on Maxwell, through the use of concurrent rather than parallel asynchronous compute tasks.

AMD's GCN architecture not only can handle these tasks, it improves even further when parallelism is used; DOOM's results under the Vulkan API are proof of that. How? By reducing per-frame latency through the parallel execution of graphics and compute tasks. A reduction in per-frame latency means that every frame needs less time to be executed and processed. The net gain is a higher frame rate, but 3DMark lacks this. If 3DMark Time Spy had implemented both concurrency and parallelism, a Radeon Fury X would have matched the GeForce GTX 1070 in performance (in DOOM, the Fury X not only matches it but beats it).

If both AMD and Nvidia are running the same code, Pascal would either gain a little or even lose performance. This is the reason Bethesda did not enable AMD's asynchronous compute + graphics path for Pascal. Instead, Pascal will get its own optimized path. They will also call it asynchronous compute, leading people to believe it is the same thing when they are actually two completely different things. The same is happening here: not all implementations of asynchronous compute are equal.

You clearly don't understand how a GPU processes a pipeline. Each queue needs to have as few dependencies as possible, otherwise they will just stall, rendering the splitting of queues pointless. Separate queues can do physics (1), particle simulations (2), texture (de)compression, video encoding, data transfer and similar. (1) and (2) are computationally intensive and do utilize the same hardware resources as rendering, and having multiple queues competing for the same resources does introduce overhead and clogging. So if a GPU is going to get a speedup for (1) or (2), it needs to have a significant amount of such resources idling. If a GPU with a certain pipeline is utilized ~98%, and the overhead for splitting some of the "compute" tasks is ~5%, then you'll get a net loss of roughly 3% or more for splitting the queues. This is the reason why most games do, and many games will continue to, disable async shaders for Nvidia hardware. But in cases where there are more resources idling, splitting might help a bit, as shown in the new 3DMark.
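The back-of-the-envelope arithmetic in the comment above can be written out as a tiny sketch. This is only a toy model, not how any driver or benchmark measures things: it assumes the best case, where splitting queues fills every idle resource, and the function name `net_async_gain` and its parameters are made up for illustration.

```python
def net_async_gain(utilization: float, split_overhead: float) -> float:
    """Best-case net change in throughput (as a fraction) from splitting queues.

    Toy model (an assumption, not a measurement): the most that async
    queues can win back is the idle share of the GPU, and splitting the
    work costs a fixed overhead.
    """
    idle = 1.0 - utilization          # resources sitting unused
    return idle - split_overhead      # negative means a net loss


# Figures quoted in the comment: ~98% utilized, ~5% splitting overhead.
print(f"{net_async_gain(0.98, 0.05):+.1%}")   # about -3%

# Hypothetical GPU with more idle resources: splitting can pay off.
print(f"{net_async_gain(0.85, 0.05):+.1%}")   # about +10%
```

Under this model the break-even point is simply where the idle share equals the splitting overhead; real gains would be lower whenever the extra queues cannot use all of the idle hardware.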

Free Market? Since when has the CPU and GPU market been free? Intel have been using monopoly tactics against AMD since the start (and were even forced to pay a small amount in court because of it) and Nvidia is only a little bit better with its GameWorks program. Screwing over its own customers and AMD video cards is "The way it's meant to be payed" by Nvidia.

So, not so much the fault of Futuremark, just the usual cover-up from the green team.

There is a very limited number of games that support async and even fewer that support it for both vendors. Sure, there might be more in the next year or two, but it's irrelevant if you're only going to play 1 or 2 of those, and by the time it'll be relevant, we'll already have at least one or two new generations of GPUs out.

I'm quite sure that there are more people playing Minecraft than those who are playing AotS, therefore it only makes sense for you to base your purchase not on some synthetic benchmarks or games that you'll never play, but based on what best matches your needs.

PS. Based on Minecraft requirements, no one should care about discrete GPUs.

its par for the course..

having said that.. benchmarks like time spy are all about high end.. people that download and run them dont do it to prove how crap their PC is.. which is why its on my gaming desktop and not on my atom powered windows 10 tablet.. he he

trog

0%/50%/100% async, for direct comparisons.