Wednesday, June 28th 2017

AMD Radeon Pro Vega Frontier Edition Unboxed, Benchmarked

A lucky customer has already gotten his hands on one of these coveted, sky-powered AMD graphics cards, and is currently in the process of setting up his system. Given that AMD sent no review samples to any outlet (short Vega Frontier Edition supply ensured as much), there isn't any other real way to get impressions of this graphics card. As such, we'll be borrowing Disqus user #define's posts as a way to cover live pics and performance measurements of this card. Expect this post to be updated as new developments arise.

After some glamour shots of the card were taken (which really are justified by its unique color scheme), #define mentioned the card's build quality. After having installed the driver package (which, as we've covered today, includes both a developer and a gaming path inside the drivers, granting increased performance in both workloads depending on the enabled driver profile), he is now about to conduct some testing on SPECviewperf and 3DMark, with both gaming and non-gaming profiles.

Specs of the system include an Intel Core i7 4790K (apparently at stock 4 GHz), an ASUS Maximus VII Impact motherboard, 16 GB (2x8) of Corsair Vengeance Pro Black DDR3 modules running at 2133 MHz, and a 550 W PSU.

Update 1:

#define has made an update with a screenshot of the card's score in 3DMark's Fire Strike graphics test. The user reported that the Pro drivers' score "didn't make sense", which we assume means those drivers are uncooperative with actual gaming workloads. On the Game Mode driver side, though, #define reports GPU frequencies that are "all over the place". This is probably a result of AMD's announced typical/base clock of 1382 MHz and an up to 1600 MHz peak/boost clock. It is as yet unknown whether these frequencies scale as much with GPU temperature and power constraints as they do on NVIDIA's Pascal architecture, but the fact that #define is using a small case along with the Frontier Edition's blower-style cooler could mean the graphics card is heavily throttling. That would also go some way towards explaining the actual 3DMark score of AMD's latest (non-gaming geared, I must stress) graphics card: a 17,313 point score isn't especially convincing. Other test runs produced scores of 21,202, 21,421, and 22,986. However, do keep in mind these are the launch drivers we're talking about, on a graphics card that isn't officially meant for gaming (at least, not in the sense we are all used to). It is also unclear whether there are some configuration hoops that #define failed to go through.

Update 2:

After fiddling around with Wattman settings, #define managed to run some more benchmarks. Operating frequency should be more stable now, but alas, there still isn't much information regarding frequency stability or the amount of throttling, if any. He reports he had to set Wattman's Power Limit to 30%, however; #define also fiddled with the last three power states in a bid to decrease frequency variability on the card, setting all of them to the 1602 MHz frequency that AMD rated as the peak/boost frequency. Temperature limits were set to their maximum value. The latest results put this non-gaming Vega card in the same ballpark as a GTX 1080.

For those in the comments going on about the Vega Frontier Edition's professional performance, I believe the following results will come as a shock. #define tested the card in SPECviewperf with the Pro drivers enabled, and the results... well, speak for themselves.

#define posted some SPECviewperf 12.1 results from NVIDIA's Quadro P5000 and P6000 on Xeon machines (available in the source as well), and then proceeded to test the Vega Frontier Edition.

So, this is a Frontier Edition Vega, which is neither a professional nor a consumer video card, straddling the line in a prosumer posture of sorts. And as you know, being a jack of all trades usually means that you can't be a master of any of them. Here we have a prosumer video card which costs $999, battling a $2000 P5000 graphics card. Some of its losses are deep, but it still ekes out some wins. So let's look at the value proposition: comparing total results between the Vega Frontier Edition (1014.56 points) and the Quadro P5000 (1192.23 points), we see the Vega card delivering around 85% of the P5000's performance... for 50% of its price. So if you go with NVIDIA's Quadro P5000, you're trading around a 100% increase in purchase cost for a performance increase of around 18%. You tell me if it's worth it. Comparisons to the P6000 are even more ridiculous (though that's usual considering the increase in pricing). The P6000 totals 1338.49 points versus Vega's 1014.56. So a performance increase of around 32% from the Quadro P6000 comes with a price tag increased to... wait for it... $4800, which means that a 32% increase in performance will cost you a 380% increase in dollars.
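For readers who want to check the value arithmetic themselves, here is a minimal Python sketch. It uses only the SPECviewperf totals and prices quoted above; the `cards` table and the `compare` helper are names made up for this illustration, and the rounded percentages in the text are approximations of what it prints.

```python
# Totals and prices exactly as quoted in the article; nothing here is
# measured independently.
cards = {
    "Vega Frontier Edition": {"score": 1014.56, "price": 999},
    "Quadro P5000": {"score": 1192.23, "price": 2000},
    "Quadro P6000": {"score": 1338.49, "price": 4800},
}


def compare(baseline, other):
    """Return (extra performance %, extra cost %) of `other` over `baseline`."""
    perf = (other["score"] / baseline["score"] - 1) * 100
    cost = (other["price"] / baseline["price"] - 1) * 100
    return perf, cost


vega = cards["Vega Frontier Edition"]
for name in ("Quadro P5000", "Quadro P6000"):
    perf, cost = compare(vega, cards[name])
    print(f"{name}: {perf:+.1f}% performance for {cost:+.1f}% price")
```

Keep in mind this compares summed totals only; individual viewsets swing much more widely in either direction, so the per-workload picture can look quite different.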

Update 3:

Next up, #define did some quick testing of the Vega Frontier Edition's actual gaming chops, with the gaming fork of the drivers enabled, on The Witcher 3. Refer to the system specs posted above. He ran the game at 1080p, Über mode, with HairWorks off. At those settings, the Vega Frontier Edition was posting around 115 frames per second in open fields, and around 100 FPS in city environments. Setting the last three power states to 1602 MHz seems to have stabilized clock speeds.

Update 4:

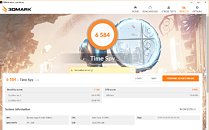

#define has now run 3DMark's Time Spy benchmark, which uses a DX12 render path. Even though frequency stability has improved on the Vega Frontier Edition thanks to the change to the last three power states, the frequency still varies somewhat, though we can't tell how much due to the way data is presented in Wattman. That said, the Frontier Edition Vega manages to achieve 7,126 points in the graphics section of the Time Spy benchmark. This is somewhat in the ballpark of stock GTX 1080s, though it still scores a tad lower than most.

Sources:

#define @ Disqus, Spec.org, Spec.org

200 Comments on AMD Radeon Pro Vega Frontier Edition Unboxed, Benchmarked

another review:

quite a fiasco for amd ...

It's just wrong if this card is judged solely on its gaming performance.

Also, we still don't know if RX Vega comes with HBM or not. If not, the production cost would be significantly reduced and a much cheaper price is quite feasible while maintaining some margin.

The video should be called I don't know how to work my machine because he keeps saying "It's gonna crash, i think its gonna crash!!!" :laugh:

This was a pro benchmarker's move. When he's using the drivers that came with the card, there is a part where he is running a test; he looks at the info display and says "I might have something running in the background? Yeah, I have something running in the background" o_O

My brain is bleeding too for the utter lack of competence of this guy.

When you make a claim like that in a forum, you need to be able to back it up, which you seem to be unable/unwilling to do.

@1:42:00+

It's funny how he says it's thermal throttling 3 seconds after loading W3, and on the screen with Afterburner the temp says 51C.

end of discussion.

So it's a Fury X at 1600Mhz with shittier memory then?

I just call em like I see em. Based on all of the posts I've seen in the last couple months, I see this as a 1600Mhz Fury X, which isn't bad... just not great.

A fury x with polaris uarch would be a lot better than this...that's why this is just a joke.

Before you try to say I'm a fanboy, my best card is a 1080. However, I have two Amd cards and two Nvidia in my four running machines. When the consumer/gaming version of Vega drops I'm either getting one or another 1080ti. I'm not going to sit here bashing without knowing what I'm talking about though like 50% of people here.

www.techpowerup.com/vgabios/193092/193092

Edit:

58 KB is pretty small for an AMD bios though, it must be incomplete.

Support your claims or continue to be grouped with the other clueless muppets blowing smoke.