Wednesday, June 28th 2017

AMD Radeon Pro Vega Frontier Edition Unboxed, Benchmarked

A lucky customer has already gotten his hands on one of these coveted, sky-powered AMD graphics cards, and is currently in the process of setting up his system. AMD hasn't sent review samples to any outlet, a consequence of the short Vega Frontier Edition supply, so there isn't any other real way to get impressions of this graphics card. As such, we'll be borrowing Disqus user #define's posts to cover live pictures and performance measurements of this card. Expect this post to be updated as new developments arise.

After taking some glamour shots of the card (which its unique color scheme really does justify), #define commented on the card's build quality. He has since installed the driver package, which, as we covered today, includes both a developer and a gaming path, each granting increased performance in its respective workload depending on the enabled driver profile. He is now about to run SPECviewperf and 3DMark tests with both the gaming and non-gaming profiles.

The system's specs include:
- an Intel Core i7 4790K (apparently at its stock 4 GHz)
- an ASUS Maximus VII Impact motherboard
- 16 GB (2x8 GB) of Corsair Vengeance Pro Black DDR3 running at 2133 MHz
- a 550 W PSU

Update 1:

#define has posted a screenshot of the card's score in 3DMark's Fire Strike graphics test. The user reported that the score with the Pro drivers "didn't make sense", which we take to mean that they don't cooperate with actual gaming workloads. With the Game Mode drivers, though, #define reports GPU frequencies that are "all over the place". This is probably a result of AMD's announced typical/base clock of 1382 MHz and peak/boost clock of up to 1600 MHz. It isn't yet known whether these frequencies scale with GPU temperature and power constraints as much as they do on NVIDIA's Pascal architecture. However, #define is using a small case, and combined with the Frontier Edition's blower-style cooler, that could mean the graphics card is heavily throttling. That would also go some way towards explaining the 3DMark score of AMD's latest (non-gaming geared, I must stress) graphics card: 17,313 points isn't especially convincing. Other test runs returned 21,202, 21,421, and 22,986 points. However, do keep in mind that these are launch drivers, on a graphics card that isn't officially meant for gaming (at least, not in the sense we are all used to). It is also unclear whether there are some configuration hoops that #define failed to go through.

Update 2:

After fiddling around with Wattman settings, #define managed to run some more benchmarks. Operating frequency should be more stable now, but alas, there still isn't much information on frequency stability or on how much throttling there is, if any. He reports that he had to set Wattman's Power Limit to 30%. #define also tweaked the last three power states to reduce frequency variability on the card, setting all of them to 1602 MHz, which AMD rated as the peak/boost frequency. Temperature limits were set to their maximum value. The latest results put this non-gaming Vega card in roughly the same ballpark as a GTX 1080.

For those in the comments asking about the Vega Frontier Edition's professional performance, I believe the following results will come as a shock. #define tested the card in SPECviewperf with the Pro drivers enabled, and the results... well, speak for themselves.

#define posted some SPECviewperf 12.1 results from NVIDIA's Quadro P5000 and P6000 on Xeon machines (also available at the source), and then tested the Vega Frontier Edition himself.

So, this is a Frontier Edition Vega, which is neither a professional nor a consumer video card. It straddles the line in a prosumer posture of sorts. And as you know, a jack of all trades is usually a master of none. Here we have a prosumer video card that costs $999, battling a $2000 Quadro P5000. Some of its losses are deep, but it still ekes out some wins.

So let's look at the value proposition. The overall scores are 1014.56 points for the Vega Frontier Edition and 1192.23 points for the Quadro P5000. That means the Vega card delivers around 85% of the P5000's performance... for 50% of its price. So if you go with NVIDIA's Quadro P5000, you're paying around 100% more for roughly 18% more performance. You tell me if it's worth it. Comparisons to the P6000 are even more ridiculous (though that's usual considering the increase in pricing). The P6000 scores 1338.49 points versus Vega's 1014.56, a roughly 32% performance increase. It comes with a price tag of... wait for it... $4800, which means that extra performance will cost you 380% more dollars.
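To make the value math easy to check, here is a quick Python sketch that recomputes the ratios from the scores and prices quoted above. The dictionary and function names are just illustrative. Run as written, it puts the Vega FE at roughly 85% of the P5000's score. It also shows the P5000 and P6000 offering about 18% and 32% more performance for roughly 100% and 380% more money.

```python
from typing import Dict, Tuple

# Overall SPECviewperf 12.1 scores and list prices as quoted in the article.
VEGA_FE: Dict[str, float] = {"score": 1014.56, "price": 999}
QUADRO_P5000: Dict[str, float] = {"score": 1192.23, "price": 2000}
QUADRO_P6000: Dict[str, float] = {"score": 1338.49, "price": 4800}


def relative_increase(new: float, base: float) -> float:
    """Percentage by which `new` exceeds `base`."""
    return (new / base - 1.0) * 100.0


def compare(card: Dict[str, float], baseline: Dict[str, float]) -> Tuple[float, float]:
    """Return (% more performance, % more cost) of `card` relative to `baseline`."""
    perf = relative_increase(card["score"], baseline["score"])
    cost = relative_increase(card["price"], baseline["price"])
    return perf, cost


if __name__ == "__main__":
    share = VEGA_FE["score"] / QUADRO_P5000["score"] * 100.0
    print(f"Vega FE delivers {share:.1f}% of the P5000's score")
    for name, card in (("P5000", QUADRO_P5000), ("P6000", QUADRO_P6000)):
        perf, cost = compare(card, VEGA_FE)
        print(f"{name}: +{perf:.1f}% performance for +{cost:.1f}% cost")
```

Expressing both gaps relative to the Vega FE keeps the performance and price percentages on the same baseline. That is what makes "X% more money for Y% more speed" a fair comparison.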

Update 3:

Next up, #define did some quick testing of the Vega Frontier Edition's actual gaming chops on The Witcher 3, with the gaming fork of the drivers enabled (the system specs are listed above). He ran the game at 1080p on the Über preset with HairWorks off. At those settings, the Vega Frontier Edition posted around 115 frames per second in open fields and around 100 FPS in city environments. Setting the last three power states to 1602 MHz seems to have stabilized clock speeds.

Update 4:

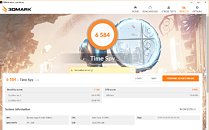

#define has now run 3DMark's Time Spy benchmark, which uses a DX12 render path. Frequency stability on the Vega Frontier Edition has improved thanks to the change to the last three power states. Even so, the frequency still varies somewhat, though we can't tell by how much due to the way Wattman presents its data. That said, the Frontier Edition Vega achieves 7,126 points in the graphics section of the Time Spy benchmark. This is in the ballpark of stock GTX 1080s, though it still scores a tad lower than most.

Sources:

#define @ Disqus, Spec.org, Spec.org

200 Comments on AMD Radeon Pro Vega Frontier Edition Unboxed, Benchmarked

Want to check out the latest similar fan design from AMD? Here is TPU's RX 480 review.

Now add 100W more heat to be transferred off even beefier fin array.

5000 rpm, 2600 rpm etc.

The RX 480 doesn't need to push its fan speed that far, but Vega FE seems to.

The fin array looks longer in the pictures, but the fan itself is what creates most of the noise. The fan also looks different from the RX 480 stock fan in small ways. I'm by no means saying it is silent, but the guy who has one said clearly that it's not noisy. He has a watercooled CPU, so I'm guessing that if it were noisy he would know the difference. 5870s were quiet for their day, but I hated the way mine sounded before the water block went on.

Hype is "this will blow X product out of the water." Realism is "they had a whole year to get this at least to OK levels," and this isn't OK from where I'm sitting. Most users actually care about price/performance, even more so when we're talking about PC parts. But please permit me to doubt that the same chip in a "gaming" version will do a far better job.

Theory crafting: if it turns out to be 5% below or above the Ti, then good. That means the market will have competition, which will force a reaction and push the industry further (note Intel's reaction to Ryzen).

People buy whatever is suited for their needs. If they feel the need for a $2000 GPU/CPU/whatever they will throw money at it.

I don't really care if it's red blue green purple or has polka-dots on it as long as it is incentive for innovation.

My bad on the use of "everyone"; I should've stuck with "the majority".

The number of ...less than spectacular displays of knowledge... posts shows how many people need to do some research before ~~guessing~~ posting. In the meantime, please don't forget that this card is not a gaming card and the drivers could be considered beta-level. :cool:

Makers usually specify the base clock and typical/average boost clock. But this time AMD have chosen to specify typical clock and peak boost clock, probably to get a higher peak throughput number to "beat" the competition. However, if we employ the same method to Nvidia, the rated "performance" of Titan Xp would become 14.2 Tflop/s (assuming 1850 MHz peak clock).
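The arithmetic behind that comment is simple: peak FP32 throughput is the shader count, times two FLOPs per clock (one fused multiply-add), times the clock. The Python sketch below assumes the publicly listed 3840 CUDA cores and 1582 MHz rated boost clock for the Titan Xp, and 4096 stream processors for the Vega FE; the comment itself states none of these figures. It shows how much the choice of clock moves the headline number. The Titan Xp goes from roughly 12.1 to 14.2 TFLOP/s, and the Vega FE goes from about 11.3 at its 1382 MHz typical clock to 13.1 at its 1600 MHz peak.

```python
# Assumptions (not stated in the comment): Titan Xp has 3840 CUDA cores and a
# 1582 MHz rated boost clock; Vega Frontier Edition has 4096 stream processors.
FLOPS_PER_SHADER_PER_CLOCK = 2  # one fused multiply-add counts as two FLOPs


def peak_fp32_tflops(shaders: int, clock_mhz: float) -> float:
    """Theoretical peak single-precision TFLOP/s at the given clock."""
    # shaders * FLOPs/clock * (clock_mhz * 1e6 Hz) / 1e12 simplifies to / 1e6
    return shaders * FLOPS_PER_SHADER_PER_CLOCK * clock_mhz / 1e6


if __name__ == "__main__":
    cases = [
        ("Titan Xp @ rated boost (1582 MHz)", 3840, 1582),
        ("Titan Xp @ assumed peak (1850 MHz)", 3840, 1850),
        ("Vega FE @ typical clock (1382 MHz)", 4096, 1382),
        ("Vega FE @ peak clock (1600 MHz)", 4096, 1600),
    ]
    for label, shaders, clock in cases:
        print(f"{label}: {peak_fp32_tflops(shaders, clock):.1f} TFLOP/s")
```

The formula is identical for both vendors. Only the clock plugged into it differs, which is exactly the comment's point about comparing a "peak" rating against a "typical boost" rating.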

Edit: Seems pretty much spot on. The Vega will also deliver what was promised. The first "prosumer" card, lol. Ever since ATI became AMD, that's all they've seemed to be able to sell--prosumer cards. The architecture always seems so generic and too future-proof. Too open and reliant on people devoting their free time to help "the cause"...

It's not a gaming card, and at $1000 it can't compete with similar offerings.

It's not a workstation card, as it gets beaten by workstation GPUs with much smaller dies...

The only reason I can think of for AMD wasting Vega chips on this abomination (and not on RX) is that the early chips were probably utter crap. Hence the delay between FE and RX Vega.

A jack of all trades, but pretty mediocre at all of them.

Now, if they could hit 2Ghz.... Nvidia seems to have whooped AMD's ass on the way to 2Ghz. Oh well, you can't win ALL of the Ghz battles, AMD.

The slogan should have been "Vega--The Bulldozer of GPUs," the RX Vegas will be "RX Vega--the Piledriver of GPUs," and hopefully by the time Navi 2.0 comes out we will have something better than Ryzen to compare it to.

I hate to hate AMD, but I hope the next revision of Ryzen will offer something other than more cores and a mediocre IPC.

Done ranting for the night. Later guys! :pimp:

And my lord, there are so many terrible, stupid comments filled with BS that I wish I had more faces and palms.

But I hear ya, it's facepalm galore.

Maybe in another couple of generations AMD will catch up, but I'm not holding my breath.

Perhaps the upcoming gaming oriented RX Vega will be better, but I doubt it.

Looking forward to RX Vega in a little over a month and some real reviews. Reference AMD cards tend to be hot and loud, so tack on another month for custom cooling solutions to appear.

I guess you gotta rile up fanboys for attention, though.

Might as well ignore all posts for a couple months.

And yeah, I know, I know next to squat about GPU electronic engineering, yada yada.