Wednesday, June 28th 2017

AMD Radeon Pro Vega Frontier Edition Unboxed, Benchmarked

A lucky customer has already gotten his hands on one of these coveted, sky-powered AMD graphics cards, and is currently in the process of setting up his system. Given that AMD has not provided review samples to any outlet (short Vega Frontier Edition supply ensured as much), there isn't any other real way to get impressions of this graphics card. As such, we'll be borrowing Disqus user #define's posts as a way to cover live pics and performance measurements of this card. Expect this post to be updated as new developments arise.

After some glamour shots of the card were taken (which really are justified by its unique color scheme), #define mentioned the card's build quality. After having installed the driver package (which, as we've covered today, includes both a developer and a gaming path inside the drivers, granting increased performance in both workloads depending on the enabled driver profile), he is now about to conduct some testing on SPECviewperf and 3DMark, with both gaming and non-gaming profiles.

Specs of the system include an Intel Core i7 4790K (apparently at stock 4 GHz), an ASUS Maximus VII Impact motherboard, 16 GB (2x8) of Corsair Vengeance Pro Black DDR3 modules running at 2133 MHz, and a 550 W PSU.

Update 1:

#define has made an update with a screenshot of the card's score in 3DMark's Fire Strike graphics test. The user reported that the Pro drivers' score "didn't make sense", which we assume means they are uncooperative with actual gaming workloads. On the Game Mode driver side, though, #define reports GPU frequencies that are "all over the place". This is probably a result of AMD's announced typical/base clock of 1382 MHz and an up to 1600 MHz peak/boost clock. It is as of yet unknown whether these frequencies scale as much with GPU temperature and power constraints as NVIDIA's Pascal architecture does, but the fact that #define is using a small case along with the Frontier Edition's blower-style cooler could mean the graphics card is heavily throttling. That would also go some way towards explaining the actual 3DMark score of AMD's latest (non-gaming geared, I must stress) graphics card: a 17,313 point score isn't especially convincing. Other test runs resulted in higher scores of 21,202; 21,421; and 22,986. However, do keep in mind these are the launch drivers we're talking about, on a graphics card that isn't officially meant for gaming (at least, not in the sense we are all used to). It is also unclear whether there are some configuration hoops that #define failed to go through.

Update 2:

After fiddling around with Wattman settings, #define managed to do some more benchmarks. Operating frequency should be more stable now, but alas, there still isn't much information regarding frequency stability or the amount of throttling, if any. He reports he had to set Wattman's Power Limit to 30%, however; #define also fiddled with the last three power states in a bid to decrease frequency variability on the card, setting all of them to the 1602 MHz frequency that AMD rated as the peak/boost frequency. Temperature limits were set to their maximum value.

The latest results put this non-gaming Vega card in the same ballpark as a GTX 1080.

For those in the comments going on about the Vega Frontier Edition's professional performance, I believe the following results will come as a shock. #define tested the card in SPECviewperf with the PRO drivers enabled, and the results... well, speak for themselves.

#define posted some SPECviewperf 12.1 results for NVIDIA's Quadro P5000 and P6000 on Xeon machines (available in the sources as well), and then proceeded to test the Vega Frontier Edition.

So, this is a Frontier Edition Vega, which is neither a professional nor a consumer video card, straddling the line in a prosumer posture of sorts. And as you know, being a jack of all trades usually means that you can't be a master of any of them. Here we have a prosumer video card which costs $999, battling a $2000 P5000 graphics card. Some of its losses are deep, but it still ekes out some wins.

So let's look at the value proposition: comparing the results of the Vega Frontier Edition (1014.56 total points) and the Quadro P5000 (1192.23 points), we see the Vega card delivering around 85% of the P5000's performance... for 50% of its price. So if you go with NVIDIA's Quadro P5000, you're trading around a 100% increase in purchase cost for a performance increase of roughly 18%. You tell me if it's worth it. Comparisons to the P6000 are even more ridiculous (though that's usual considering the increase in pricing). The P6000 scores 1338.49 points versus Vega's 1014.56. So a performance increase of roughly 32% from the Quadro P6000 comes with a price tag increased to... wait for it... $4800, which means that a 32% increase in performance will cost you a 380% increase in dollars.
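The price/performance comparison above can be reproduced with a few lines of Python. This is only a minimal sketch: it uses nothing but the composite scores and prices quoted in this article, and the names `CARDS` and `compare` are illustrative, not part of any benchmark tooling.

```python
# Composite SPECviewperf 12.1 scores and prices as quoted in the article.
CARDS = {
    "Vega Frontier Edition": {"score": 1014.56, "price_usd": 999},
    "Quadro P5000": {"score": 1192.23, "price_usd": 2000},
    "Quadro P6000": {"score": 1338.49, "price_usd": 4800},
}


def compare(baseline: str, other: str) -> dict:
    """Describe how `other` compares to `baseline`, in percent."""
    b, o = CARDS[baseline], CARDS[other]
    return {
        # Share of the other card's performance that the baseline delivers.
        "baseline_share_pct": 100 * b["score"] / o["score"],
        # Extra performance bought by moving from baseline to the other card.
        "perf_increase_pct": 100 * (o["score"] / b["score"] - 1),
        # Extra money spent by moving from baseline to the other card.
        "price_increase_pct": 100 * (o["price_usd"] / b["price_usd"] - 1),
    }


if __name__ == "__main__":
    for quadro in ("Quadro P5000", "Quadro P6000"):
        r = compare("Vega Frontier Edition", quadro)
        print(
            f"{quadro}: Vega delivers {r['baseline_share_pct']:.1f}% of its score; "
            f"+{r['perf_increase_pct']:.1f}% performance "
            f"for +{r['price_increase_pct']:.1f}% price"
        )
```

With the quoted figures, this works out to roughly an 18% performance lead for the P5000 at about double the price, and roughly a 32% lead for the P6000 at about 4.8 times the price.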

Update 3:

Next up, #define did some quick testing of the Vega Frontier Edition's actual gaming chops in The Witcher 3, with the gaming fork of the drivers enabled. Refer to the system specs posted above. He ran the game at 1080p, Über mode with HairWorks off. At those settings, the Vega Frontier Edition was posting around 115 frames per second in open fields, and around 100 FPS in city environments. Setting the last three power states to 1602 MHz seems to have stabilized clock speeds.

Update 4:

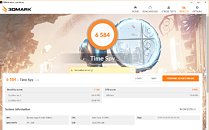

#define has now run 3DMark's Time Spy benchmark, which uses a DX12 render path. Even though frequency stability has improved on the Vega Frontier Edition due to the change to the last three power states, the frequency still varies somewhat, though we can't tell how much due to the way data is presented in Wattman. That said, the Frontier Edition Vega manages to achieve 7,126 points in the graphics section of the Time Spy benchmark. This is somewhat in the ballpark of stock GTX 1080s, though it still scores a tad lower than most.

Sources:

#define @ Disqus, Spec.org, Spec.org

200 Comments on AMD Radeon Pro Vega Frontier Edition Unboxed, Benchmarked

(not that there are many though.....)

Vega scores between 21,000 and 23,000 in GPU score. My two GTX 970s in SLI score 21,539 at stock and 25,274 at maximum overclock in GPU score, and a GTX 1080 Ti scores between 26,000 and 29,000. I am so far not impressed by Vega. It might be missing driver optimisation.

And if we go to the numbers, that isn't gonna help. TDP at 300 or 375 watts and price at 999 US or 1500 US, compared to the GTX 1080 Ti's TDP of 250 watts and a price from 700 US. The only advantage I see so far for Vega is that it has more VRAM. There may be other features I am not aware of; if yes, please tell me.

Well, in other words, Vega is not gonna change my mind about a GPU upgrade. I will still choose the GTX 1080 Ti.

By the way here is my system fire strike scores to compare.

every thing runs stock here.

www.3dmark.com/3dm/17979523?

This is the oc i run CPU and GPU at for every day use.

www.3dmark.com/3dm/17980205?

And then i torture my system to its knees.

www.3dmark.com/3dm/18112484?

Lets just say i expected more from vega than this so far. When thinking on price and the rated tdp.

Links would be awesome...I'm not going through another thread of 'take hugh at his word when others posted supporting links' again...

...lets see it bud. :)

Will buy the uber-super-GTi-turbo-injection-PowPow edition, whenever it's out.

(and i'll still feel bad, because Ryzen deserved my money too. Show it with your wallet, or soon enough there won't be anything for you to show anymore and we'll all be buying 4core Intels for $500 a pop. That's my very simple philosophy)

1. No driver update in this world would boost it by 30-35% (where the hype-train showed it would land).

2. Prosumer card or not it's marketed with a "GAMING MODE". Last time I checked the Titan didn't perform worse than the "gaming" version although the Titan is clearly aimed at gaming (no pro driver support) but it also has some deep-learning usage where it is 20-25% faster than a 1080 Ti.

3. The price is outrageous for what it can do (don't get me wrong the Titan is a rip-off as well) in both gaming and/or pro use

4. AMD did state they won't be supplying the card for reviewers. Yet they were the ones that made a comparison vs a Titan in the first place (mind you in pro usage with the Titan on regular drivers - Thanks nVidia for that).

5. No sane person would pick this vs a Quadro or hell even a WX variant for pro usage.

6. AMD dropped the ball when they showed a benchmark vs a Titan (doesn't even matter what was the object of the bench itself). nVidia being them never showed a slide where the competition is mentioned they only compare the new gen vs the current gen (tones down the hype).

7. Apart from the 700 series every Titan delivered what was promised(and that's cuz they didn't use the full chip in the Titan).

Long story short rushed, unpolished release. Jack all support. Denying the reviewers a sample. AMD is trying to do what nVidia does with the Titan but it's clearly not in the position to do so. In other words AMD just pulled another classic "AMD cock-up". Again this is my POV, had high hopes for this ... but then again I should've known better...

+1

@mrthanhnguyen I was never aboard any hype train, that's for children and dysfunctionals. I value performance in one kind of rig, price/performance in another, usage depending. I spend my money accordingly and feel good while at it (supporting the "better".. other.. smaller team). The rest is you people exaggerating and/or putting way too much weight in a simple product that end of the day?

Won't change your life either way, sorry :)

Many of the games without API stated can run in dx12 mode it seems.

www.tomsitpro.com/articles/nvidia-quadro-m6000,2-898-2.html

There are a LOT of hardware features that AMD may be giving away now to take the compute market back from Nvidia, so again, gaming means shit on a Pro card. Giving medium sized businesses better performance for their money is where AMD can make up ground they lost to compute.

Also, what end is nigh?

Expect RX Vega to be half Vega FE's price or so for both air and water. All the improvements over the next month or so will make it a 1080-priced, just about 1080 Ti competitor with a lot of potential for future optimized DX11, DX12 and Vulkan games. DiRT 4 will love Vega hopefully!

You were asking for pro test results, as follows (SPECPerfview12_1_1):

Now proper shock. Finished SPECPerfview12_1_1 test on VEGA FE and is almost on par with Quadro P6000 and P5000 with the same settings. Biggest difference is that VEGA FE price was £977 and is running in my PC and both Quadro benchmark results are taken from spec.org as follows:

P6000

www.spec.org/gwpg/g...

P5000

www.spec.org/gwpg/g...

...and of course both are in workstations with Xeon CPU's, then no compare to my PC.

VEGA is perfectly prepared for PRO, it behaves completely different no issues at all, it is quiet.. and you get so MUCH performance per dollar is just amazing (compare to Quadro cards - P5000 -£2000, P6000 - £4000 to £5000).

Please look at the screen shots as follows:

1. Vega results - all benchmark frame rates and my platform

2. Vega results - rest of the settings from my platform

3. SPECPerfView12_1_1 - initial settings, as per spec.org I've run it with defaults to make sure that it will match uploaded results on their websites

4. All the numbers on the same image and as you can see most of the time VEGA is on par with those two (again not in workstation but standard PC)."

$1000 card performing as well as $5000 green card, and its quiet........ not throttling.... hmmm. Anything else you wanna bitch about?

On top of that, you have zero, absolute no reason to even think that the gaming-oriented version will cost more than Nvidia's; don't know where you saw that 'extra', but it goes with what i was saying before. We need to approach this more.. reasonably. Feels like you've added some hyperbole to the equation, then used it to conclude you're right to be disappointed.

Now in terms of the hype.. why did you allow any hype to influence you in the first place? Are we not in the 21st century? Who is it that has yet to learn how marketing is done or, even better, how better it is to let the customers do the marketing for the company? You've only yourself to blame if you fell for that, and that's the truth.

As to the latter part i quote above; NO. That's you thinking like that; skewed perceptions, a market gone haywire because that's how they prefer you to think. Do all of you here drive Porsches? Live in $1000000 mansions? No. If you get a second toddler and are in need of a new house, what you gonna do? Buy the mansion or go under the river?

Whom is it that said we must want the 'bestest'?

And by the way, because 99% i know the reply to this last bit.. if let's say it's 5% slower than the 1080Ti.. is that 'worstest'? 'Fail'? How exactly could you measure anything when all you go by is 'bestest'? Again, rather.. skewed criteria there.

*same goes for price-performance ratio.. who told you that's what everyone wants? Some have money to burn and do so just because, some purchase due to an emotional drive, others due to past impressions/familiarity, etc etc.. this "everyone" is just dead wrong. Keep it limited to what -you- want. And why it is you allowed yourself to form an opinion before it was even out (hype).

Take it or leave it, am not defending them. Am only showing you how this is very, very problematic; and most folks don't even see it no more.

In any case I haven't yet seen a need for DX12 in any game I have that has it optional. DX11 is working just fine for me...nothing has taxed my system unrealistically yet where DX12 might even be necessary to aid performance.

@I No AMD hasn't hyped this. The legion of rabid AMD fanatic fanboys has. I don't know why you sound so disappointed. Wait til RX Vega releases before being happy or disappointed.