Wednesday, June 28th 2017

AMD Radeon Pro Vega Frontier Edition Unboxed, Benchmarked

A lucky customer has already gotten his hands on one of these coveted, sky-powered AMD graphics cards, and is currently in the process of setting up his system. Since AMD has not sent review samples to any outlet (a short Vega Frontier Edition supply ensured as much), there isn't any other real way to get impressions of this graphics card. As such, we'll be borrowing Disqus user #define's posts as a way to cover live pics and performance measurements of this card. Expect this post to be updated as new developments arise.

After taking some glamour shots of the card (which its unique color scheme really does justify), #define commented on the card's build quality. He has installed the driver package (which, as we covered today, includes both a developer and a gaming path, granting increased performance in either workload depending on the enabled driver profile), and is now about to run some tests in SPECviewperf and 3DMark with both the gaming and non-gaming profiles.

The system specs include an Intel Core i7 4790K (apparently at its stock 4 GHz), an ASUS Maximus VII Impact motherboard, 16 GB (2x8 GB) of Corsair Vengeance Pro Black DDR3 running at 2133 MHz, and a 550 W PSU.

Update 1:

#define has posted a screenshot of the card's score in 3DMark's Fire Strike graphics test. He reported that the score with the Pro drivers "didn't make sense", which we assume means those drivers don't cooperate with actual gaming workloads. On the Game Mode driver side, though, #define reports GPU frequencies that are "all over the place". This is probably a result of AMD's announced typical/base clock of 1382 MHz and peak/boost clock of up to 1600 MHz. It is as yet unknown whether these frequencies scale with GPU temperature and power constraints as much as they do on NVIDIA's Pascal architecture, but the fact that #define is using a small case along with the Frontier Edition's blower-style cooler could mean the graphics card is heavily throttling. That would also go some way towards explaining the actual 3DMark score of AMD's latest (non-gaming geared, I must stress) graphics card: a 17,313-point score isn't especially convincing. Other test runs produced scores of 21,202, 21,421, and 22,986. However, do keep in mind that these are launch drivers, on a graphics card that isn't officially meant for gaming (at least, not in the sense we are all used to).
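To put a number on how much these runs varied, here is a quick Python sketch. It assumes (the source does not say) that all four scores come from the same Fire Strike graphics test on the same system, so the gap between them reflects run-to-run variation such as fluctuating clocks rather than different settings. The variable names are our own.

```python
from statistics import mean, pstdev

# Fire Strike graphics scores reported by #define.
# ASSUMPTION: same test, same system, only the run differs.
runs = [17_313, 21_202, 21_421, 22_986]

best, worst = max(runs), min(runs)
spread_pct = 100 * (best - worst) / best   # how far the worst run fell below the best run
cv_pct = 100 * pstdev(runs) / mean(runs)   # coefficient of variation across all runs

print(f"best {best}, worst {worst}: worst run is {spread_pct:.1f}% below best")
print(f"mean {mean(runs):.0f}, coefficient of variation {cv_pct:.1f}%")
```

A large spread on an otherwise unchanged setup is consistent with unstable clocks, though four runs are far too few to pin down the cause.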
It is also unclear whether #define skipped some configuration hoops.

Update 2:

After fiddling with Wattman settings, #define managed to run some more benchmarks. Operating frequency should be more stable now, but alas, there still isn't much information on frequency stability or the amount of throttling, if any. He reports he had to set Wattman's Power Limit to 30%; #define also tweaked the last three power states in a bid to reduce frequency variability on the card, setting all of them to 1602 MHz, the frequency AMD rated as the peak/boost clock. Temperature limits were set to their maximum value.

The latest results put this non-gaming Vega card in the same ballpark as a GTX 1080.

For those in the comments asking about the Vega Frontier Edition's professional performance, I believe the following results will come as a shock. #define tested the card in SPECviewperf with the Pro drivers enabled, and the results... well, speak for themselves.

#define posted some SPECviewperf 12.1 results for NVIDIA's Quadro P5000 and P6000 on Xeon machines (also in the source):

He then proceeded to test the Vega Frontier Edition, which gave us the following results:

So, this is a Frontier Edition Vega, which is neither a professional nor a consumer video card, straddling the line in a prosumer posture of sorts. And as you know, being a jack of all trades usually means you can't be a master of any of them. Here we have a prosumer video card that costs $999 battling a $2000 Quadro P5000. Some of its losses are deep, but it still ekes out some wins. So let's look at the value proposition: averaging results, the Vega Frontier Edition (1014.56 total points) delivers around 85% of the Quadro P5000's performance (1192.23 points)... for 50% of its price. So if you go with NVIDIA's Quadro P5000, you're trading a roughly 100% increase in purchase cost for a roughly 18% performance increase. You tell me if it's worth it. Comparisons to the P6000 are even more ridiculous (though that's to be expected given its pricing). The P6000 averages 1338.49 points versus Vega's 1014.56, a performance increase of around 32% that comes with a price tag increased to... wait for it... $4800, which means a 32% increase in performance will cost you a 380% increase in dollars.
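The value-proposition arithmetic can be checked with a few lines of Python. This is a minimal sketch that uses only the averaged SPECviewperf 12.1 totals and the prices quoted in this article; the dictionary layout and the `compare` helper are our own illustration, and it treats the averaged totals as directly comparable, which is the same assumption the averaging above makes.

```python
# Averaged SPECviewperf 12.1 totals and prices quoted in the article.
cards = {
    "Radeon Vega FE": {"score": 1014.56, "price_usd": 999},
    "Quadro P5000": {"score": 1192.23, "price_usd": 2000},
    "Quadro P6000": {"score": 1338.49, "price_usd": 4800},
}


def compare(base: str, other: str) -> dict:
    """Relative performance and price of `other` versus `base`, all in percent."""
    b, o = cards[base], cards[other]
    return {
        # base card's score as a share of the other card's score
        "base_perf_share": 100 * b["score"] / o["score"],
        # how much faster the other card is than the base card
        "perf_increase": 100 * (o["score"] / b["score"] - 1),
        # how much more the other card costs than the base card
        "price_increase": 100 * (o["price_usd"] / b["price_usd"] - 1),
    }


for quadro in ("Quadro P5000", "Quadro P6000"):
    r = compare("Radeon Vega FE", quadro)
    print(f"{quadro}: Vega FE reaches {r['base_perf_share']:.1f}% of its score; "
          f"{quadro} is {r['perf_increase']:.1f}% faster for "
          f"{r['price_increase']:.0f}% more money")
```

Note that "X% of the performance" and "Y% faster" are different ratios: the first divides by the Quadro's score, the second by the Vega's, so X and Y do not simply add up to 100.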

Update 3:

Next up, #define did some quick testing of the Vega Frontier Edition's actual gaming chops in The Witcher 3, with the gaming fork of the drivers enabled (refer to the system specs posted above). He ran the game at 1080p on Über settings with HairWorks off. At those settings, the Vega Frontier Edition posted around 115 frames per second in open fields and around 100 FPS in city environments. Setting the last three power states to 1602 MHz seems to have stabilized clock speeds.

Update 4:

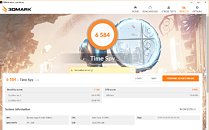

#define has now run 3DMark's Time Spy benchmark, which uses a DX12 render path. Even though frequency stability on the Vega Frontier Edition has improved thanks to the change to the last three power states, the frequency still varies somewhat, though we can't tell by how much due to the way Wattman presents the data. That said, the Frontier Edition Vega manages 7,126 points in the graphics section of the Time Spy benchmark. This is in the ballpark of a stock GTX 1080, though it still scores a tad lower than most.

Sources:

#define @ Disqus, Spec.org, Spec.org

200 Comments on AMD Radeon Pro Vega Frontier Edition Unboxed, Benchmarked

Sorry, I had to.

Gimme a break. The retardation of commenters (have you seen vidcardz and wcctardtech) is only rivaled by the people posting this shit show for clicks.

Fanboys: No no no, this is a PROSUMER card. It is NOT for gaming.

People try to bench VEGA FE against other workstation cards:

Fanboys: No no no, this is a PROSUMER card, you cannot compare it to Quadro, UNFAIR blah blah blah.

People: So can we bench this AGAINST ANYTHING?

Fanboys: I guess NO?

Raja@RTG: YES, this is EXACTLY why we push VEGA as a "prosumer" card. The fanboys will defend us like mad men.

In reality, Vega FE is an abomination. It is neither for gaming nor for content creation. Or it is both for gaming and content creation.

This is some excellent (shitty) marketing right there. Well played Raja, well played RTG.

In Vulkan and well-optimized DX12 applications, I imagine that will become reality.

Also new sauce:

1) Forced application paths, you know, forcing true or HDR color on everything, forcing redundancy checks on everything to make sure rendered calculations are correct.

2) Immature drivers, and drivers specifically not meant for gaming performance that probably have hooks for other applications to read and/or dump data about render stages.

3) Looking at the card they have probably tuned the profile to keep temps low and fan speed low as it is essentially a workstation card.

4) Not being a gaming card.

I wonder if people who buy Prius cars are surprised they don't go as well as a Tesla, 'cause they are both electric, have four wheels and a steering wheel, have windows that roll up and down, seat belts, and rear seats....

What a great and helpful post. Did you write it all by yourself? Do you understand what the card is for? Do you understand Vega FE is meant for midsized businesses to do things like oil exploration and other modeling? For game companies to use when rendering scenes and dumping data to see where and if any bottlenecks occur?

Probably not. Now run along while the adults talk.

VEGA FE and RX VEGA use exactly the same GPU. Do your lovely Prius and Tesla use the exact same engine? I think not.

And yeah, if reasoning fails you resort to another good old tactic: personal attack. How mature of you.

Things like 16GB of HBM2, HBCC (which allows for a 512 TB virtual address space!), rapid packed math, and the rest of the bells and whistles could make this incredible at certain workloads.

I wouldn't be surprised if there were some professional apps that got massive boosts from Vega, and like everyone says we need real game benchmarks (and a stable boost clock) to see how this does in gaming.

Where and how do you know the Vega FE and RX have the same GPU?

The only test to come out of this is from someone who said "i don't know WattMan or these settings, I'm sorry" and the last test was at 1080 levels, on a non-gaming card.

The only personal part was a response to calling anyone who says to wait, and that this is a prosumer card, a fanboi, so pot and kettle, mate. Based solely on one person, with no knowledge of the product, in a system that is unknown and highly unlikely to be reproducible..... it's a bit unfair to call the game at this point.

Doesn't matter though: this guy clearly is a noob, and 3DMark is a waste of energy. Real games and professional apps are what matter.

If the PSU is not supplying enough power, the computer will crash, not get low scores in firestrike.

OMG.

Also, Can you game on Nvidia Quadro / AMD Pro cards?

Yes, yes you can. And performance is about the same as their gaming counterparts.

So I see no reason not to use VEGA FE to indicate RX VEGA gaming performance.

Fire Strike is always going to favour Pascal, simply because it's DX11. Vega is designed for DX12 and mainly Vulkan. Then again, DiRT 4, which I believe is a DX11 game, performs really well on an RX 580, so with the right optimizations Vega should run well in any game. Just one problem: not many game devs can be bothered to optimize their games, especially for AMD! At least it won't be as bad as Ryzen 7 at launch. Some games have nearly doubled 1080p framerates!

Can we at least wait until we get proper reviews and at least one gaming driver update? That way we'll know AMD has spent time on the gaming drivers and the reviewer actually has a clue what on earth he's doing. GTX 1080-level performance in Fire Strike is great, but I expect at least 10% better from Vega FE, and much closer to the 1080 Ti from RX Vega with driver optimizations, increased clock speeds, etc.

Yes, this is not a pure gaming card.

Most games are DX11, really. DX12 is making headway, but I wouldn't call it saturated with 20 games...

en.m.wikipedia.org/wiki/List_of_games_with_DirectX_12_support