Monday, February 19th 2018

Intel Unveils Discrete GPU Prototype Development

Intel is making progress on a new discrete GPU architecture, following its failed "Larrabee" attempt, which ended up as an HPC accelerator, and much older attempts such as the i740. This comes in the wake of the company's high-profile hiring of Raja Koduri, AMD's former Radeon Technologies Group (RTG) head. The company unveiled slides pointing to the direction in which its GPU development is headed at the IEEE International Solid-State Circuits Conference (ISSCC) in San Francisco. That direction is essentially scaling up its existing iGPU architecture and bolstering it with mechanisms to better sustain high clock speeds.

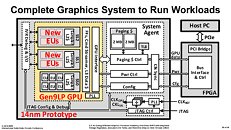

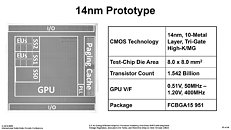

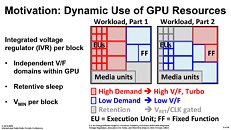

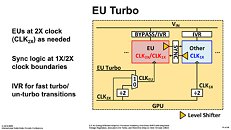

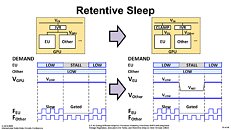

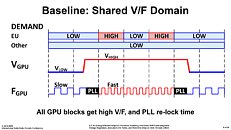

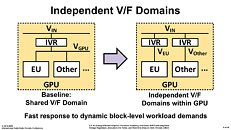

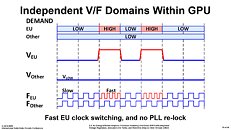

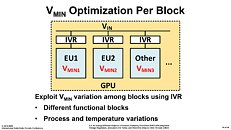

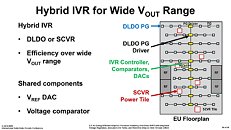

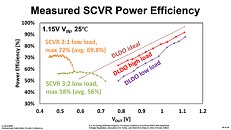

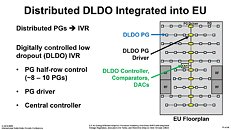

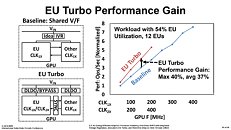

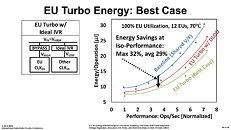

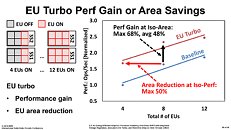

The company's first 14 nm dGPU prototype, shown as a test chip at the ISSCC, is a 2-chip solution. The first chip contains two key components: the GPU itself and a system agent. The second chip is an FPGA that interfaces with the system bus. The GPU component, as it stands now, is based on Intel's Gen 9 architecture and features three execution unit (EU) clusters. Don't derive numbers from this yet, as Intel is only trying to demonstrate a proof of concept. The three clusters are wired to a sophisticated power/clock management mechanism that efficiently manages the power and clock speed of each individual EU. There's also a double-clock mechanism that doubles clock speeds (of the boost state) beyond what today's Gen 9 EUs can handle on Intel iGPUs. Once a suitable level of energy efficiency is achieved, Intel will use newer generations of EUs, and scale up EU counts by taking advantage of newer fab processes, to develop bigger discrete GPUs. More slides follow.

Source: PC Watch


65 Comments on Intel Unveils Discrete GPU Prototype Development

Kappa

ARM, Imagination Technologies, Qualcomm and Vivante all make GPUs, as well as, technically, S3/VIA...

I most likely missed some companies as well, but the problem is that none of them can keep up with Nvidia, given the amount of money Nvidia is throwing at R&D for new graphics architectures.

Even Intel isn't going to be able to catch up any time soon. At best I'd expect something mid-level for the first 2-3 years, as making GPUs is expensive, resource-intensive and time-consuming.

Good luck with that!

If Intel manages to do just that once again, customers win.

PS: With Intel having bought Altera in the past, I wonder whether their first GPUs will target gamers or miners.

In businesses, small brands and corporations, general-purpose computers don't need an external GPU to function. An IGP is more than enough to handle 2D work and some video acceleration. These days, more and more tasks are being shifted to the GPU.

The shop-bought ones are four or five years out, imho, and certainly won't be a dual-chip solution; maybe MCM, though.

Because that FPGA could possibly be a game changer if used to pump the GPU's core performance intelligently and/or to directly drive a new API for mainstream use-case acceleration. Intel already does FPGAs in servers, so they know it's got legs.

They made attempts on AMD boards, for example, to put a separate memory module on the board itself, which served as the memory for the IGP. This was a lot faster than the shared RAM solution.

But as long as we stick to relatively slow DDR on PCs, any IGP will lack bandwidth and thus never offer serious performance compared to a dedicated card.

1) Not quite as strong as Nvidia/AMD's halo products

2) Not quite as good price/perf as AMD's midrange

3) But industry-leading perf/watt.

For the time, the 10-25w IvyBridge-Broadwell had absolutely incredible perf/watt. But they just couldn't scale them up efficiently past even ~50w...