Wednesday, August 22nd 2018

NVIDIA Releases First Internal Performance Benchmarks for RTX 2080 Graphics Card

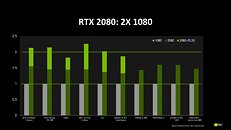

NVIDIA today released its first official performance numbers for its new generation of GeForce products, in particular the RTX 2080. According to the company, the RTX 20 series of graphics cards offers roughly 50% higher performance on average from architectural improvements alone, on a per-core basis. This number is then built upon with the added RTX performance of the new RT cores, which lets the RTX 2080 extend its performance advantage over the last-generation GTX 1080 to as much as 2x when using the new DLSS technology. PUBG, Shadow of the Tomb Raider, and Final Fantasy XV are seeing performance improvements of around 75 percent or more when using this tech.

NVIDIA is also touting the newfound ability to run games at 4K resolution at over 60 FPS, making the RTX 2080 the card to get if that's your preferred resolution (especially if paired with one of those dazzling OLED TVs...). Of course, image quality (IQ) settings aren't revealed in the slides, so an important piece of the puzzle is still missing. But considering NVIDIA's performance claims, and comparing them with the performance achievable on last-generation hardware, it's fair to say that these FPS scores refer to the high or highest IQ settings for each game.

107 Comments on NVIDIA Releases First Internal Performance Benchmarks for RTX 2080 Graphics Card

Either way, I'll stick with the first word of my post: unimpressed...

GTX 1080 8227.8 GFLOPS vs RTX 2080 8920.3 GFLOPS at base clocks.
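For anyone wondering where those two figures come from, they look like the usual theoretical FP32 estimate: shader cores × 2 FLOPs per clock (one fused multiply-add) × base clock. A minimal sketch, assuming the published reference specs, which are not stated in the post above (GTX 1080: 2560 CUDA cores at 1607 MHz base; RTX 2080: 2944 CUDA cores at 1515 MHz base). The function name is made up for illustration.

```python
def theoretical_gflops(cuda_cores: int, base_clock_mhz: float) -> float:
    """Peak FP32 throughput in GFLOPS, counting one FMA as 2 FLOPs per core per clock."""
    flops_per_core_per_clock = 2  # a fused multiply-add counts as two operations
    return cuda_cores * flops_per_core_per_clock * base_clock_mhz / 1000.0


# Assumed reference specs (not given in the thread): core count, base clock in MHz
gtx_1080 = theoretical_gflops(2560, 1607)
rtx_2080 = theoretical_gflops(2944, 1515)

print(f"GTX 1080: {gtx_1080:.1f} GFLOPS")  # 8227.8
print(f"RTX 2080: {rtx_2080:.1f} GFLOPS")  # 8920.3
print(f"Uplift:   {(rtx_2080 / gtx_1080 - 1) * 100:.1f}%")  # 8.4%
```

On paper that is only about an 8% difference at base clocks, which says nothing about boost behaviour, architectural changes, or real game performance.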

Joking aside, it's a new AA technique that uses those shiny new tensor cores to create a better image; how it pans out in practice remains to be seen.

www.guru3d.com/news-story/nvidia-releases-performance-metrics-and-some-info-on-dlss.html

Cherry-picked titles with the shiny DLSS bar on top of the actual dark green bar to divert public attention... 19% higher TDP and 40 - 50% higher MSRP for an average 30% (dark green bar only) performance increase; not worth it.

As far as I understand it (very basic), DLSS (a type of AA) involves heavy matrix operations, and these tensor cores are specialised in making them as fast as possible.
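Taking the value complaint quoted above at face value (roughly 30% more performance for 40 to 50% more money), the performance per dollar relative to the previous card can be sketched like this. The inputs are the commenter's own estimates, not measured data, and the function name is hypothetical.

```python
def relative_perf_per_dollar(perf_gain: float, price_increase: float) -> float:
    """Performance per dollar of the new card relative to the old one (1.0 = same value).

    Both arguments are fractional increases, e.g. 0.30 for +30%.
    """
    return (1 + perf_gain) / (1 + price_increase)


# Commenter's figures: +30% performance, +40% to +50% MSRP
best_case = relative_perf_per_dollar(0.30, 0.40)
worst_case = relative_perf_per_dollar(0.30, 0.50)

print(f"+40% price: {best_case:.2f}x perf per dollar")   # 0.93x
print(f"+50% price: {worst_case:.2f}x perf per dollar")  # 0.87x
```

By those numbers the new card would deliver about 7 to 13% less performance per dollar than the old one, before DLSS is taken into account.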

Those tensor cores are deep learning AI processors, and they try to predict the ideal smooth image for each frame using their knowledge and algorithm (probably set up by the devs of the game). So it's like they're trying to fill in the pixels and eliminate jaggies based on what they understand a smooth image to look like.

I have no doubt it'll be better, since I doubt the performance regressed, but these "internal benchmarks" are all fishy to me.

So, the benchmarks were run at probably a very high/expensive level of AA + 4K, something you wouldn't normally ask a 1080FE to do ...

there wouldn't have been so much negativity/uncertainty then...

Nvidia Ampere is 7nm (3000 Series) Second Generation Ray Tracing.

Vs Intel Arctic Sound

Vs AMD Navi

Unfortunately, no competition in 2018 and 2019.

Vega 20 at best won't match 2080 Ti performance by any means.

Nvidia has a market monopoly till 2020.