Thursday, August 30th 2018

Intel Explains Key Difference Between "Coffee Lake" and "Whiskey Lake"

Intel's "Whiskey Lake" CPU microarchitecture recently made its debut with "Whiskey Lake-U," an SoC designed for Ultrabooks and 2-in-1 laptops. Since it's the 4th refinement of Intel's 2015 "Skylake" architecture, we wondered what sets a "Whiskey Lake" core apart from "Coffee Lake." The silicon fabrication node seemed like the first place to look, given rumors of a "14 nm+++" node for this architecture, which would help it better feed up to 8 cores in a compact LGA115x MSDT environment. It turns out the process hasn't changed: "Whiskey Lake" is built on the same 14 nm++ node as "Coffee Lake."

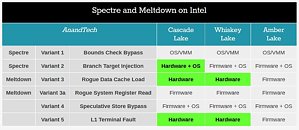

In a statement to AnandTech, Intel explained that the key difference between "Whiskey Lake" and "Coffee Lake" is silicon-level hardening against "Meltdown" variants 3 and 5. This isn't a software-level mitigation delivered through patched microcode, but a hardware fix, which reduces the performance impact of the mitigation. "Cascade Lake" will pack the most important hardware-level fixes, including "Spectre" variant 2 (aka branch target injection). Software-level fixes reduce performance by 3-10 percent, depending on the workload, while a hardware-level fix is expected to impact performance "a lot less."

Source:

AnandTech

32 Comments on Intel Explains Key Difference Between "Coffee Lake" and "Whiskey Lake"

In addition, given the current competitive market situation, it's rather unlikely that they would risk postponing a product (which is exactly what they'd be risking in such a case) by fabbing a completely new mask (which always carries the risk that something goes wrong). So a complete, or at least comprehensive, redesign of the core itself? In that time frame?! Something seems fishy here.

If they actually managed to pull off a stunt like that (Foreshadow was revealed just in January '18!), they should also have been able to mitigate Meltdown (via µCode) or eliminate it completely, and to address some parts of Spectre in hardware, by January already. They did neither. So either they're lying this time, or they were lying (and incredibly lazy) back in January (and for half a year before that) …

www.phoronix.com/scan.php?page=article&item=linux-419-mitigations

And now that they know that we did in fact know about what they thought we didn't know, they have to come up with some lame explanation to try to explain why, and how they are gonna fix it, and try to make themselves look good in the process....

Bottom line: C.Y.A. all the time, every time, or die tryin :D

And for vulnerabilities with minor performance impact, they can fix them quite fast.

"When fixed in software, Intel expects a 3-10% drop in performance depending on the workload – when fixed in hardware, Intel says that performance drop is a lot less, but expects new platforms (like Cascade Lake) to offer better overall performance anyway.":eek:

Wake me in 10 years when it's over... :shadedshu:

So why do multiple CPU makers make the same fundamental mistakes? It doesn't mean they steal from each other, but the following are major factors:

- Engineers think alike - Given similar training and experience, engineers tend to find similar solutions to the same problem. To make matters worse, I would claim ~90% of engineers overestimate their own rationality and want to be "the smartest guy in the room" by rushing to conclusions, rather than doing the actual research.

- Switching jobs - The semiconductor world is very small (compared to e.g. the software world). Most of the people in Intel's and AMD's CPU teams have worked with one or more competitors in the past. Even though they don't steal IP, they do still bring experience and ideas, both the good and the bad.

- Knowledge sharing - There are conferences, academic research projects, and even voluntary sharing between companies in the industry. If one party shares a flawed idea, others might not challenge that.

These companies have some of the brightest engineers in the world, yet they manage to produce products year after year with the same design flaws. So why didn't any of them find these flaws? Chances are that several of them either knew about the potential for them or saw symptoms of these bugs during development. But the structure of departments, development teams and management can make it very hard to communicate the right information. Design choices, schedules, etc. are usually decided from the top down, while anyone finding such bugs will be at the very bottom, having to convince every superior of the flaw's severity. I've experienced this first-hand several times in software development. At a former employer, I once found a serious defect in a core library, but other team members dismissed it despite a proof of concept, because "the code had been used for years without any 'problems', so the new guy must be mistaken…". This code might still be used in equipment worth $B…

I don't make semiconductors; I work in a plastics plant, but even I see "defects" passed on as good product all the time. These are minor defects that nobody would ever find, or give a shit about if they did, but still, it's not "perfect" like it should be. And if you ask the production manager, he'll try to save as much as he possibly can, telling you to pass stuff you've been trained to know is bad.

Two vastly different products, but it's still in the world of manufacturing, where time literally is money. These guys have product to push out the door, so it wouldn't surprise me if someone knew, but thought it wasn't worth fixing, for one or more reasons... but now that it's been brought to light, they can't hide behind "security through obscurity", even if it would be hard to pull off, so now it has to be fixed.

Or maybe it really did slip by them for 20+ years... :p

But it's worth mentioning that Computer Science as a field is much more deceptive than most people realize. The fundamentals are of course completely logical at the lowest level, but humans' ability to comprehend it all is very limited. In CS it's possible to design and build a completely broken product that looks fine on the surface, to a much larger extent than with e.g. a bridge, a giant ship or a space rocket. And design flaws in CS can be much harder to spot without a comprehensive investigation. It shouldn't surprise anyone that when Intel or AMD releases a new CPU, it usually has around 20-30 known defects in its errata. Some of these are mitigated in firmware and could be really serious security issues, but we usually never find out. The difference with Spectre and Meltdown is that they were found externally, and that they were present in multiple consecutive CPU designs. This doesn't mean they are anywhere near the most serious flaws ever shipped in a CPU. And when it comes to software, it's much worse. This comic strip is pretty spot-on when it comes to describing the state of the field.

What's next, Meth River? "For a complete Fix!"

Well there's AMD, in case you forgot?

Yet some of the flaws found early in the year are already fixed in hardware for WHL. How much lead time did you think Intel had for L1TF?

If you ask me, these look A LOT more like an honest design error.