Sunday, December 30th 2018

NVIDIA GeForce RTX 2060 Founders Edition Pictured, Tested

Here are some of the first pictures of NVIDIA's upcoming GeForce RTX 2060 Founders Edition graphics card. You'll know from our older report that there could be as many as six variants of the RTX 2060 based on memory size and type. The Founders Edition is based on the top-spec one with 6 GB of GDDR6 memory. The card looks similar in design to the RTX 2070 Founders Edition, which is probably because NVIDIA is reusing the reference-design PCB and cooling solution, minus two of the eight memory chips. The card continues to pull power from a single 8-pin PCIe power connector.

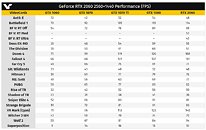

According to VideoCardz, NVIDIA could launch the RTX 2060 on the 15th of January, 2019. It could get an earlier unveiling by CEO Jen-Hsun Huang at NVIDIA's CES 2019 event, slated for January 7th. The top-spec RTX 2060 trim is based on the TU106-300 ASIC, configured with 1,920 CUDA cores, 120 TMUs, 48 ROPs, 240 tensor cores, and 30 RT cores. With an estimated FP32 compute performance of 6.5 TFLOP/s, the card is expected to perform on par with the GTX 1070 Ti from the previous generation in workloads that lack DXR. VideoCardz also posted performance numbers obtained from NVIDIA's Reviewer's Guide, which point to the same possibility.

In its Reviewer's Guide document, NVIDIA tested the RTX 2060 Founders Edition on a machine powered by a Core i9-7900X processor and 16 GB of memory. The card was tested at 1920 x 1080 and 2560 x 1440, its target consumer segment. Performance numbers obtained at both resolutions point to the card performing within ±5% of the GTX 1070 Ti (and possibly the RX Vega 56 from the AMD camp). The guide also mentions an SEP pricing of the RTX 2060 6 GB at USD $349.99.
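As a rough sanity check on the 6.5 TFLOP/s figure, peak FP32 throughput is usually estimated as CUDA cores times two operations per clock (one fused multiply-add) times the clock speed. The sketch below works backwards from the quoted numbers to the clock speed they imply; the function names are made up for illustration, and the resulting clock is an inference from the estimate, not a confirmed specification.

```python
# Peak FP32 throughput = cores * FLOPs per core per clock * clock rate.
# One fused multiply-add (FMA) counts as 2 floating-point operations.
FLOPS_PER_CORE_PER_CLOCK = 2


def peak_fp32_tflops(cuda_cores: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOP/s."""
    return cuda_cores * FLOPS_PER_CORE_PER_CLOCK * clock_ghz / 1000.0


def implied_clock_ghz(cuda_cores: int, tflops: float) -> float:
    """Clock speed (GHz) that a given peak TFLOP/s figure implies."""
    return tflops * 1000.0 / (cuda_cores * FLOPS_PER_CORE_PER_CLOCK)


# Figures quoted in the article: 1,920 CUDA cores, ~6.5 TFLOP/s estimated.
clock = implied_clock_ghz(1920, 6.5)
print(f"Implied clock: {clock:.2f} GHz")
```

With the article's numbers this works out to a clock of roughly 1.7 GHz.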

Source:

VideoCardz


234 Comments on NVIDIA GeForce RTX 2060 Founders Edition Pictured, Tested

www.anandtech.com/show/537/27

www.anandtech.com/show/742/12

www.guru3d.com/articles_pages/geforce_fx_5800_ultra_the_review,24.html

www.guru3d.com/articles-pages/albatron-geforce-6800-gt-256-mb-review,1.html

These are only examples of the kind of research done when I go looking for info. People can say I talk out my backside all they want, doesn't make them correct. And not all of your numbers jibe well with the info out there. Nor do they jibe with what I remember from back then. Thing is, I'm not going to hold anyone's hands. If I tell you I know something and that you might be wrong, you can bet your life on the fact that I've gone looking to verify info or have personal experience (that also gets re-verified).

This. Yes.

Look at Bethesda. They can't even be bothered to update their engine from something this decade. I don't think CoD's engine has had an overhaul either (also an assumption). I honestly don't care what happens with RTRT, as from the videos I have seen it doesn't even look that much better. I haven't seen it in person, so I will hold final judgement until then. Instead of RTRT, I wish developers would spend those resources on actually finishing their games before launch.

Ah, I was confused. You had quoted someone referring to a 2080 Ti starting at $999 and said you had gotten it for $800. I was going to call that a deal I would consider paying.

Regardless, only a single instance of a price decrease across two generations is sufficient to disprove your presented fact of, "Every generation of new GPUs get a price increase."

That's all well and good, but you also made a completely false statement presented as fact that was incredibly easy to disprove by the very same research. I can only trust your knowledge by what you say, and when you say something which is false, that trust is shaken and becomes uncertain. As it stands, doing independent research and verification has led to exposing the actual facts, something that would never happen if I had blind faith in your every word.

With all that out of the way, it's clear the pricing on the 20 series GPUs is a rare case of a substantial increase over the historical average. Since it has been so long since the last comparable increase, and the increase is subjectively not justifiable, there has been an uproar surrounding it. That now taints the entire product line, so expect the commotion to continue whenever information on the 20 series appears, including this news on the RTX 2060 FE.

Do you even read?

Facts :

1 - Indeed that's a fact but there must be a cause

2 - I couldn't agree more

3 - People complain indeed, but not because of the increase in price. That's where you're forgetting point n°4, which explains this point; it doesn't justify point 1, and it justifies the complaints

So :

4 - The performance/cost ratio for manufacturers is ALWAYS increasing, because performance increases a lot but costs don't grow that fast, and sometimes they go down when shrinking or renaming.

Here is an example of your logic :

1 - a Voodoo card scores 1

2 - a 2080 Ti card scores 1000

3 - In your logic, the starting price should increase in proportion to the increase in performance

So, say, for example, that a Voodoo cost 300$ at the time (like 20 years ago); a 2080 Ti should then cost 300 000$ at launch. See where you're wrong, dude?

A Voodoo would maybe cost a single dollar to produce today. So you have to take that as input for point n°1, which explains point n°3. (old ratio: 1/300 | new ratio: 1, and higher is better)
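The arithmetic behind this example can be sketched as follows. The scores (1 and 1000) and the 300$ Voodoo price are the illustrative figures from the comment itself, not benchmark data; the 999$ figure is the RTX 2080 Ti price quoted elsewhere in this thread, and the function names are hypothetical.

```python
def linear_scaled_price(base_price: float, base_score: float, new_score: float) -> float:
    """Price a card would have if price grew in direct proportion to performance."""
    return base_price * new_score / base_score


def perf_per_dollar(score: float, price: float) -> float:
    """Performance points per dollar; higher is better."""
    return score / price


# Illustrative figures from the comment: Voodoo scores 1 at 300$, 2080 Ti scores 1000.
print(linear_scaled_price(300, 1, 1000))  # price under strictly linear scaling

# Performance per dollar for the Voodoo versus a 2080 Ti at the 999$ quoted in this thread.
old_ratio = perf_per_dollar(1, 300)
new_ratio = perf_per_dollar(1000, 999)
print(f"Improvement in performance per dollar: about {new_ratio / old_ratio:.0f}x")
```

Under these made-up scores, linear scaling gives the 300 000$ figure, while the quoted launch price implies performance per dollar improved by a factor of about 300.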

It's the same for many things like HDDs and SSDs (remember when an SSD cost 1$/GB? The Vertex 2, for example). Remember the cost of an 8086? Now it's a single dollar, even less, and a high-end CPU which is 200000x more powerful is "only" 400$. See? Get out of the matrix, lex, you're blinded.

People are complaining because Nvidia is putting crazy prices on something easy to produce for them. End of story.

And stop with "Vote with your wallet" or "learn how to save money". I could build myself a PC right now with the latest Threadripper or i9 + 2TB SSD + 2080 Ti and not even feel it in my accounts. It's a question of taking people for idiots, and Nvidia has been doing it for many years now.

8800 Ultra becomes ~$1007

8800 GTX (which launched at $649) becomes ~$811

Prices for Turing don't look so bad then…

The 8800 GTX was 600$ or today 725$

8800 Ultra was 800$ or today 999$

The 8800 GT was only 300$ or today 360$

All with inflation.
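For reference, the inflation multiplier implied by each of these pairs can be backed out directly. This is only a sketch using the launch and adjusted prices exactly as quoted in this comment; it does not use official CPI data, and the names are hypothetical.

```python
# (launch price, inflation-adjusted price) in USD, as quoted in the comment.
quoted = {
    "8800 GTX": (600, 725),
    "8800 Ultra": (800, 999),
    "8800 GT": (300, 360),
}


def implied_multiplier(launch_usd: float, adjusted_usd: float) -> float:
    """Inflation factor implied by a launch price and its adjusted price."""
    return adjusted_usd / launch_usd


multipliers = {card: implied_multiplier(*prices) for card, prices in quoted.items()}
for card, m in multipliers.items():
    print(f"{card}: x{m:.2f}")
```

The three pairs imply slightly different factors (between about 1.20 and 1.25), so the quoted adjusted prices are best read as rounded estimates rather than the result of a single conversion rate.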

Here's the thing. The 8800 GT gave you about 75%-80% of the performance the 8800 Ultra did for almost a third of its price. It was fairly close in performance, yet much cheaper.

That's how things were sold to us. The 8800 Ultra was the cream of the crop for fine diners, while offering slightly higher performance.

Today, you have:

RTX 2080 Ti for 999$

RTX 2080 for 799$

RTX 2070 for 499$

The performance difference between the RTX 2070 and RTX 2080 Ti is quite substantial. You don't get 75% of flagship performance for half of its price. You get just a little over half.

The flagship to mainstream ratio is very different today than it was 10-11 years ago, even if "cards were still sold at over 750$". There was compensation for the mid range. Today midrange is absolutely cruel.
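The comparison above can be put into numbers with a simple value ratio: the fraction of flagship performance divided by the fraction of flagship price. The prices and the 75% figure come from this comment; the 0.55 used for the RTX 2070 is an assumed stand-in for "a little over half", not a measured result, and the function name is hypothetical.

```python
def relative_value(perf_fraction: float, price: float, flagship_price: float) -> float:
    """Performance per dollar relative to the flagship (1.0 = same value as flagship)."""
    return perf_fraction / (price / flagship_price)


# 8800 GT vs 8800 Ultra: ~75% of the performance, 300$ vs 800$ (figures from the comment).
gt_value = relative_value(0.75, 300, 800)

# RTX 2070 vs RTX 2080 Ti: 499$ vs 999$; 0.55 is an ASSUMED value for "a little over half".
rtx2070_value = relative_value(0.55, 499, 999)

print(f"8800 GT:  {gt_value:.2f}x the flagship's performance per dollar")
print(f"RTX 2070: {rtx2070_value:.2f}x the flagship's performance per dollar")
```

On these assumptions the 8800 GT delivered about twice the flagship's performance per dollar, while the RTX 2070 lands only slightly above parity with its flagship.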

The only thing 300$ will get you is an RX 590 or a GTX 1060 GDDR5X, both built on technology that is 2+ years old

Fact is, they're using 12nm to launch a $350 card that, if the leak is true, outperforms AMD's $400 V56 and nearly matches the $500 V64. It has RT and DLSS support too, while all Vega has is the gimmicky HBCC that they hyped but that turned out to have zero impact. Meanwhile, all AMD did this year is launch a 12nm flop at $280 that is barely faster than an RX 580, OC vs OC.

Nvidia has had better value cards at the ~$350-400 price point for quite some time, starting with the GTX 970, then the 1070 and 1070 Ti, and now the RTX 2060 is gonna be no exception. They didn't make the jump to 7nm yet, but they are still bringing the performance of the 1080/V64 down to the $350 level at 12nm.

EDIT: For what it's worth, I have $1000 into my monitor and GPU. Going by these leaks, a 2060 and a G-Sync monitor is going to cost a little bit more than that. How bad is the value really?

That does not mean that a price increase is pure inflation; there are many factors here, including varying production costs, competition, etc. But these can only be compared after the price has been corrected for inflation, otherwise any comparison is pointless.