Sunday, December 30th 2018

NVIDIA GeForce RTX 2060 Founders Edition Pictured, Tested

Here are some of the first pictures of NVIDIA's upcoming GeForce RTX 2060 Founders Edition graphics card. You'll know from our older report that there could be as many as six variants of the RTX 2060 based on memory size and type. The Founders Edition is based on the top-spec one with 6 GB of GDDR6 memory. The card looks similar in design to the RTX 2070 Founders Edition, which is probably because NVIDIA is reusing the reference-design PCB and cooling solution, minus two of the eight memory chips. The card continues to pull power from a single 8-pin PCIe power connector.

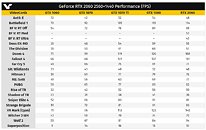

According to VideoCardz, NVIDIA could launch the RTX 2060 on the 15th of January, 2019. It could get an earlier unveiling by CEO Jen-Hsun Huang at NVIDIA's CES 2019 event, slated for January 7th. The top-spec RTX 2060 trim is based on the TU106-300 ASIC, configured with 1,920 CUDA cores, 120 TMUs, 48 ROPs, 240 tensor cores, and 30 RT cores. With an estimated FP32 compute performance of 6.5 TFLOP/s, the card is expected to perform on par with the GTX 1070 Ti from the previous generation in workloads that lack DXR. VideoCardz also posted performance numbers obtained from NVIDIA's Reviewer's Guide, which point to the same possibility.

In its Reviewer's Guide document, NVIDIA tested the RTX 2060 Founders Edition on a machine powered by a Core i9-7900X processor and 16 GB of memory. The card was tested at 1920 x 1080 and 2560 x 1440, the resolutions of its target consumer segment. Performance numbers obtained at both resolutions point to the card performing within ±5% of the GTX 1070 Ti (and possibly the RX Vega 56 from the AMD camp). The guide also mentions SEP pricing of USD 349.99 for the RTX 2060 6 GB.
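As a rough sanity check on the 6.5 TFLOP/s estimate, the sketch below works backwards from the quoted core count to the clock speed that figure would imply. It assumes the usual convention that each CUDA core retires one fused multiply-add (two floating-point operations) per clock. The function names are ours, and the resulting clock is an inference from the numbers above, not a specification taken from the Reviewer's Guide.

```python
# Assumption: one fused multiply-add (2 FP32 operations) per CUDA core per clock.
FLOPS_PER_CORE_PER_CLOCK = 2


def fp32_tflops(cuda_cores: int, clock_ghz: float) -> float:
    """Theoretical FP32 throughput in TFLOP/s for a given core count and clock."""
    # cores * ops/clock * GHz gives GFLOP/s; divide by 1000 for TFLOP/s.
    return cuda_cores * FLOPS_PER_CORE_PER_CLOCK * clock_ghz / 1000.0


def implied_clock_ghz(cuda_cores: int, tflops: float) -> float:
    """Clock speed (GHz) that a quoted TFLOP/s figure implies for a core count."""
    return tflops * 1000.0 / (cuda_cores * FLOPS_PER_CORE_PER_CLOCK)


if __name__ == "__main__":
    cores = 1920      # CUDA cores quoted for the top-spec RTX 2060
    estimate = 6.5    # estimated FP32 TFLOP/s quoted above
    clock = implied_clock_ghz(cores, estimate)
    print(f"Implied clock for {estimate} TFLOP/s on {cores} cores: {clock:.2f} GHz")
```

Under that assumption, the quoted figure corresponds to a clock of roughly 1.69 GHz, which is plausible for a boost clock but should be treated as a back-of-the-envelope number until official specifications are out.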

Source:

VideoCardz

234 Comments on NVIDIA GeForce RTX 2060 Founders Edition Pictured, Tested

If you can't understand that, good day!

*or at least the 99th or 90th percentile, because absolute min fps is usually just a freak occurrence

What is the exact point at which we should stop "fps chasing"? 90 fps? 100 fps? 85 fps? Can you please tell us? Because I was under the impression that it's subjective in every case. I can absolutely feel 100 vs 130 fps instantly. The blind test was pointless and just pulling the wool over the public's eyes.

EDIT: Realistically, 120 Hz is the physical limit to what the human eye can distinguish in real time. While we can still see a difference in "smoothness" above 120 Hz, it's only a slight perceptual difference and can be very subjective from person to person.

And I don't even want to imagine playing any BF title for hundreds of hours. It only brings up images of my own suicide.

I don't play for sides. There is really nothing Cucker has said that is wrong, just that it doesn't apply to what started this debate about the blind test.

The 680 should have been the GK100, which nvidia later renamed into GK110 because of the 7 series or kepler refresh - and sold as the original titan - a 1000$ card! No other high end video card sold for that much before - the GTX 480 had an MSRP of 499$, and the 580 was 450$ - so nvidia doubled their profits with Kepler by simply moving the high and mid end cards around their lineup and "inventing" two new models - the Titan, and later, the GK110b, the 780ti - but not before milking consumers with their initial run of defective GK110 chips, the GTX 780, or GK110-300.

Now nvidia is asking 400$ for a mainstream card, almost as much as the 2, 4 and 5 series high end models cost. But wait - the current flagship, the Titan RTX is now 2500 bloody dollars, and the 2080ti was 1299$ at launch, with prices reaching 1500$ in some places. In fact, it's still 1500$ at most online retailers in my country. F#(k that! 1500$ can buy you a lot of nice stuff - a decent car, a good bike, a boat, loads of clothes, a nice vacation - I'm not forking over to nvidia to pay for a product which has cost around the 400$ mark for the better part of 18 freakin' years.

Don't you realize we're being taken for fools? Companies are treating us like idiots, and we're happy to oblige by forking out more cash for shittier products...

I'd rather go with the modern card with all the latest gizmos, and less power use given the same cost and performance.

The top model of the 600 series was the GTX 690, having two GK104 chips.

I have to correct you there.

GK100 was bad and Nvidia had to do a fairly "last minute" rebrand of "GTX 670 Ti" into "GTX 680". (I remember my GTX 680 box had stickers over all the product names) The GK100 was only used for some compute cards, but the GK110 was a revised version, which ended up in the GTX 780, and was pretty much what the GTX 680 should have been.

You have to remember that Kepler was a major architectural redesign for Nvidia.

2080Ti RTX = V64 rasterization

A 2080Ti should cost ~800/900 IMO and a 2080 600$.

If AMD was in the competition, I think that nvidia would be much closer to that range of prices.

As has been said, don't like it? Vote with your wallet! I sure have. I bought a GTX 1080 at launch and ~2.5 years later I personally have no compelling reason to upgrade. That comes down to my rig, screen, available time to game, what I play, price/performance etc etc etc. Add it all together and that equation is different for every buyer.

Do I think 20 series RTX is worth it? Not yet, but I'm glad someone's doing it. I've seen BFV played with it on and I truly hope Ray Tracing is in the future of gaming.

My take is that when one or both of these two things happen, prices will drop, perhaps by a negligible amount, perhaps significantly:

1. Nvidia clears out all (or virtually all) 10 series stock, which the market still seems hungry for, partly because many offerings offer more than adequate performance for the particular consumer's needs.

2. AMD answers the 20 series upper level performance, or releases cards matching 1080/2070/vega perf at lower prices (or again, both).

If Nvidia manages to tweak the hardware for their 7nm lineup, then we'll have a strong proposition for DXR. Otherwise, we'll have to wait for another generation.

And about differences, I'm really not aware of many, save for the tweaks Nvidia did to make it more fit for DXR (and probably less fit for general computing). I'm sure Anand did a piece highlighting the differences (and I'm sure I read it), but nothing striking has stuck.

That said, yes, R&D does not usually pay off after just one iteration. I was just saying they've already made some of that back.