Thursday, July 16th 2020

The Curious Case of the 12-pin Power Connector: It's Real and Coming with NVIDIA Ampere GPUs

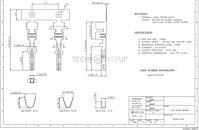

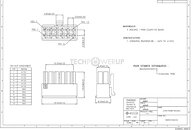

Over the past few days, we've heard chatter about a new 12-pin PCIe power connector for graphics cards, particularly from Chinese-language publication FCPowerUp, which also posted a picture of the connector itself. Igor's Lab also did an in-depth technical breakdown of the connector. TechPowerUp has new information on this from a well-placed industry source: the connector is real, and will be introduced with NVIDIA's next-generation "Ampere" graphics cards. The connector appears to be NVIDIA's brainchild, and not that of any other IP or trade group, such as the PCI-SIG, Molex, or Intel. It was designed in response to two market realities. First, high-end graphics cards inevitably need two power connectors, and it would be neater for consumers to have a single cable than to wrestle with two. Second, lower-end (<225 W) graphics cards can make do with one 8-pin or 6-pin connector.

The new NVIDIA 12-pin connector has six 12 V pins and six ground pins. Its designers specify higher-quality contacts on both the male and female ends, which can handle more current than the pins on 8-pin/6-pin PCIe power connectors. Depending on the PSU vendor, the 12-pin connector can even split down the middle into two 6-pin halves, and could be marketed as "6+6 pin." The faces where the two 6-pin halves meet are kept level so they align seamlessly.

As for power delivery, we have learned that the designers will also specify the cable gauge, and with the right combination of wire gauge and pins, the connector should be capable of delivering 600 W. That is not simply two 6-pin connectors' worth (2 × 75 W = 150 W); the rating is not a scaled-up 6-pin figure. Igor's Lab published an investigative report yesterday with cable-gauge figures that help explain how the connector could deliver far more power than a pair of common 6-pin PCIe connectors.
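To put the 600 W figure in perspective, here is a back-of-the-envelope sketch of the current each +12 V pin would carry. It assumes the load is shared perfectly evenly across the six 12 V pins (real connectors never split current exactly evenly) and uses the commonly cited 150 W rating with three +12 V pins for the PCIe 8-pin as a comparison point. The function and table names are illustrative only.

```python
RAIL_VOLTAGE_V = 12.0  # nominal +12 V rail

def per_pin_current(total_watts: float, live_pins: int, volts: float = RAIL_VOLTAGE_V) -> float:
    """Amps per +12 V pin, assuming the load is shared perfectly evenly."""
    if live_pins <= 0:
        raise ValueError("need at least one +12 V pin")
    return total_watts / volts / live_pins

# name: (power in watts, number of +12 V pins)
CONNECTORS = {
    "PCIe 8-pin (150 W spec)": (150.0, 3),
    "NVIDIA 12-pin (600 W as reported)": (600.0, 6),
}

if __name__ == "__main__":
    for name, (watts, pins) in CONNECTORS.items():
        total_a = watts / RAIL_VOLTAGE_V  # total current drawn from the 12 V rail
        print(f"{name}: {total_a:.1f} A total, {per_pin_current(watts, pins):.2f} A per pin")
```

Under these assumptions the 12-pin works out to 50 A in total and roughly 8.3 A per pin, about double the roughly 4.2 A per pin of an 8-pin at its rated 150 W. That gap is why the contact quality and wire gauge have to be specified.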

Looking at the keying, we can see that it will not be possible to connect two classic 6-pin plugs to it. For example, pin 1 is square on the PCIe 6-pin, but on NVIDIA's 12-pin it has one corner angled. It also won't be possible to use odd combinations like 8-pin + 4-pin EPS or similar; NVIDIA made sure people won't be able to connect their cables the wrong way.

On the topic of the connector's proliferation: in addition to PSU manufacturers launching new generations of products with 12-pin connectors, most prominent manufacturers are expected to release aftermarket modular cables that plug into their existing PSUs. Graphics card vendors will include ketchup-and-mustard adapters that convert 2x 8-pin to 1x 12-pin, while most case and power supply manufacturers will release fancier aftermarket adapters with better aesthetics.
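As a rough sanity check on those bundled adapters: assuming an adapter can only pass on what its input connectors are rated for (150 W per 8-pin under the PCIe spec) and the x16 slot supplies its usual 75 W, a card fed through a 2x 8-pin adapter stays within those ratings only up to 375 W, well below the 600 W the 12-pin itself is said to handle. The function name and table below are hypothetical, for illustration only.

```python
from typing import Sequence

# Nominal PCIe power ratings in watts.
SPEC_WATTS = {"slot": 75, "6-pin": 75, "8-pin": 150}

def adapter_budget(inputs: Sequence[str], include_slot: bool = True) -> int:
    """In-spec board power when a card's 12-pin is fed through an adapter.

    Assumes the adapter is limited by its input connectors' ratings; it does
    not lift the budget to the 12-pin's 600 W ceiling.
    """
    total = sum(SPEC_WATTS[name] for name in inputs)
    if include_slot:
        total += SPEC_WATTS["slot"]  # power drawn through the PCIe x16 slot
    return total

print(adapter_budget(["8-pin", "8-pin"]))  # 375
```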

Update 08:37 UTC: I made an image in Photoshop showing the new connector's layout, keying, and voltage lines in a single, easy-to-understand graphic.

Sources:

FCPowerUp (photo), Igor's Lab

178 Comments on The Curious Case of the 12-pin Power Connector: It's Real and Coming with NVIDIA Ampere GPUs

LOOOL

Since this new 12-pin connector seems to be monolithic (although it's mentioned it can be split in half, which has me puzzled), they wouldn't need any sense pins, as it's always a full 12-pin that gets plugged in.

Or, if it can indeed be split for smaller GPUs, then you're right: it would require one sense pin on the second half.

The 3dfx actually came with its own power brick that plugged into the wall to feed the GPU.

Apart from that, it's a weird decision. 2x 8-pin should be more than enough for most GPUs, unless this thing is meant to feed GPUs in enterprise markets or machines.

Lots of wires are overrated as well. A single yellow wire on a well-built PSU could easily push 10 A, even up to 13 A.

Remember the NVIDIA HGX GPU? Well... that's a 400 W BEAST, but it doesn't have any connector to power it, because... well... 4x 8-pin PEG cables are not an option.

Definitely, 600 W for a single VGA card (single or dual GPU doesn't matter) is too much for consumers, but not for pro/server/HPC applications.

Must have RGB lighting in this new spec and adapters!

I get that FCPowerUp is legit; I'm just confused AF.

To be fair, if it's late at night and you're just reading things literally... sure.

But context is important here: the piece is too high-effort and well-referenced to be a joke, especially when it's just a single line at the bottom of the page.

Anyway, he has already added more material. Although I prefer the Igor's Lab write-up, FCPowerUp's info is about the same, so go check it out :D

I can't see this coming to desktops. AI-focused cards? Yes. Desktops? No. Why? Because places like California would have a fit over power usage. To me, the industry is very conscious of its power usage and NOT trying to draw attention to itself.

www.techpowerup.com/225808/new-california-energy-commission-regulation-threatens-pre-built-gaming-desktops

www.techpowerup.com/249605/all-asus-motherboards-meet-stringent-new-california-energy-commission-standards

wondering when cards will just have 2x12pin LOL

Just saying!